P-1 AI, a startup founded by former Google DeepMind researcher Aleksa Gordić and former Airbus CTO Paul Eremenko, has raised $23 million in seed funding.

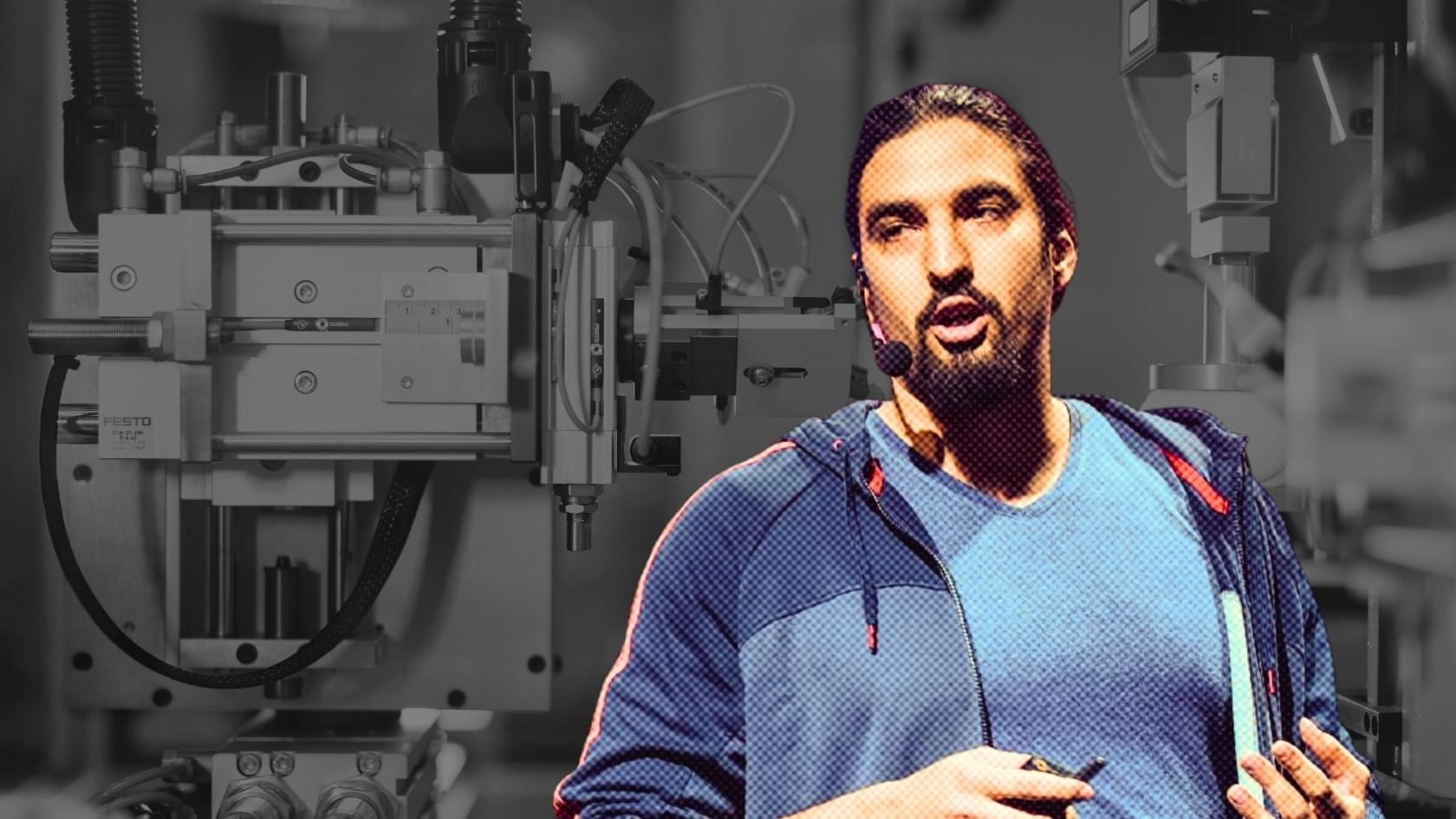

Background/Objectives: Accurately predicting protein–ligand binding affinity is essential in drug discovery for identifying effective compounds. While existing sequence-based machine learning models for binding affinity prediction have shown potential, they lack accuracy and robustness in pattern recognition, which limits their generalizability across diverse and novel binding complexes. To overcome these limitations, we developed GNNSeq, a novel hybrid machine learning model that integrates a Graph Neural Network (GNN) with Random Forest (RF) and XGBoost.

Methods: GNNSeq predicts ligand binding affinity by extracting molecular characteristics and sequence patterns from protein and ligand sequences. The fully optimized GNNSeq model was trained and tested on subsets of the PDBbind dataset. The novelty of GNNSeq lies in its exclusive reliance on sequence features, a hybrid GNN framework, and an optimized kernel-based context-switching design. By relying exclusively on sequence features, GNNSeq eliminates the need for pre-docked complexes or high-quality structural data, allowing for accurate binding affinity predictions even when interaction-based or structural information is unavailable. The integration of GNN, XGBoost, and RF improves GNNSeq's performance through hierarchical sequence learning, handling of complex feature interactions, and variance reduction, forming a robust ensemble that improves predictions and mitigates overfitting. GNNSeq's unique kernel-based context-switching scheme optimizes model efficiency and runtime, dynamically adjusts feature weighting between sequence and basic structural information, and improves predictive accuracy and model generalization.

Results: In benchmarking, GNNSeq performed comparably to several existing sequence-based models and achieved a Pearson correlation coefficient (PCC) of 0.784 on the PDBbind v.2020 refined set and 0.84 on the PDBbind v.2016 core set. During external validation with the DUDE-Z v.2023.06.20 dataset, GNNSeq attained an average area under the curve (AUC) of 0.74, demonstrating its ability to distinguish active ligands from decoys across diverse ligand–receptor pairs. To further evaluate its performance, we combined GNNSeq with two additional specialized models that integrate structural and protein–ligand interaction features. When tested on a curated set of well-characterized drug–target complexes, the hybrid models achieved an average PCC of 0.89, with the top-performing model reaching a PCC of 0.97. GNNSeq was designed with a strong emphasis on computational efficiency, training on 5000+ complexes in 1 h and 32 min, with real-time affinity predictions for test complexes.

Conclusions: GNNSeq provides an efficient and scalable approach for binding affinity prediction, offering improved accuracy and generalizability while enabling large-scale virtual screening and cost-effective hit identification. GNNSeq is publicly available in a server-based graphical user interface (GUI) format.
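For readers unfamiliar with the two metrics quoted in the abstract, the following is a minimal sketch of how a Pearson correlation coefficient and a ROC AUC can be computed. It is not taken from GNNSeq; the function names `pearson_cc` and `roc_auc` and all numbers are hypothetical, chosen only for illustration.

```python
import math

def pearson_cc(predicted, measured):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_m = sum(measured) / n
    cov = sum((p - mean_p) * (m - mean_m) for p, m in zip(predicted, measured))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_m = sum((m - mean_m) ** 2 for m in measured)
    return cov / math.sqrt(var_p * var_m)

def roc_auc(scores, labels):
    """AUC as the fraction of (active, decoy) pairs in which the active
    receives the higher score; tied pairs count as half."""
    actives = [s for s, y in zip(scores, labels) if y == 1]
    decoys = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(
        1.0 if a > d else 0.5 if a == d else 0.0
        for a in actives
        for d in decoys
    )
    return wins / (len(actives) * len(decoys))

# Hypothetical affinities (e.g. pKd values), not data from the paper.
measured = [4.0, 5.0, 6.0, 7.0]
predicted = [4.5, 4.8, 6.4, 6.9]
pcc = pearson_cc(predicted, measured)

# Hypothetical screening scores: label 1 = active ligand, 0 = decoy.
scores = [0.9, 0.8, 0.3, 0.6, 0.2]
labels = [1, 1, 1, 0, 0]
auc = roc_auc(scores, labels)
```

A PCC near 1 means predicted affinities rise and fall with the measured ones. An AUC of 0.5 corresponds to random ranking of actives against decoys, and 1.0 to perfect separation.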

Researchers have created a light-powered soft robot that can carry loads through the air along established tracks, similar to cable cars or aerial trams. The soft robot operates autonomously, can climb slopes at angles of up to 80 degrees, and can carry loads up to 12 times its weight.

“We’ve previously created soft robots that can move quickly through the water and across solid ground, but wanted to explore a design that can carry objects through the air across open space,” says Jie Yin, associate professor of mechanical and aerospace engineering at North Carolina State University and corresponding author of a paper on the work published in Advanced Science.

“The simplest way to do this is to follow an established track—similar to the aerial trams you see in the mountains. And we’ve now demonstrated that this is possible.”

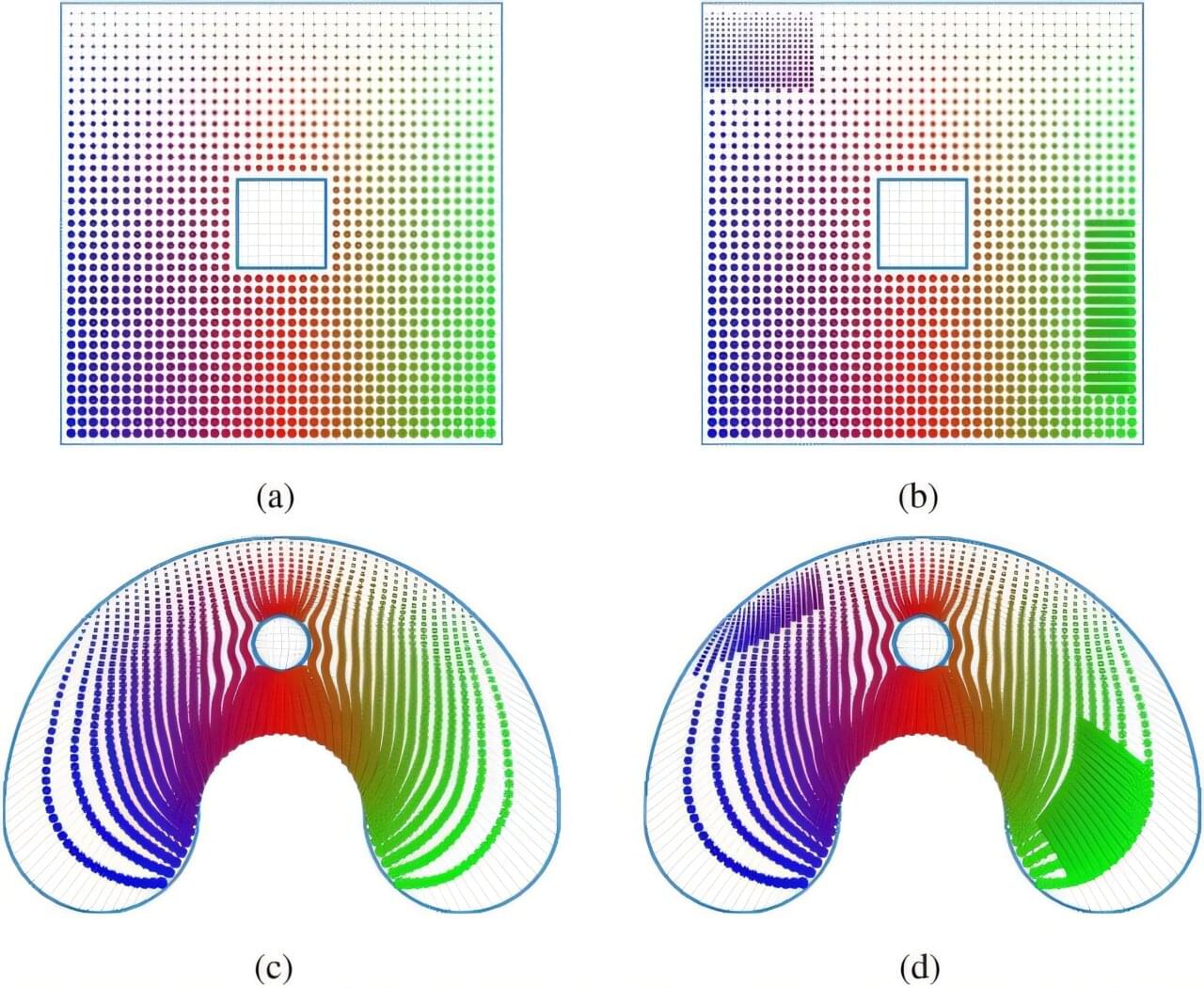

A research team from the Skoltech AI Center proposed a new neural network architecture for generating structured curved coordinate grids, an important tool for calculations in physics, biology, and even finance. The study is published in the Scientific Reports journal.

“Building a coordinate grid is a key task for modeling. Breaking down a complex space into manageable pieces is necessary, as it allows you to accurately determine the changes in different quantities—temperature, speed, pressure, and so on,” commented the lead author of the paper, Bari Khairullin, a Ph.D. student from the Computational and Data Science and Engineering program at Skoltech.

“Without a good grid, calculations become either inaccurate or impossible. In physics, they help model the movement of liquids and gases, in biology, tissue growth and drug distribution, and in finance, they predict market fluctuations. The proposed approach opens up new possibilities in building grids using artificial intelligence.”
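To make the idea of a structured curved grid concrete, here is a minimal sketch that maps a regular grid on the unit square onto a quarter annulus with a simple analytic formula. This is a classical algebraic mapping and not the neural network approach from the study; the function `annular_grid` and its parameters are hypothetical names used only for illustration.

```python
import math

def annular_grid(n_xi, n_eta, r_inner=1.0, r_outer=2.0, theta_max=math.pi / 2):
    """Return an n_xi x n_eta structured grid of (x, y) nodes.

    Logical coordinates (xi, eta) in [0, 1] x [0, 1] are mapped to polar
    coordinates: xi controls the radius, eta controls the angle.
    """
    grid = []
    for i in range(n_xi):
        xi = i / (n_xi - 1)
        r = r_inner + xi * (r_outer - r_inner)
        row = []
        for j in range(n_eta):
            eta = j / (n_eta - 1)
            theta = eta * theta_max
            row.append((r * math.cos(theta), r * math.sin(theta)))
        grid.append(row)
    return grid

# 5 radial layers, 9 nodes along each arc.
grid = annular_grid(5, 9)
```

Each node keeps its logical neighbors, `grid[i][j]` next to `grid[i + 1][j]` and `grid[i][j + 1]`, even though the cells are curved in physical space. That regular indexing is what makes a grid "structured", and it is the property a learned mapping for more complex domains would also need to preserve.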

At a time when we run ourselves ragged to meet society’s expectations of productivity, performance and time optimization, is it right that our robot vacuum cleaners and other smart appliances should sit idle for most of the day?

Computer scientists at the University of Bath in the UK think not. In a new paper, they propose over 100 ways to tap into the latent potential of our robotic devices. The researchers say these devices could be reprogrammed to perform helpful tasks around the home beyond their primary functions, keeping them physically active during their regular downtime.

New functions could include playing with the cat, watering plants, carrying groceries from car to kitchen, delivering breakfast in bed and closing windows when it rains.

Humans are known to make mental associations between various real-world stimuli and concepts, including colors. For example, red and orange are typically associated with words such as “hot” or “warm,” blue with “cool” or “cold,” and white with “clean.”

Interestingly, some past psychology studies have shown that even if some of these associations arise from people’s direct experience of seeing colors in the world around them, many people who were born blind still make similar color-adjective associations. The processes underpinning the formation of associations between colors and specific adjectives have not yet been fully elucidated.

Researchers at the University of Wisconsin-Madison recently carried out a study to further investigate how language contributes to how we learn about color, using mathematical and computational tools, including OpenAI’s GPT-4 large language model (LLM). Their findings, published in Communications Psychology, suggest that color-adjective associations are rooted in the structure of language itself and are thus not only learned through experience.

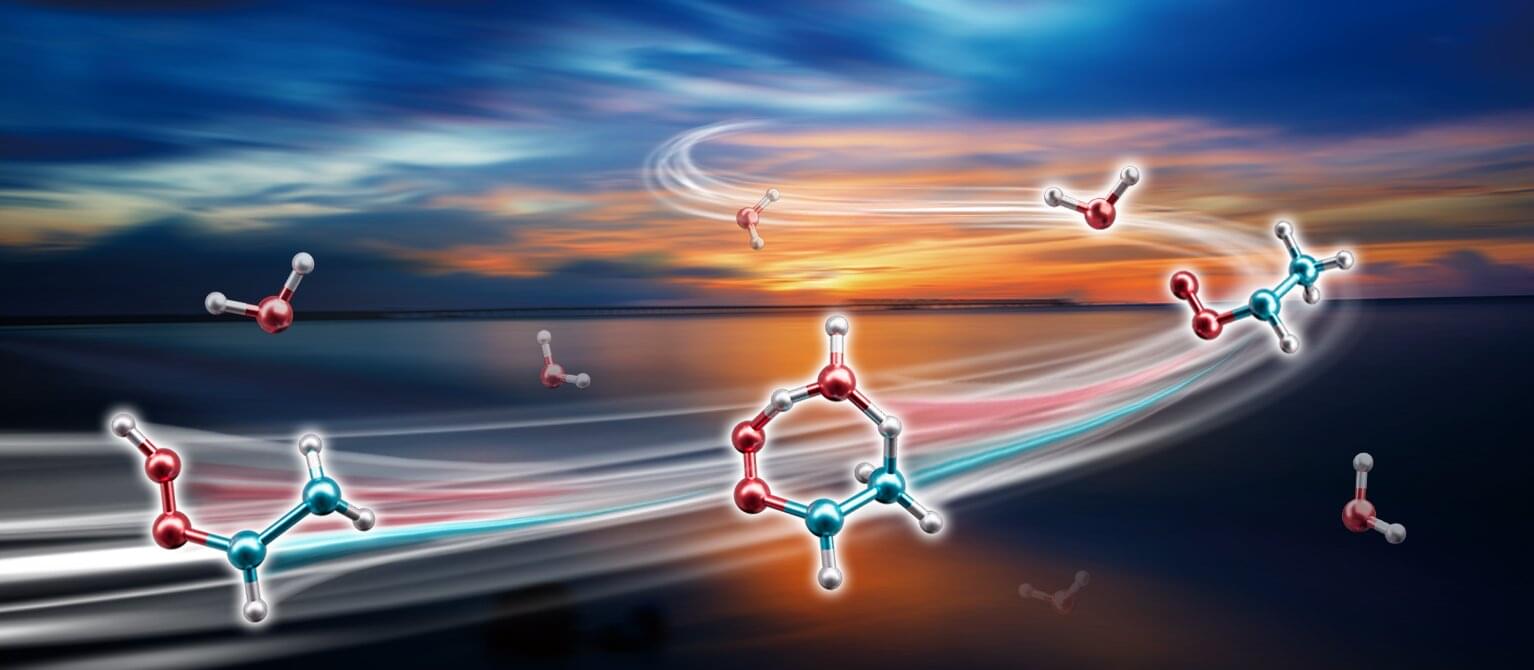

Criegee intermediates (CIs), highly reactive species formed when ozone reacts with alkenes in the atmosphere, play a crucial role in generating hydroxyl radicals (the atmosphere’s “cleansing agents”) and aerosols that impact climate and air quality. Among these intermediates, syn-CH3CHOO is particularly important, accounting for 25%–79% of all CIs depending on the season.

Until now, scientists have believed that syn-CH3CHOO primarily disappeared through self-decomposition. However, in a study published in Nature Chemistry, a team led by Profs. Yang Xueming, Zhang Donghui, Dong Wenrui and Fu Bina from the Dalian Institute of Chemical Physics (DICP) of the Chinese Academy of Sciences has uncovered a surprising new pathway: syn-CH3CHOO’s reaction with atmospheric water vapor is approximately 100 times faster than previously predicted by theoretical models.

Using advanced laser techniques, the researchers experimentally measured the rate of the reaction between syn-CH3CHOO and water vapor and found it to be far higher than predicted. To uncover the reason behind this acceleration, they constructed a high-accuracy full-dimensional (27D) potential energy surface using the fundamental invariant-neural network approach and performed full-dimensional dynamical calculations.

Researchers at Rice University have developed a new machine learning (ML) algorithm that excels at interpreting the “light signatures” (optical spectra) of molecules, materials and disease biomarkers, potentially enabling faster and more precise medical diagnoses and sample analysis.

“Imagine being able to detect early signs of diseases like Alzheimer’s or COVID-19 just by shining a light on a drop of fluid or a tissue sample,” said Ziyang Wang, an electrical and computer engineering doctoral student at Rice who is a first author on a study published in ACS Nano. “Our work makes this possible by teaching computers how to better ‘read’ the signal of light scattered from tiny molecules.”

Every material or molecule interacts with light in a unique way, producing a distinct pattern, like a fingerprint. Optical spectroscopy, which entails shining a laser on a material to observe how light interacts with it, is widely used in chemistry, materials science and medicine. However, interpreting spectral data can be difficult and time-consuming, especially when differences between samples are subtle. The new algorithm, called Peak-Sensitive Elastic-net Logistic Regression (PSE-LR), is specially designed to analyze light-based data.
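As background for the algorithm's name, a standard elastic-net logistic regression fits a classifier by minimizing the objective below. This is the textbook formulation, given as a sketch only: the peak-sensitive modification that distinguishes PSE-LR is not described in the text above and is not shown here, and all symbols are assumptions introduced for illustration.

```latex
\min_{w,\,b}\;
  -\frac{1}{n}\sum_{i=1}^{n}
  \Big[\, y_i \log \sigma\!\left(w^{\top} x_i + b\right)
        + (1 - y_i)\log\!\big(1 - \sigma\!\left(w^{\top} x_i + b\right)\big) \Big]
  \;+\; \lambda\Big( \alpha \lVert w \rVert_1
        + \frac{1-\alpha}{2}\,\lVert w \rVert_2^2 \Big),
\qquad
\sigma(z) = \frac{1}{1 + e^{-z}}
```

Here $x_i$ would be one measured spectrum (one intensity value per spectral channel), $y_i \in \{0, 1\}$ its class label, $\lambda \ge 0$ the overall regularization strength, and $\alpha \in [0, 1]$ the balance between the two penalties. The $\ell_1$ term drives many weights to exactly zero, so the fitted model depends on only a small set of spectral channels, while the $\ell_2$ term keeps the fit stable when neighboring channels are strongly correlated.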