An AI model can scan your brain with non-invasive equipment and convert your thoughts into typed sentences — with no implants required.

Artificial Intelligence (AI), particularly large language models like GPT-4, has shown impressive performance on reasoning tasks. But does AI truly understand abstract concepts, or is it just mimicking patterns? A new study from the University of Amsterdam and the Santa Fe Institute reveals that while GPT models perform well on some analogy tasks, they fall short when the problems are altered, highlighting key weaknesses in AI’s reasoning capabilities. The work is published in Transactions on Machine Learning Research.

Analogical reasoning is the ability to draw a comparison between two different things based on their similarities in certain aspects. It is one of the most common methods by which human beings try to understand the world and make decisions. An example of analogical reasoning: cup is to coffee as soup is to ??? (the answer being: bowl).

Large language models (LLMs) like GPT-4 perform well on various tests, including those requiring analogical reasoning. But can AI models truly engage in general, robust reasoning or do they over-rely on patterns from their training data? This study by language and AI experts Martha Lewis (Institute for Logic, Language and Computation at the University of Amsterdam) and Melanie Mitchell (Santa Fe Institute) examined whether GPT models are as flexible and robust as humans in making analogies.

A little over a year after releasing two open Gemma AI models built from the same technology behind its Gemini AI, Google is updating the family with Gemma 3.

According to the blog post, these models are intended for developers creating AI applications that can run wherever they’re needed, on anything from a phone to a workstation. They support over 35 languages and can analyze text, images, and short videos.

The company claims that it’s the world’s best single-accelerator model, outperforming competition from Meta’s Llama, DeepSeek, and OpenAI on a host with a single GPU, and that it is optimized for running on Nvidia’s GPUs and dedicated AI hardware.

Gemma 3’s vision encoder is also upgraded, with support for high-res and non-square images, while the new ShieldGemma 2 image safety classifier is available for use to filter both image input and output for content classified as sexually explicit, dangerous, or violent.

To go deeper into those claims, you can check out the 26-page technical report.

Last year it was unclear how much interest there would be in a model like Gemma. However, the popularity of DeepSeek and others shows there is interest in AI tech with lower hardware requirements.

A team from Princeton University has successfully used artificial intelligence (AI) to solve equations that control the quantum behavior of individual atoms and molecules to replicate the early stages of ice formation. The simulation shows how water molecules transition into solid ice with quantum accuracy.

Roberto Car, Princeton’s Ralph W. *31 Dornte Professor in Chemistry, who co-pioneered the approach of simulating molecular behaviors based on the underlying quantum laws more than 35 years ago, said, “In a sense, this is like a dream come true. Our hope then was that eventually, we would be able to study systems like this one. Still, it was impossible without further conceptual development, and that development came via a completely different field, that of artificial intelligence and data science.”

Modeling the early stages of freezing water, the ice nucleation process, could increase the precision of climate and weather modeling, as well as other processes like flash-freezing food. The new approach could help track the activity of hundreds of thousands of atoms over periods thousands of times longer than in early studies, albeit still just fractions of a second.

Shanghai’s robotics revolution is here! At a cutting-edge startup, humanoid robots are being trained to navigate the real world, learning tasks from sorting objects to taking coffee. But how does AI collect and refine the data that powers these machines? We got access to a 2,000-square-meter data factory, where robots are trained through motion capture, human guidance, and real-world simulations. With China’s tech and supply chain advantages, could these humanoids become part of our daily lives sooner than we think? #HumanoidRobots #AI #FutureTech


A new study has been published in Nature Communications, presenting the first comprehensive atlas of allele-specific DNA methylation across 39 primary human cell types. The study was led by Ph.D. student Jonathan Rosenski under the guidance of Prof. Tommy Kaplan from the School of Computer Science and Engineering and Prof. Yuval Dor from the Faculty of Medicine at the Hebrew University of Jerusalem and Hadassah Medical Center.

Using machine learning algorithms and deep whole-genome bisulfite sequencing on freshly isolated and purified cell populations, the study unveils a detailed landscape of genetic and epigenetic regulation that could reshape our understanding of gene expression and disease.

A key focus of the research is identifying differences between the two alleles and, in some cases, demonstrating that these differences result from genomic imprinting, meaning that it is not the sequence (genetics) that matters, but rather whether the allele is inherited from the mother or the father.

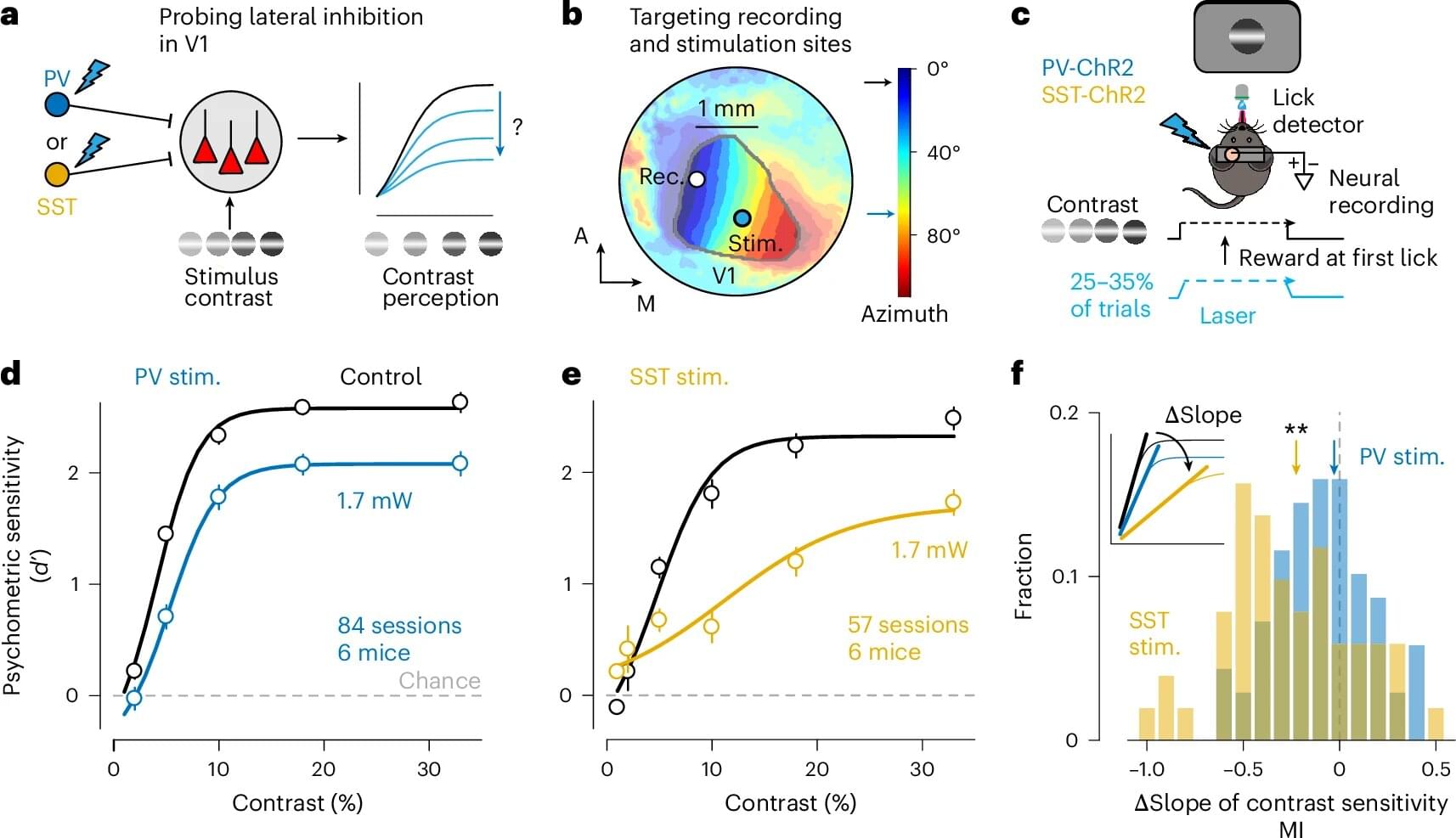

A multidisciplinary team of researchers at Georgia Tech has discovered how lateral inhibition helps our brains process visual information, and it could expand our knowledge of sensory perception, leading to applications in neuro-medicine and artificial intelligence.

Lateral inhibition is when certain neurons suppress the activity of their neighboring neurons. Imagine an artist drawing, darkening the lines around the contours, highlighting the boundaries between objects and space, or objects and other objects. Comparably, in the visual system, lateral inhibition sharpens the contrast between different visual stimuli.

“This research is really getting at how our visual system not only highlights important things, but also actively suppresses irrelevant information in the background,” said lead researcher Bilal Haider, associate professor in the Wallace H. Coulter Department of Biomedical Engineering. “That ability to filter out distractions is crucial.”
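As a rough illustration of the general idea of lateral inhibition described above, and not the Georgia Tech team's model, the toy sketch below treats a row of units where each unit's output is its own input minus a fraction of its two neighbors' average input. The function name `lateral_inhibition`, the `strength` parameter, and the edge handling are all assumptions made for this example.

```python
def lateral_inhibition(signal, strength=0.5):
    """Toy model: each unit's output is its input minus a fraction
    (strength) of the mean input of its two immediate neighbors."""
    n = len(signal)
    out = []
    for i, x in enumerate(signal):
        # At the ends of the row, reuse the unit's own value as the
        # missing neighbor (an arbitrary choice for this sketch).
        left = signal[i - 1] if i > 0 else x
        right = signal[i + 1] if i < n - 1 else x
        out.append(x - strength * (left + right) / 2)
    return out


# A dim region next to a bright region: a simple brightness edge.
step = [1.0, 1.0, 1.0, 1.0, 3.0, 3.0, 3.0, 3.0]
response = lateral_inhibition(step)
print(response)  # [0.5, 0.5, 0.5, 0.0, 2.0, 1.5, 1.5, 1.5]
```

In the flat regions the response settles at 0.5 and 1.5, but right at the boundary it dips to 0.0 on the dim side and rises to 2.0 on the bright side. That undershoot and overshoot is the sense in which suppression from neighbors makes an edge stand out against its surroundings.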

Can Tesla REALLY Build Millions of Optimus Bots?

## Tesla is poised to revolutionize robotics and sustainable energy by leveraging its innovative manufacturing capabilities and vertical integration to produce millions of Optimus bots efficiently and cost-effectively

## Questions to inspire discussion

## Manufacturing and Production

Q: [...]’s low model count strategy benefit their production?
A: Tesla’s speed of innovation and ability to build millions of robots quickly gives them a key advantage in mass producing and scaling manufacturing for humanoid robots like Optimus.

Q: [...]’s factory design strategies support rapid production scaling?
A: Tesla [...]

## Cost and Efficiency

Q: [...]’s vertical integration impact their cost structure?
A: Tesla [...] AI brain in-house, Tesla can avoid paying high margins to external suppliers like Nvidia for the training portion of the brain.

## Technology and Innovation

Q: [...]’s experience in other industries benefit Optimus development?
A: Tesla’s own supercomputer, Cortex, and AI training cluster are crucial for developing and training the Optimus bot.

## Quality and Reliability

Q: [...]’s manufacturing experience contribute to Optimus quality?
A: Tesla [...]

## Market Strategy

Q: [...]’s focus on vehicle appeal relate to Optimus production?
A: Tesla [...]

## Scaling and Demand

Researchers at Michigan State University have refined an innovation that has the potential to improve safety, reduce severe injury and increase survival rates in situations including car accidents, sports, law enforcement operations and more.

In 2020 and 2022, Weiyi Lu, an associate professor in MSU’s College of Engineering, developed a liquid nanofoam material made up of tiny holes surrounded by water that has been shown to protect the brain against traumatic injuries when used as a liner in football helmets. Now, MSU engineers and scientists have improved this technology to shield vital internal organs as well.

Falls, motor vehicle crashes and other kinds of collisions can cause blunt force trauma and damage to bodily organs that can lead to life-threatening emergencies. These injuries are often the result of intense mechanical force or pressure that doesn’t penetrate the body like a cut, but causes serious damage to the body’s organs, including internal lacerations, ruptures, bleeding and organ failure.

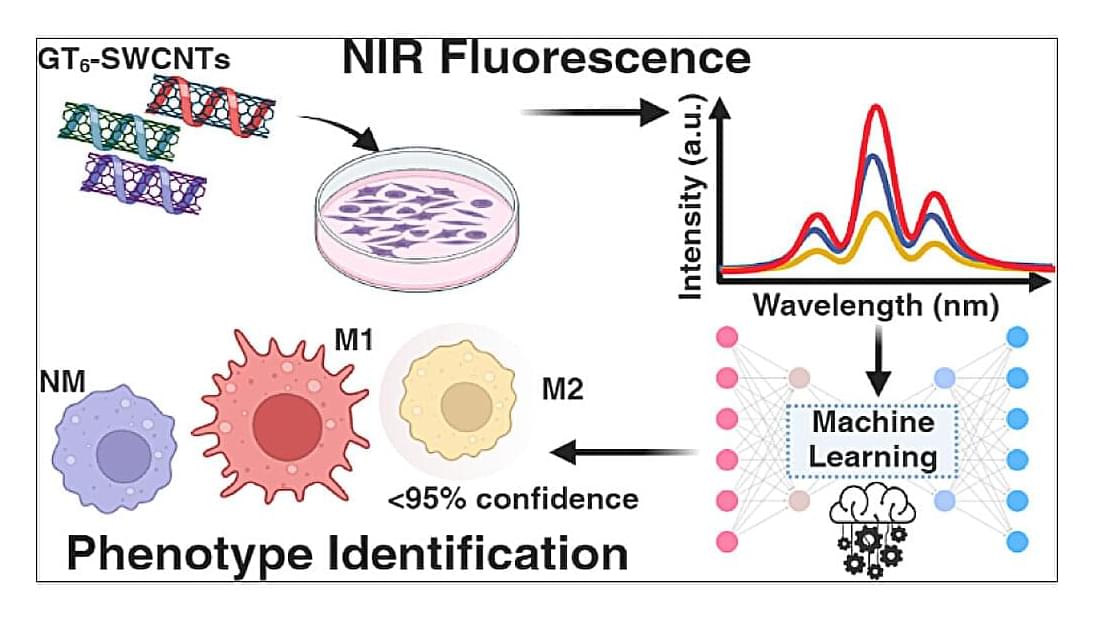

Early diagnosis is crucial in disease prevention and treatment. Many diseases can be identified not just through physical signs and symptoms but also through changes at the cellular and molecular levels.

When it comes to a majority of chronic conditions, early detection, particularly at the cellular level, gives patients a better chance for successful treatment. Detection of early changes at the cellular level can also dramatically improve cancer outcomes.

It’s against this backdrop that a University of Rhode Island professor and a former Ph.D. graduate student looked at understanding the smallest changes between two similar cells.