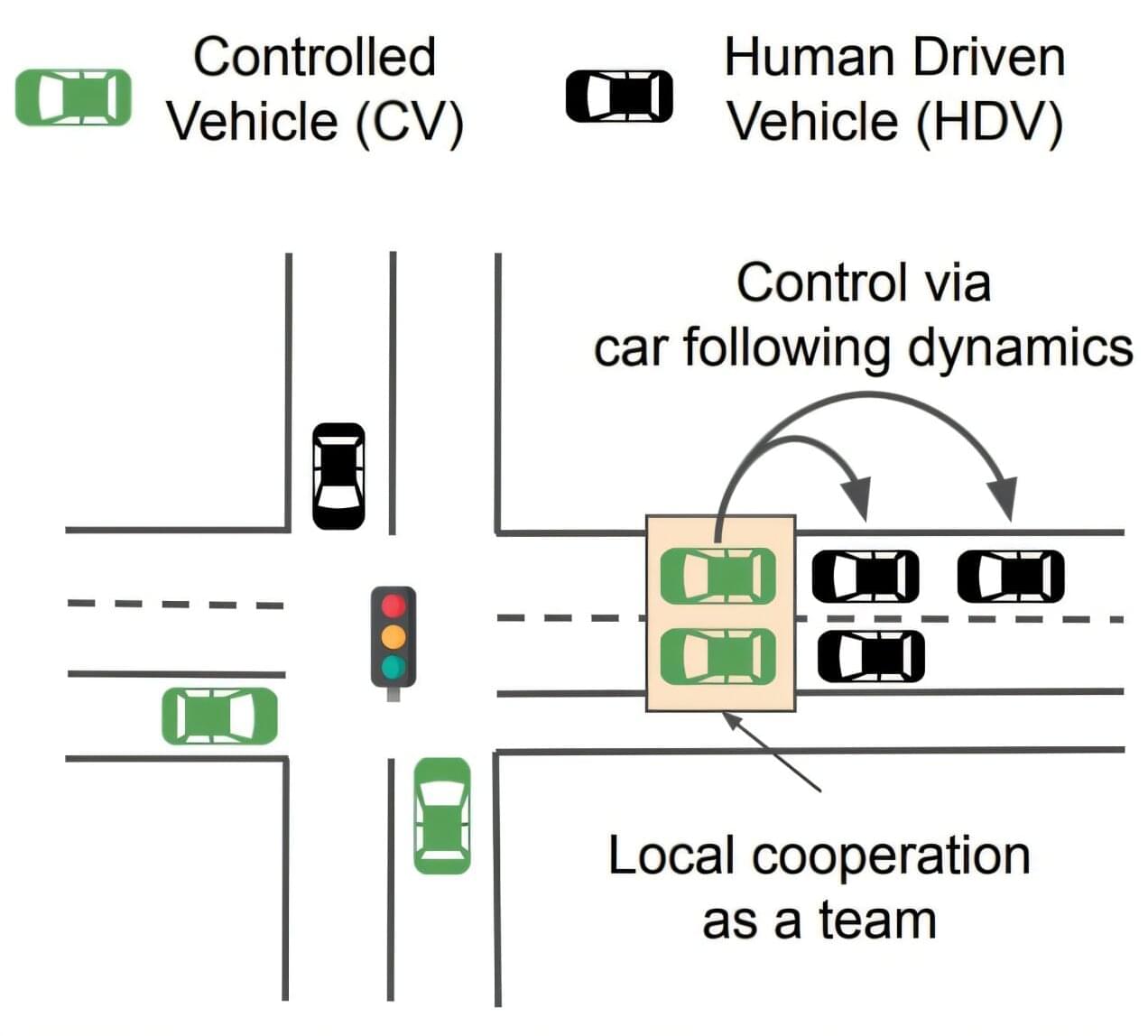

Eco-driving involves making small adjustments to minimize unnecessary fuel consumption. For example, as cars approach a traffic light that has turned red, “there’s no point in me driving as fast as possible to the red light,” she says. By just coasting, “I am not burning gas or electricity in the meantime.” If one car, such as an automated vehicle, slows down at the approach to an intersection, then the conventional, non-automated cars behind it will also be forced to slow down, so the impact of such efficient driving can extend far beyond just the car that is doing it.

That’s the basic idea behind eco-driving, Wu says. But figuring out the impact of such measures means tackling “challenging optimization problems” involving many different factors and parameters, “so there is a wave of interest right now in how to solve hard control problems using AI.”

The new benchmark system that Wu and her collaborators developed based on urban eco-driving, which they call “IntersectionZoo,” is intended to help address part of that need. The benchmark was described in detail in a paper presented at the 2025 International Conference on Learning Representations in Singapore.