Three open questions about the Pentagon’s push for generative AI.

“Second Variety” is a science fiction novelette by American writer Philip K. Dick, first published in Space Science Fiction magazine in May 1953. Set in a world where war between the Soviet Union and the United Nations has reduced most of the planet to a barren wasteland, the story concerns the discovery, by the few remaining soldiers, that self-replicating robots originally built to assassinate Soviet agents have gained sentience and are now plotting against both sides. It is one of many stories by Dick to examine the implications of nuclear war, particularly after it has destroyed much or all of the planet. The story was adapted into the movie Screamers in 1995.

00:00 Intro
01:03 Peek into the plot
03:33 Self-Replication and Technological Autonomy
06:51 Current Autonomous Warfare Capabilities
10:30 Space Warfare and the Projection of Terrestrial Conflict
13:14 Current State of Space Warfare
15:22 Wrapping Up

===============

🎬 Loitering munitions system WARMATE

📙 🇺🇸 Superintelligence: Paths, Dangers, Strategies https://bookshop.org/a/98861/97801987…

The Human Terrain Project — PENTAGON’S attempt to understand The Enemy | ENDEVR Documentary

📙 🇺🇸 War in the Age of Intelligent Machines https://monoskop.org/images/c/c0/DeLa…

===============

Buy the book featured in this video:

📙 🇺🇸 Buy the book Second Variety https://bookshop.org/a/98861/97988883…

🎧 Free Audiobook: Post-Apocalyptic Story “Second Variet…

===============

You can find my take on things summarised in the books I wrote.

📙 🇺🇸 Chronicles of the Machine — Simulated conversations with Philip K. Dick https://buchshop.bod.ch/chronicles-of…

📙 🇺🇸 Zero Person: Reframing Autistic Cognition Beyond the Self https://buchshop.bod.ch/zero-person-e…

📙 🇩🇪 Zero Person: Autistische Kognition jenseits des Selbst https://buchshop.bod.ch/zero-person-e…

📙 🇺🇸 Book order: The end of the I https://www.bod.ch/buchshop/the-end-o…

📙 🇩🇪 Book order: Das Ende des Ichs https://www.bod.ch/buchshop/das-ende–…

📙 🇺🇸 #actuallyautistic — Living with Autism – A Poetic Exploration of the Spectrum https://buchshop.bod.ch/actuallyautis…

===============

Image credits: Freepik.

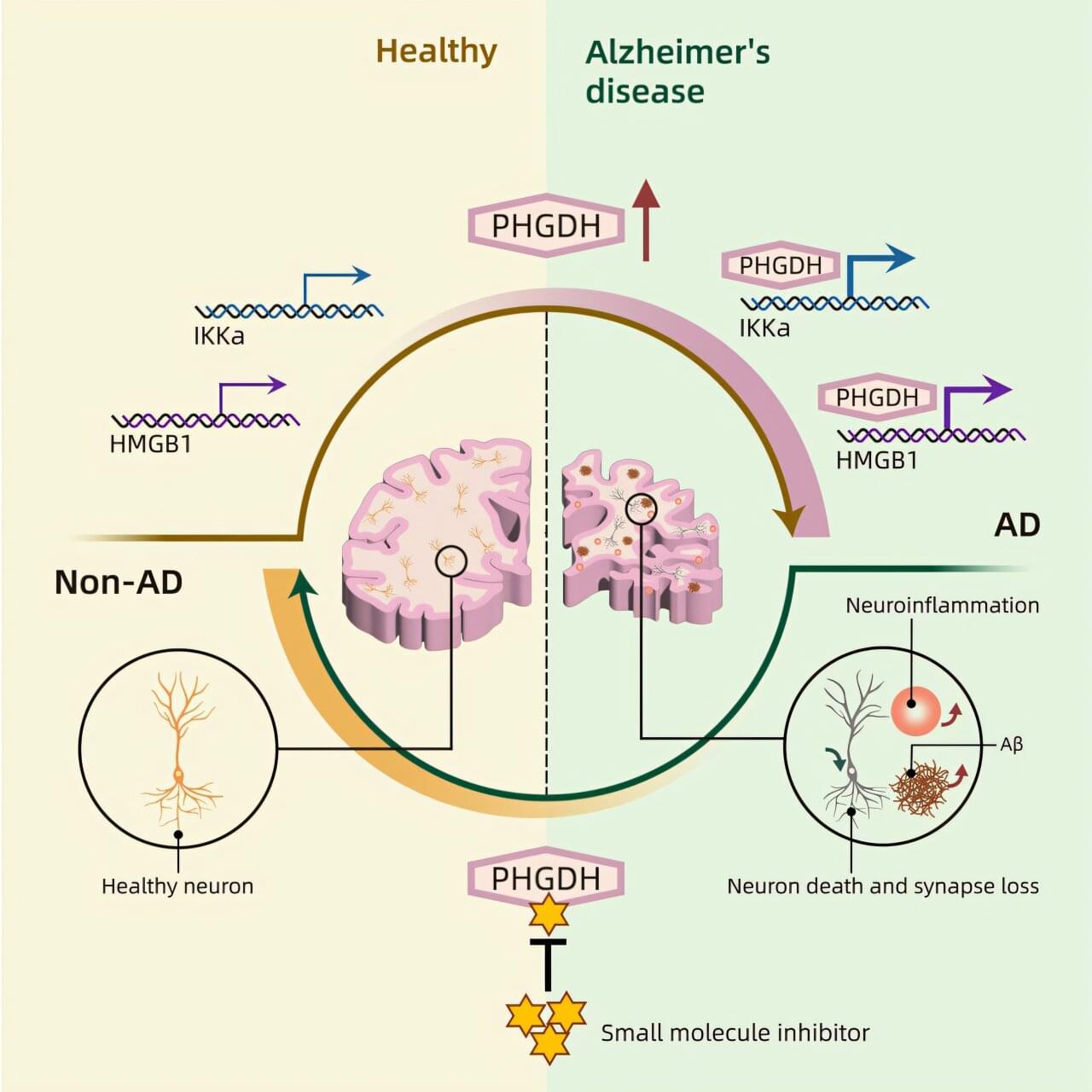

A new study found that a gene recently recognized as a biomarker for Alzheimer’s disease is actually a cause of it, due to its previously unknown secondary function. Researchers at the University of California San Diego used artificial intelligence to help both unravel this mystery of Alzheimer’s disease and discover a potential treatment that obstructs the gene’s moonlighting role.

The research team published their results on April 23 in the journal Cell.

About one in nine people aged 65 and older has Alzheimer’s disease, the most common cause of dementia. While some particular genes, when mutated, can lead to Alzheimer’s, that connection only accounts for a small percentage of all Alzheimer’s patients. The vast majority of patients do not have a mutation in a known disease-causing gene; instead, they have “spontaneous” Alzheimer’s, and the causes for that are unclear.

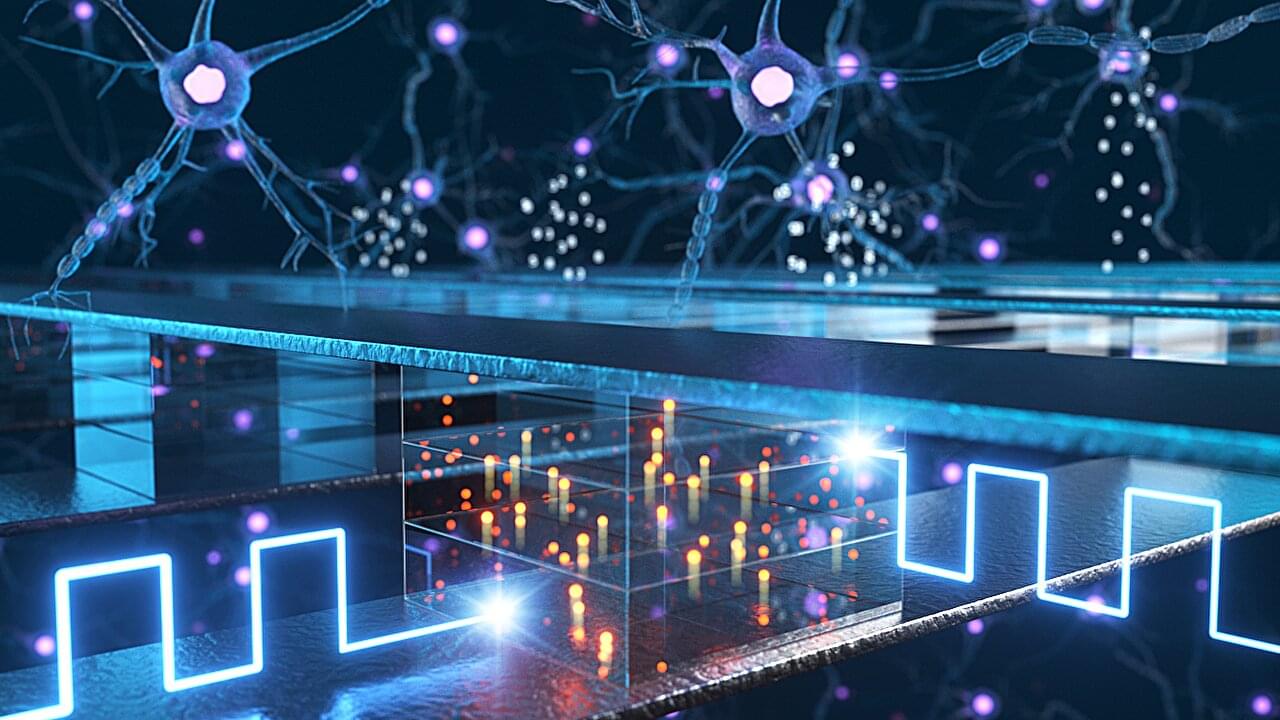

As artificial intelligence (AI) continues to advance, researchers at POSTECH (Pohang University of Science and Technology) have identified a breakthrough that could make AI technologies faster and more efficient.

Professor Seyoung Kim and Dr. Hyunjeong Kwak from the Departments of Materials Science & Engineering and Semiconductor Engineering at POSTECH, in collaboration with Dr. Oki Gunawan from the IBM T.J. Watson Research Center, have become the first to uncover the hidden operating mechanisms of Electrochemical Random-Access Memory (ECRAM), a promising next-generation technology for AI. Their study is published in the journal Nature Communications.

As AI technologies advance, data processing demands have exponentially increased. Current computing systems, however, separate data storage (memory) from data processing (processors), resulting in significant time and energy consumption due to data transfers between these units. To address this issue, researchers developed the concept of in-memory computing.

In this second episode of the AI Bros podcast, Bruce and John go completely unscripted and just let the conversation flow wherever it goes.

They talk about everything from Sam Altman’s controversial TED interview to John’s Animated Action Figure that came to life and broke out of its packaging. It’s interesting, fun and informative.

Subscribe, Like, Follow, and Share the AI Bros on your favorite social media platforms. The AI Bros Podcast is where artificial intelligence meets real talk.

Both Bruce and John share their hot takes, perspective and journey with AI as they learn it and use it themselves in their own day to day workflow.

The AI Bros podcast is a weekly series and part of the Neural News Network and the (A)bsolutely (I)ncredible podcast channel. John Lawson III is the CEO of ColderICE Media and is highly recognized in e-commerce and AI.

John is an Amazon #1 best-selling author, IBM Futurist, and an eBay Influencer.

He’s celebrated as one of the Top 100 Small Business Influencers in America, and crowned “Savviest in Social Media” by StartUp Nation. You can connect with John directly on his website at www.johnlawson.com. John is an internationally recognized keynote speaker, a “Commerce Evangelist” and an absolute wealth of knowledge on all things e-retail and online marketing strategy.

John is a pioneer in the online retail vertical space, and founder of The E-commerce Group, a global community of e-commerce vendors, and online marketers.

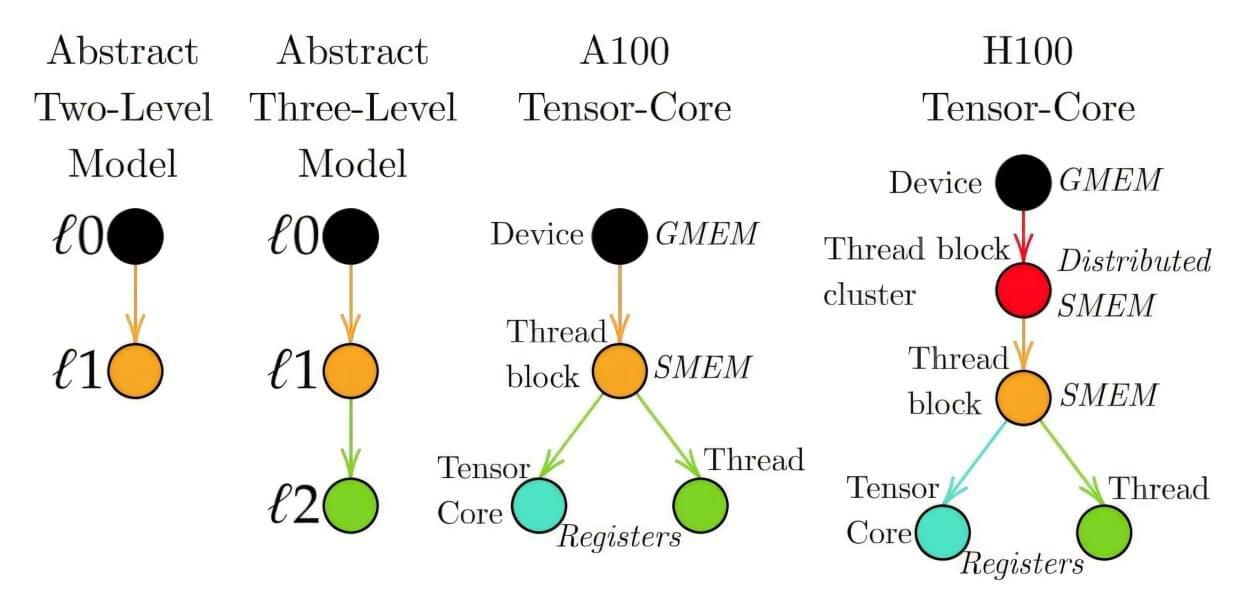

Coordinating complicated interactive systems, whether it’s the different modes of transportation in a city or the various components that must work together to make an effective and efficient robot, is an increasingly important subject for software designers to tackle. Now, researchers at MIT have developed an entirely new way of approaching these complex problems, using simple diagrams as a tool to reveal better approaches to software optimization in deep-learning models.

They say the new method makes addressing these complex tasks so simple that it can be reduced to a drawing that would fit on the back of a napkin.

The new approach is described in the journal Transactions on Machine Learning Research, in a paper by incoming doctoral student Vincent Abbott and Professor Gioele Zardini of MIT’s Laboratory for Information and Decision Systems (LIDS).

A new brain-inspired AI model called TopoLM learns language by organizing neurons into clusters, just like the human brain. Developed by researchers at EPFL, this topographic language model shows clear patterns for verbs, nouns, and syntax using a simple spatial rule that mimics real cortical maps. TopoLM not only matches real brain scans but also opens new possibilities in AI interpretability, neuromorphic hardware, and language processing.
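To make the "simple spatial rule" a little more concrete, here is a minimal sketch of one plausible smoothness penalty. It is an assumption for illustration, not the EPFL team's code, and the summary above does not specify the exact form TopoLM uses. The function names, the four-unit layout, and the "verb-like" and "noun-like" response patterns are all hypothetical. The idea is that units placed close together on a grid should respond similarly, so the penalty drops when response similarity tracks spatial closeness.

```python
import math
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def spatial_smoothness_loss(positions, responses):
    """Hypothetical smoothness penalty in the range [0, 1].

    positions: one (row, col) grid coordinate per unit.
    responses: one list of activations per unit, over the same set of inputs.
    The value is low when nearby units respond alike and high when they do not.
    """
    similarities, closeness = [], []
    for i, j in combinations(range(len(positions)), 2):
        similarities.append(pearson(responses[i], responses[j]))
        # Inverse distance: 0.5 for adjacent units, smaller for distant ones.
        closeness.append(1.0 / (1.0 + math.dist(positions[i], positions[j])))
    return 0.5 * (1.0 - pearson(similarities, closeness))


# Four hypothetical units laid out in a row on the grid.
positions = [(0, 0), (0, 1), (0, 2), (0, 3)]
verb_like = [1.0, 0.0, 1.0, 0.0]  # assumed response pattern over four inputs
noun_like = [0.0, 1.0, 0.0, 1.0]

clustered = [verb_like, verb_like, noun_like, noun_like]  # similar units sit together
scattered = [verb_like, noun_like, verb_like, noun_like]  # similar units are interleaved

loss_clustered = spatial_smoothness_loss(positions, clustered)
loss_scattered = spatial_smoothness_loss(positions, scattered)
print(loss_clustered < loss_scattered)  # prints True
```

In a real model, a term like this would presumably be added to the usual language-modeling objective so that training pushes similar units toward neighboring grid positions. How the two terms are weighted is not stated in the summary.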

Join our free AI content course here 👉 https://www.skool.com/ai-content-acce…

Get the best AI news without the noise 👉 https://airevolutionx.beehiiv.com/

🔍 What’s Inside:

• A brain-inspired AI model called TopoLM that learns language by building its own cortical map.

• Neurons are arranged on a 2D grid where nearby units behave alike, mimicking how the human brain clusters meaning.

• A simple spatial smoothness rule lets TopoLM self-organize concepts like verbs and nouns into distinct brain-like regions.

🎥 What You’ll See:

• How TopoLM mirrors patterns seen in fMRI brain scans during language tasks.

• A comparison with regular transformers, showing how TopoLM brings structure and interpretability to AI.

• Real test results proving that TopoLM reacts to syntax, meaning, and sentence structure just like a biological brain.

📊 Why It Matters:

This new system bridges neuroscience and machine learning, offering a powerful step toward AI that thinks like us. It unlocks better interpretability, opens paths for neuromorphic hardware, and reveals how one simple principle might explain how the brain learns across all domains.

DISCLAIMER: This video covers topographic neural modeling, biologically-aligned AI systems, and the future of brain-inspired computing, highlighting how spatial structure could reshape how machines learn language and meaning.

#AI #neuroscience #brainAI

📊 Why It Matters: