The company also said it is opening up its server platform to other companies to build semi-custom AI infrastructure.

Coding is fast; it always has been. What actually takes time is formulating the design of an application and laying down the logic structures that underpin it. Then there’s the time needed to test and debug the software as it moves towards its final state of live production.

The rise of AI-powered coding agents has promised to speed up coding, so the conversation should now focus on what areas of the software application development lifecycle these new automations are actually being brought to bear upon.

The consensus appears to gravitate towards applying coding assistance to the lower-level services needed to manage applications, rather than to any more cerebral, upper-level ability to create apps themselves. Although this statement is in danger of being obsolete before the end of the current decade, it appears to be where we are right now.

IN A NUTSHELL 🌕 Interlune, a Seattle-based startup, plans to extract helium-3 from the moon, aiming to revolutionize clean energy and quantum computing. 🚀 The company has developed a prototype excavator capable of digging up to ten feet into lunar soil, refining helium-3 directly on the moon for efficiency. 🔋 Helium-3 offers potential for nuclear

MIT didn’t name the student in its statement Friday, but it did name the paper. That paper, by Aidan Toner-Rodgers, was covered by The Wall Street Journal and other media outlets.

In a press release, MIT said it “has no confidence in the provenance, reliability or validity of the data and has no confidence in the veracity of the research contained in the paper.”

The university said the author of the paper is no longer at MIT.

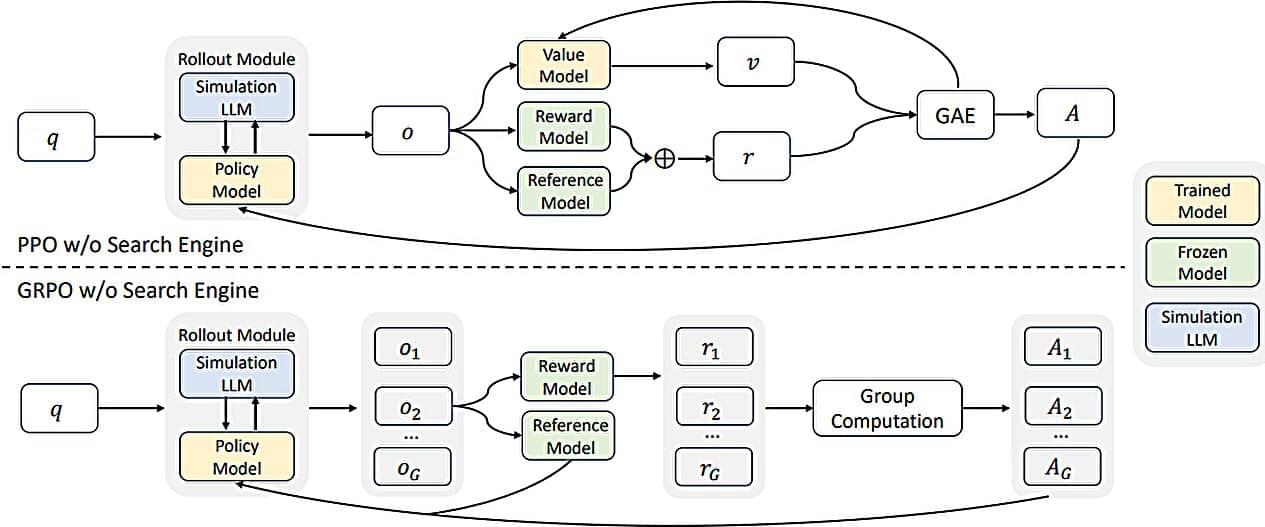

A team of AI researchers at Alibaba Group’s Tongyi Lab has debuted a new approach to training LLMs, one that costs much less than those currently in use. Their paper is posted on the arXiv preprint server.

As LLMs such as ChatGPT have become mainstream, the resources and associated costs of running them have skyrocketed, forcing AI makers to look for ways to get the same or better results using other techniques. To this end, the team at the Tongyi Lab has found a way to train LLMs that uses far fewer resources.

The idea behind ZeroSearch is to stop using API calls to search engines to amass search results for training an LLM. Instead, the method uses simulated, AI-generated documents to mimic the output of traditional search engines such as Google.
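To make the idea concrete, here is a minimal Python sketch of swapping a search API for a simulated one. It is an illustration only, not the paper's implementation: `Document`, `SearchBackend`, `SimulatedSearch`, `build_training_context` and `fake_llm` are all hypothetical names, and the text generator is a stub standing in for whatever model would produce the simulated documents.

```python
from dataclasses import dataclass
from typing import Callable, List, Protocol


@dataclass
class Document:
    title: str
    snippet: str


class SearchBackend(Protocol):
    """Anything that can return k documents for a query (hypothetical interface)."""

    def search(self, query: str, k: int) -> List[Document]: ...


class SimulatedSearch:
    """Stand-in for a search engine: documents come from a text generator."""

    def __init__(self, generate: Callable[[str], str]):
        # `generate` is an assumption: any function mapping a prompt to text,
        # for example a call into a locally hosted LLM.
        self.generate = generate

    def search(self, query: str, k: int = 3) -> List[Document]:
        docs = []
        for i in range(k):
            prompt = f"Write search result {i + 1} for the query: {query}"
            docs.append(Document(title=f"Result {i + 1}", snippet=self.generate(prompt)))
        return docs


def build_training_context(backend: SearchBackend, query: str, k: int = 3) -> str:
    """Format retrieved documents the way a training loop might consume them."""
    docs = backend.search(query, k)
    return "\n".join(f"[{d.title}] {d.snippet}" for d in docs)


def fake_llm(prompt: str) -> str:
    # Stub generator so the sketch runs without any model or network access.
    return f"(generated text for: {prompt})"


if __name__ == "__main__":
    print(build_training_context(SimulatedSearch(fake_llm), "helium-3 fusion", k=2))
```

Because the training code depends only on the `SearchBackend` interface, a real search-engine client and the simulated one would be interchangeable, and the simulated one makes no paid API calls.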

Is Gemini 2.5 Pro the AI breakthrough that will redefine machine intelligence? Google’s latest innovation promises to solve one of AI’s biggest hurdles: true reasoning. Unlike chatbots that regurgitate data, Gemini 2.5 Pro mimics human-like logic, connecting concepts, spotting flaws, and making decisions with unprecedented depth. This isn’t an upgrade—it’s a revolution in how machines think.

What makes Gemini 2.5 Pro unique? Built on a hybrid neural-symbolic architecture, it merges brute-force data processing with structured reasoning frameworks. Early tests show it outperforms GPT-4 and Claude 3 in complex tasks like legal analysis, medical diagnostics, and ethical dilemma navigation. We’ll break down its secret sauce: adaptive learning loops, context-aware problem-solving, and self-correcting logic that learns from mistakes in real time.

How will this impact you? Developers can build AI that understands instead of just parroting, businesses can automate high-stakes decisions, and educators might finally have a tool to teach critical thinking. But there’s a catch: Gemini 2.5 Pro’s …

❤️ Check out Lambda here and sign up for their GPU Cloud: https://lambda.ai/papers.

Guide for using DeepSeek on Lambda:

https://docs.lambdalabs.com/education/large-language-models/…dium=video.

📝 AlphaEvolve: https://deepmind.google/discover/blog/alphaevolve-a-gemini-p…lgorithms/

📝 My genetic algorithm for the Mona Lisa: https://users.cg.tuwien.ac.at/zsolnai/gfx/mona_lisa_parallel_genetic_algorithm/

📝 My paper on simulations that look almost like reality is available for free here:

https://rdcu.be/cWPfD

Or here is the original Nature Physics link with clickable citations:

https://www.nature.com/articles/s41567-022-01788-5

🙏 We would like to thank our generous Patreon supporters who make Two Minute Papers possible.

Deepnight’s Algorithm-intensified image enhancement for NIGHT VISION

Instead of using expensive image-intensification tubes, this startup is using ordinary low-light sensors coupled with special computer algorithms to produce night vision. This could bring night vision to the general public. At present, even a generation 2 monocular costs around $2000, while a generation 3 device costs around $3500. The new system has the added advantage of being in color instead of monochrome. Hopefully, this will pan out and change the situation for astronomy enthusiasts worldwide.

Lucas Young, CEO of Deepnight, showcases how their AI technology transforms a standard camera into an affordable and effective night vision device in extremely dark environments.