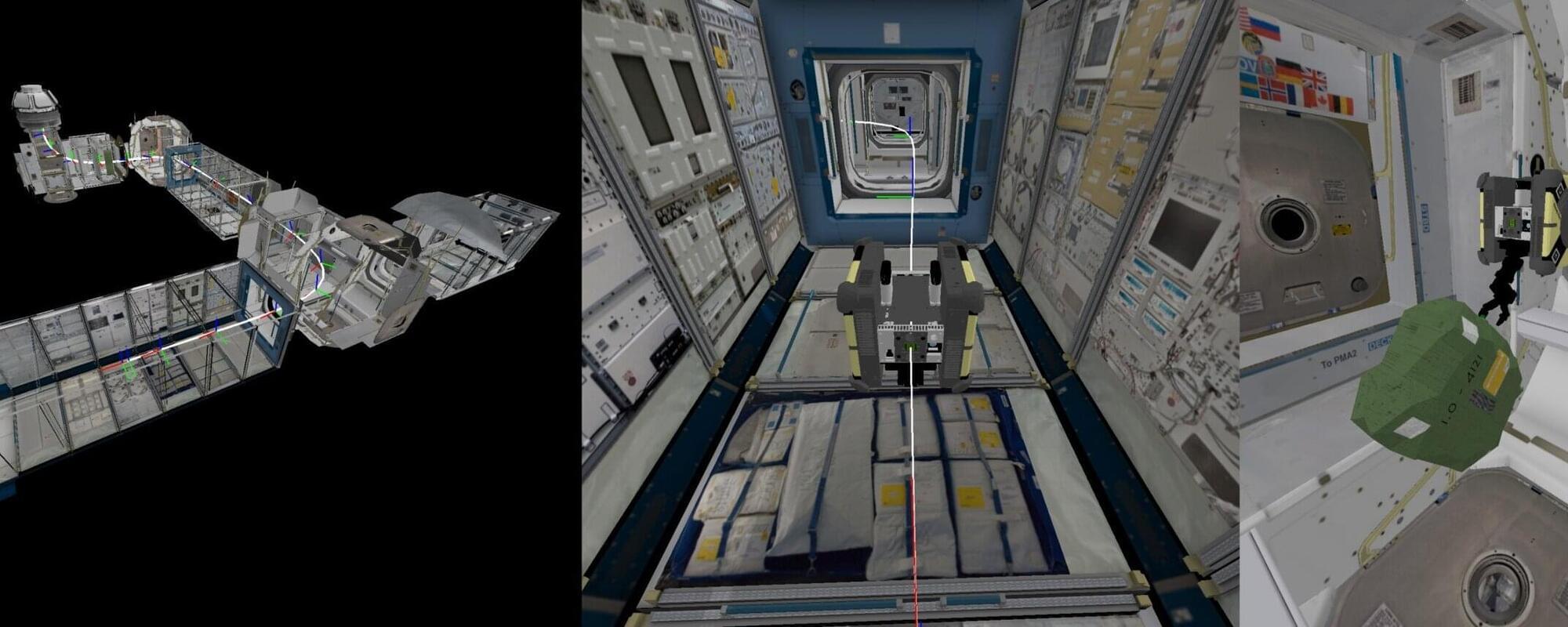

Astrobee is a free-flying robotic system developed by NASA, made up of three distinct cube-shaped robots. The system was originally designed to assist astronauts aboard the International Space Station (ISS) by automating some of their routine manual tasks.

Astrobee could be highly valuable to astronauts by helping them complete day-to-day operations more efficiently, but its object manipulation capabilities are still limited. In particular, past experiments suggest that the robot struggles to handle deformable items, such as cargo bags similar to those it might be asked to pick up on the ISS.

Researchers at Stanford University, the University of Cambridge and NASA Ames Research Center recently developed Pyastrobee, a simulation environment and control stack for training Astrobee in Python, with a particular emphasis on manipulating and transporting cargo.