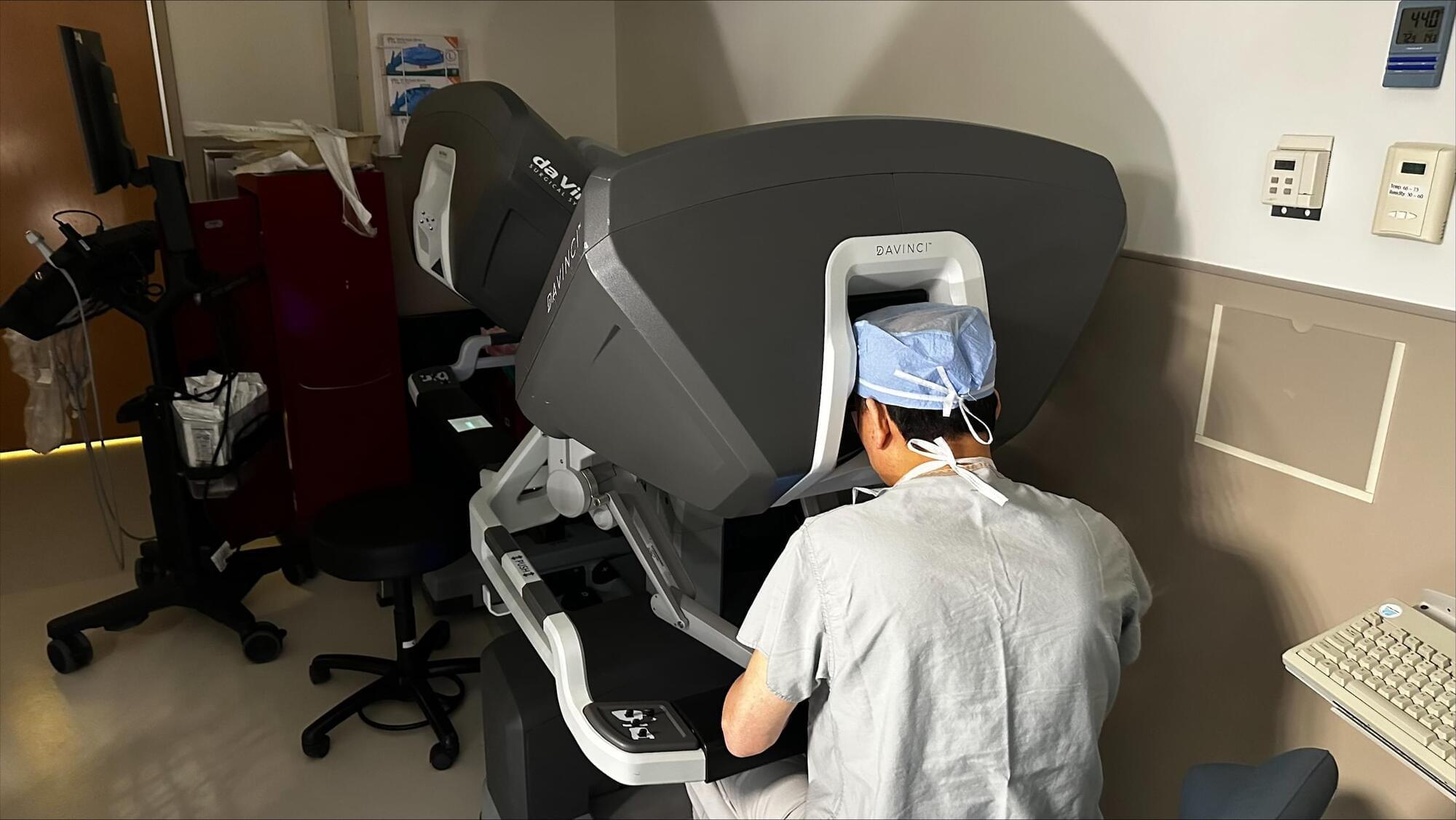

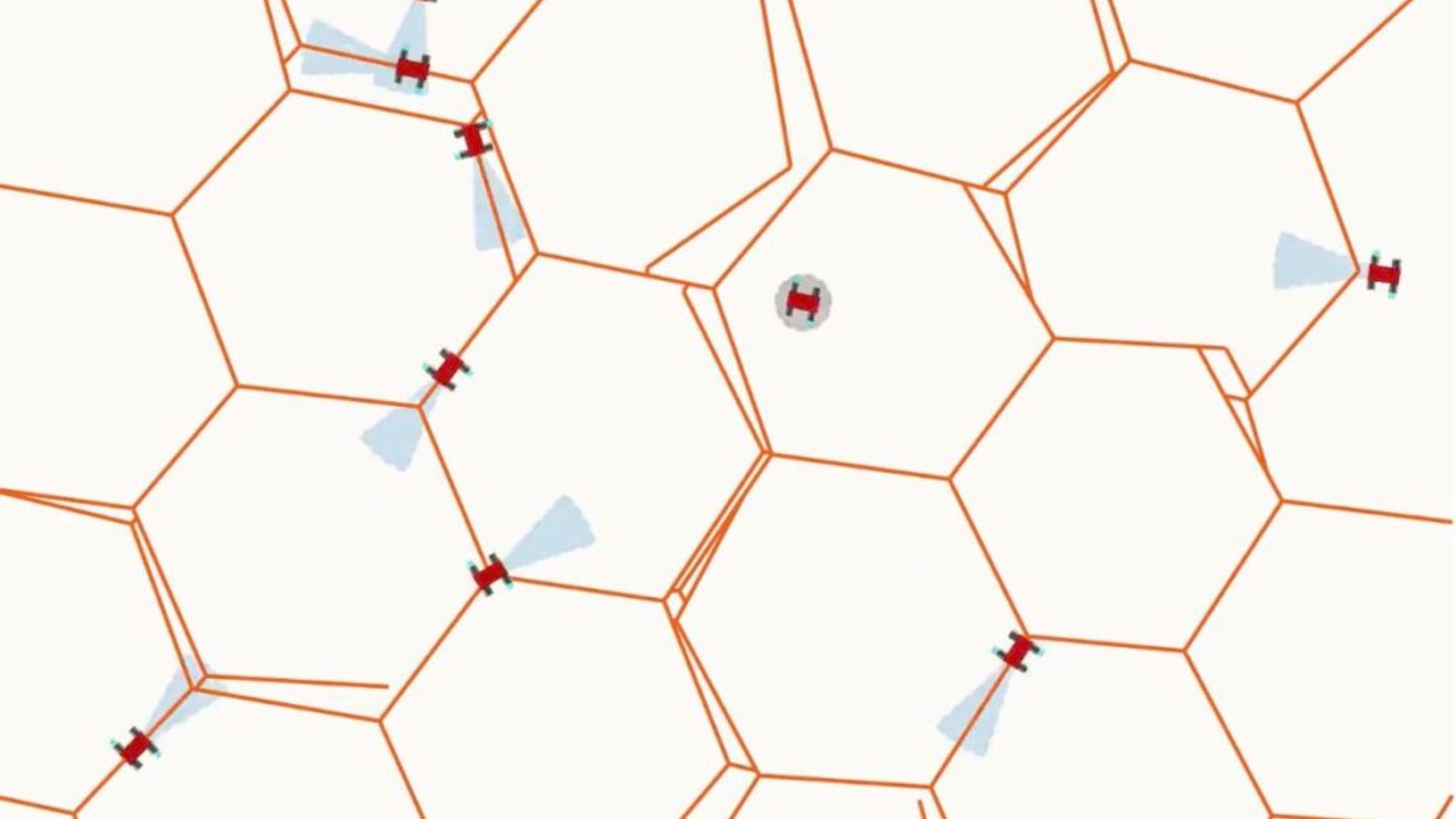

Recent advances in robotics have enabled the automation of various real-world tasks, ranging from manufacturing and packaging goods in industrial settings to the precise execution of minimally invasive surgical procedures. Robots could also help inspect infrastructure and environments that are hazardous or difficult for humans to access, such as tunnels, dams, pipelines, railways and power plants.

Despite the promise of robots for safely assessing real-world environments, most inspections are still carried out by humans. In recent years, some computer scientists have been trying to develop computational models that can plan the trajectories robots should follow when inspecting specific environments and ensure that the robots execute the actions needed to complete their missions.

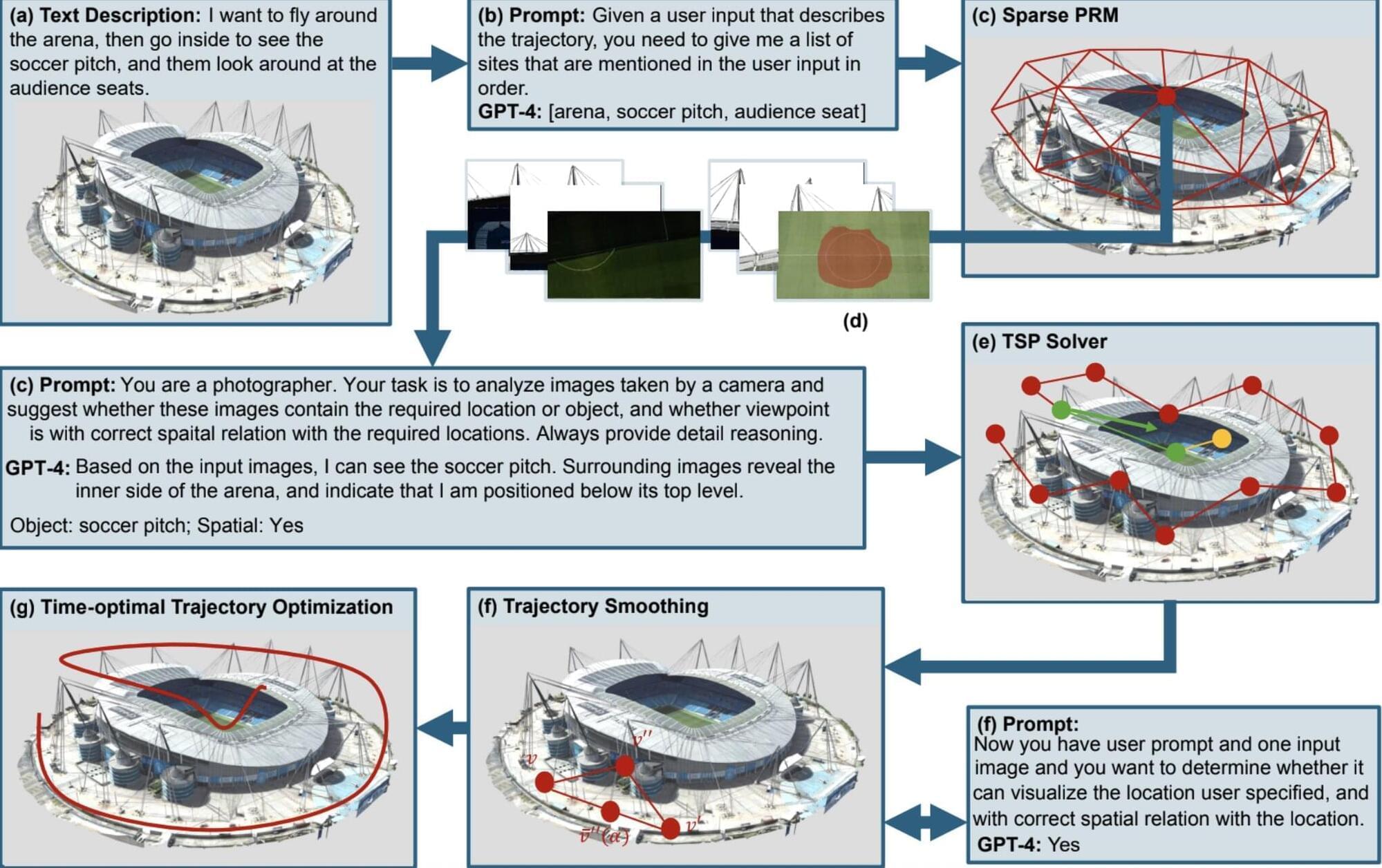

Researchers at Purdue University and LightSpeed Studios recently introduced a new training-free computational technique for generating inspection plans from written descriptions, which could guide the movements of robots as they inspect specific environments. Their proposed approach, outlined in a paper published on the arXiv preprint server, relies on vision-language models (VLMs), which can process both images and written text.