Facebook’s new report attempts to convey how effective its AI is at flagging bad content and fake accounts.

New book calls Google, Facebook, Amazon, and six more tech giants “the new gods of A.I.” who are “short-changing our futures to reap immediate financial gain.”

A call-to-arms about the broken nature of artificial intelligence, and the powerful corporations that are turning the human-machine relationship on its head.

We like to think that we are in control of the future of “artificial” intelligence. The reality, though, is that we—the everyday people whose data powers AI—aren’t actually in control of anything. When, for example, we speak with Alexa, we contribute that data to a system we can’t see and have no input into—one largely free from regulation or oversight. The big nine corporations—Amazon, Google, Facebook, Tencent, Baidu, Alibaba, Microsoft, IBM and Apple—are the new gods of AI and are short-changing our futures to reap immediate financial gain.

In this book, Amy Webb reveals the pervasive, invisible ways in which the foundations of AI (the people working on the system, their motivations, the technology itself) are broken. Within our lifetimes, AI will, by design, begin to behave unpredictably, thinking and acting in ways that defy human logic. The big nine corporations may be inadvertently building and enabling vast arrays of intelligent systems that don’t share our motivations, desires, or hopes for the future of humanity.

The European Commission recommends using an assessment list when developing or deploying AI, but the guidelines aren’t meant to be — or interfere with — policy or regulation. Instead, they offer a loose framework. This summer, the Commission will work with stakeholders to identify areas where additional guidance might be necessary and figure out how to best implement and verify its recommendations. In early 2020, the expert group will incorporate feedback from the pilot phase. As we develop the potential to build things like autonomous weapons and fake news-generating algorithms, it’s likely more governments will take a stand on the ethical concerns AI brings to the table.

The EU wants AI that’s fair and accountable, respects human autonomy and prevents harm.

- Human agency and oversight: AI should not trample on human autonomy. People should not be manipulated or coerced by AI systems, and humans should be able to intervene or oversee every decision that the software makes.
- Technical robustness and safety: AI should be secure and accurate. It shouldn’t be easily compromised by external attacks (such as adversarial examples), and it should be reasonably reliable.
- Privacy and data governance: Personal data collected by AI systems should be secure and private. It shouldn’t be accessible to just anyone, and it shouldn’t be easily stolen.
- Transparency: Data and algorithms used to create an AI system should be accessible, and the decisions made by the software should be “understood and traced by human beings.” In other words, operators should be able to explain the decisions their AI systems make.
- Diversity, non-discrimination, and fairness: Services provided by AI should be available to all, regardless of age, gender, race, or other characteristics. Similarly, systems should not be biased along these lines.
- Environmental and societal well-being: AI systems should be sustainable (i.e., they should be ecologically responsible) and “enhance positive social change.”
- Accountability: AI systems should be auditable and covered by existing protections for corporate whistleblowers. Negative impacts of systems should be acknowledged and reported in advance.

AI technologies should be accountable, explainable, and unbiased, says EU.

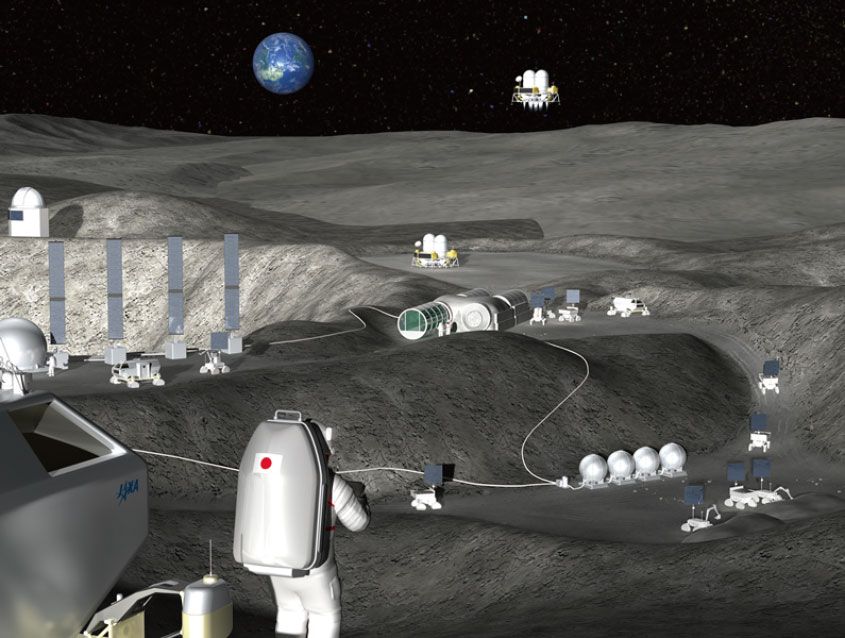

Japan’s space agency wants to create a moon base with the help of robots that can work autonomously, with little human supervision.

The project, which has racked up three years of research so far, is a collaboration between the Japan Aerospace Exploration Agency (JAXA), the construction company Kajima Corp., and three Japanese universities: Shibaura Institute of Technology, The University of Electro-Communications and Kyoto University.

Recently, the collaborators conducted an automated-construction experiment at the Kajima Seisho Experiment Site in Odawara, central Japan.

Andrew Yang gives a dynamite interview on automation, UBI, and economic solutions to transitioning to the future.

Andrew Yang, award-winning entrepreneur, Democratic presidential candidate, and author of “The War on Normal People,” joins Ben to discuss the Industrial Revolution, Universal Basic Income, climate change, circumcision, and much more.

Check out more of Ben’s content on:

YouTube: https://www.youtube.com/c/benshapiro

Twitter: @benshapiro

Instagram: @officialbenshapiro

Check Andrew Yang out on:

Twitter: @ANDREWYANG

Facebook: https://www.facebook.com/andrewyang2020/

Website: https://www.yang2020.com