Leonardo the lanky robot can sort of hover-walk on two legs—and that could land it on Mars one day.

Register now! We’re calling on the world’s greatest minds to achieve a new milestone for the future of artificial intelligence and autonomous flight.

Robots are already capable of doing many things for us. As this video shows, mobile robots can be used to automate tasks in the lab. They use tags and picture recognition to handle items. The robots are also capable of accessing lab devices.


The development of utility fog just took a significant step forward. The projected size after miniaturization is in the millimeter range, and advances in nanofabrication should eventually bring it below a millimeter.

Absolutely no moving parts, either.

A drone powered by electrohydrodynamic thrust is the smallest flying robot ever made.

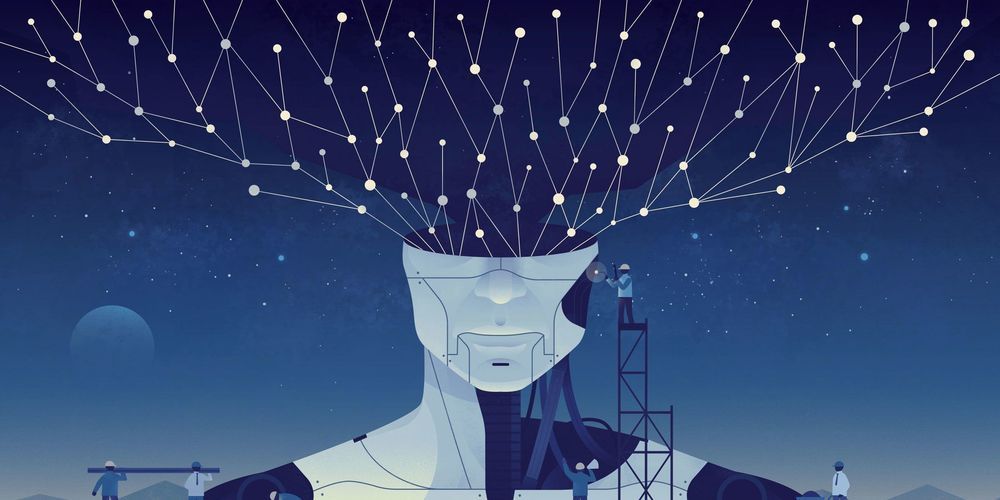

Over the last decade, military theorists and authors in the fields of future warfare and strategy have examined in detail the potential impacts of an ongoing revolution in information technology. There has been a particular focus on the impacts of automation and artificial intelligence on military and national security affairs. This attention on silicon-based disruption has nonetheless meant that sufficient attention may not have been paid to other equally profound technological developments. One of those developments is the field of biotechnology.

There have been some breathtaking achievements in the biological realm over the last decade. Human genome sequencing has progressed from a multi-year, multi-billion-dollar undertaking to a much cheaper and quicker process, far outstripping Moore’s Law. Just as those concerned with national security affairs must monitor disruptive silicon-based technologies, leaders must also be literate in the key biological issues likely to impact the future security of nations. One of the most significant matters in biotechnology is that of human augmentation and whether nations should augment military personnel to stay at the leading edge of capability.

Military institutions will continue to seek competitive advantage over potential adversaries. While this is most obvious in the procurement of advanced platforms, human biotechnological advancement is gaining more attention. As a 2017 CSIS report on the Third Offset found, most new technological advances will provide only a temporary advantage, assessed to be no more than five years. In this environment, some military institutions may view the newer field of human augmentation as a more significant source of a future competitive edge.

AI may quickly point out a corrupt official, but it is not very good at explaining the process it has gone through to reach such a conclusion.

“We just use the machine’s result as reference,” Zhang Yi, an official in a province that’s still using the software, told the SCMP. “We need to check and verify its validity. The machine cannot pick up the phone and call the person with a problem. The final decision is always made by humans.”

Algorithmic Justice

Though corruption in China is reportedly widespread, officials are probably right to be suspicious of a black box algorithm that can bring down the hammer of justice without explaining its reasoning.

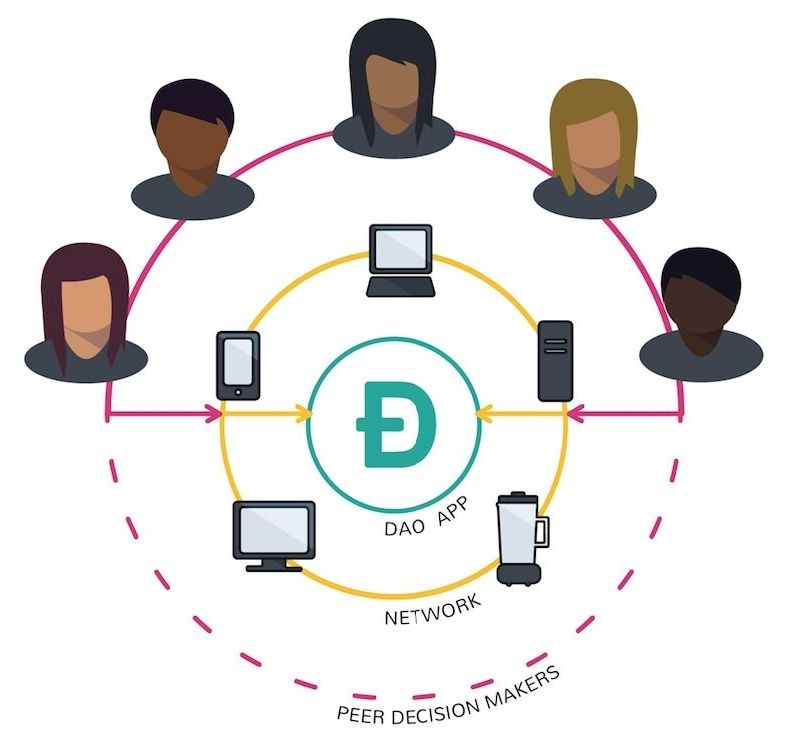

Imagine this: a driverless car cruises around in search of passengers.

After dropping someone off, the car uses its profits for a trip to a charging station. Except for its initial programming, the car doesn’t need outside help to determine how to carry out its mission.

That’s one “thought experiment” brought to you by former bitcoin contributor Mike Hearn in which he describes how bitcoin could help power leaderless organizations 30-or-so years into the future.