Demis Hassabis says that AI systems as smart as humans are almost here and that we will need to radically change how we think and behave.

What if accessing knowledge, which used to require hours of analyzing handwritten scrolls or books, could be done in mere moments?

Throughout history, the way humans acquire knowledge has undergone several great revolutions. The birth of writing and books transformed learning, allowing ideas to be preserved and shared across generations. Then came the Internet, putting vast amounts of information at the fingertips of billions of people.

Today, we stand at another turning point: the age of AI tools, in which AI does not just give us answers but provides reliable, tailored responses in seconds. We no longer need to gather and evaluate the right information for our problems ourselves. If knowledge is now a tool everyone can hold, the real revolution begins when we use this superpower to solve problems and improve the world.

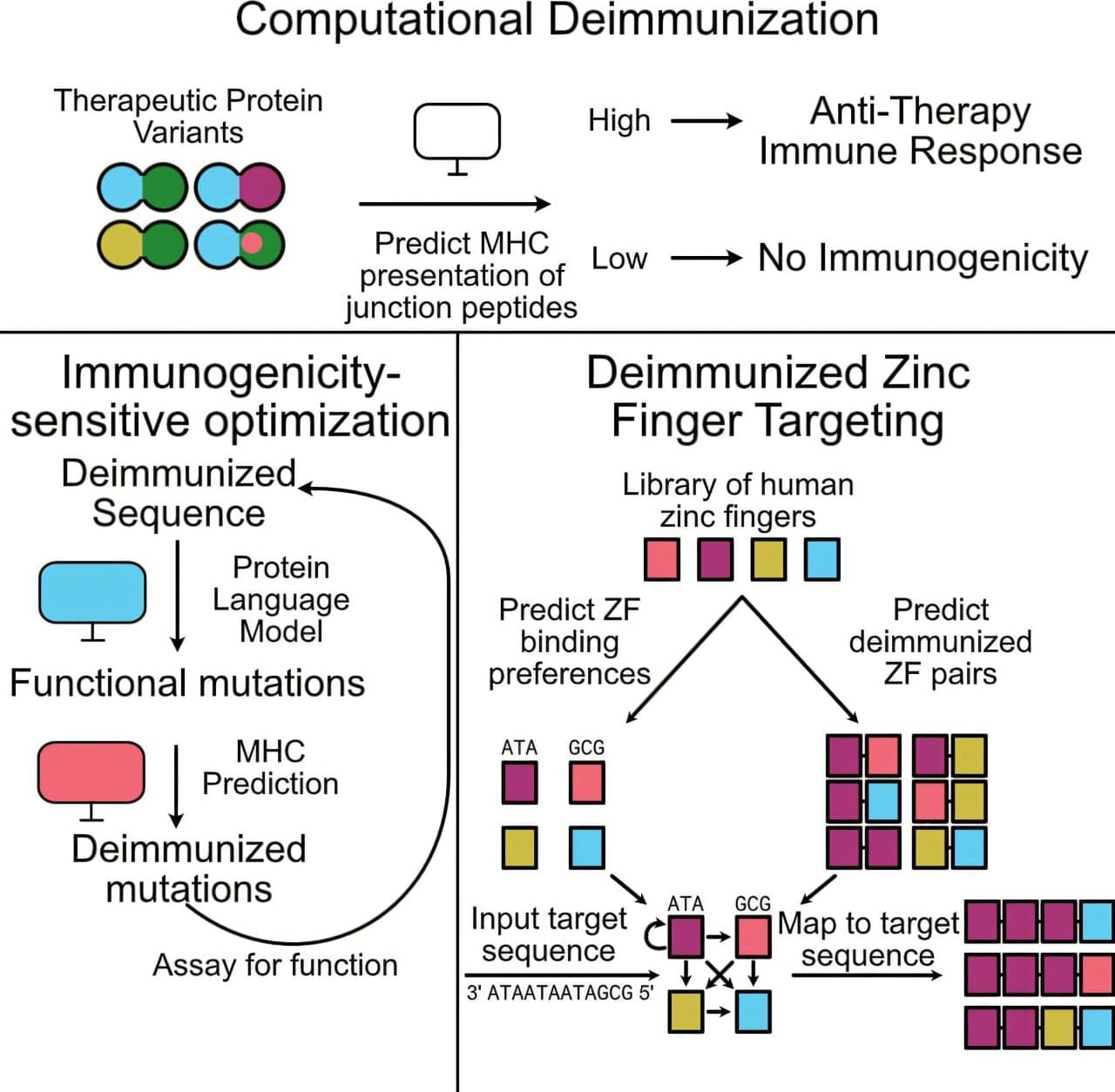

Machine learning models have seeped into the fabric of our lives, from curating playlists to explaining difficult concepts in seconds. Beyond convenience, state-of-the-art algorithms are finding their way into modern medicine as a potentially powerful tool. In one such advance, published in Cell Systems, Stanford researchers are using machine learning to improve the efficacy and safety of targeted cell and gene therapies, potentially by using our own proteins.

Most human diseases are caused by malfunctioning proteins in our bodies, acting either systemically or locally. Naturally, introducing a therapeutic protein to compensate for the malfunctioning one would be ideal.

Although nearly all therapeutic antibodies are either fully human or engineered to look human, a similar approach has yet to make its way to other therapeutic proteins, especially those that operate inside cells, such as the proteins used in CAR-T and CRISPR-based therapies. These proteins still run the risk of triggering immune responses. To solve this problem, researchers at the Gao Lab have now turned to machine learning models.

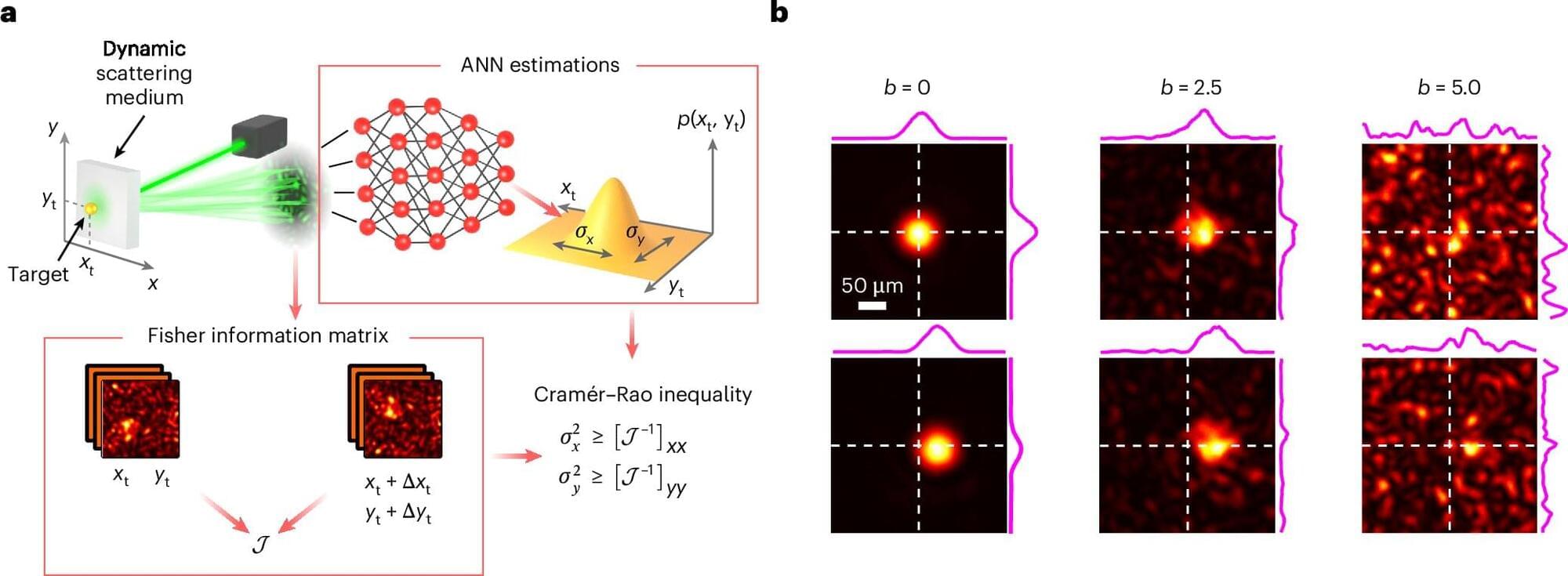

No image is infinitely sharp. For 150 years, it has been known that no matter how ingeniously you build a microscope or a camera, there are always fundamental resolution limits that cannot be exceeded, even in principle. The position of a particle can never be measured with infinite precision; a certain amount of blurring is unavoidable. This limit does not stem from technical shortcomings but from the physical properties of light and of the transmission of information itself.

Researchers at TU Wien (Vienna), the University of Glasgow and the University of Grenoble therefore posed the question: What is the absolute limit of precision achievable with optical methods? And how can this limit be approached as closely as possible?

The international team succeeded both in determining a lower bound on the theoretically achievable precision and in developing neural-network-based AI algorithms that, after appropriate training, come very close to this limit. The approach is now set to be applied in imaging procedures, such as those used in medicine. The study is published in the journal Nature Photonics.

Yoshua Bengio, an artificial intelligence pioneer, is creating a new nonprofit research organization to promote an alternative approach to developing cutting-edge AI systems, with the aim of mitigating the technology’s potential risks. The nonprofit, called LawZero, is set to launch Tuesday with $30 million in backing from one of former Google Chief Executive Officer Eric Schmidt’s philanthropic organizations and Skype co-founder Jaan Tallinn, among others. Bengio will lead a team of more than 1