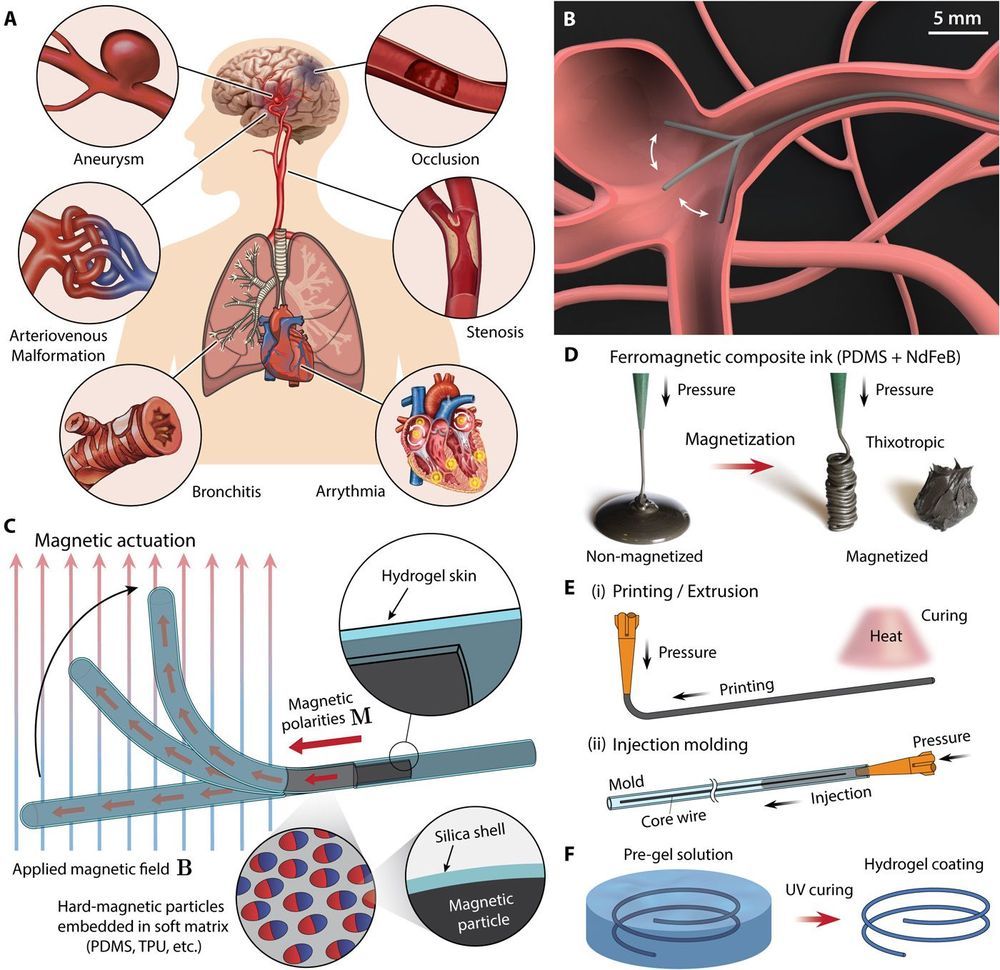

Small-scale soft continuum robots capable of remotely controlled active steering and navigation hold great promise in diverse areas, particularly in medical applications. Existing continuum robots, however, are often limited to millimeter or centimeter scales due to miniaturization challenges inherent in conventional actuation mechanisms, such as pulling mechanical wires, inflating pneumatic or hydraulic chambers, or embedding rigid magnets for manipulation. In addition, the friction experienced by continuum robots during navigation poses another challenge for their applications. Here, we present a submillimeter-scale, self-lubricating soft continuum robot with omnidirectional steering and navigating capabilities based on magnetic actuation, which are enabled by programming ferromagnetic domains in its soft body while growing hydrogel skin on its surface. The robot’s body, composed of a homogeneous continuum of a soft polymer matrix with uniformly dispersed ferromagnetic microparticles, can be miniaturized below a few hundred micrometers in diameter, and the hydrogel skin reduces friction by more than a factor of 10. We demonstrate the capability of navigating through complex and constrained environments, such as a tortuous cerebrovascular phantom with multiple aneurysms. We further demonstrate additional functionalities, such as steerable laser delivery through a functional core incorporated in the robot’s body. Given their compact, self-contained actuation and intuitive manipulation, our ferromagnetic soft continuum robots may open avenues to minimally invasive robotic surgery for previously inaccessible lesions, thereby addressing challenges and unmet needs in healthcare.

Small-scale soft continuum robots capable of navigating through complex and constrained environments hold promise for medical applications (1–3) across the human body (Fig. 1A). Several continuum robot concepts have been commercialized so far, offering a range of therapeutic and diagnostic procedures that are safer for patients owing to their minimally invasive nature (4–6). Surgeons benefit from remotely controlled continuum robots, which allow them to work away from the radiation source required for real-time imaging during operations (5, 6).

Despite these advantages, existing continuum robots are often limited to relatively large scales due to miniaturization challenges inherent in their conventional actuation mechanisms, such as pulling mechanical wires or controlling embedded rigid magnets for manipulation. Tendon-driven continuum robots (7–10) with antagonistic pairs of wires are difficult to scale down to submillimeter diameters because the fabrication process grows more complex as the components become smaller (11–13). These miniaturization challenges have rendered even the most advanced commercialized continuum robots, mostly designed for cardiac and peripheral interventions (14), unsuited for neurosurgical applications, where the vascular structures are considerably smaller and more tortuous. Magnetically steerable continuum robots (15–19) have also remained at large scales because of the finite size of the embedded magnets required to generate deflection under applied magnetic fields.