Might our DNA be triggered via AI?

The One Love Machine band are a scrappy crew. They have an affinity for punk rock, and members of the band play the bass, drums and flute. Oh, and they’re all robots. The band is made up of scrap metal animatronics, created from junk salvaged from scrapyards around Berlin. For the band’s creator, Kolja Kugler, it’s all about giving new life to discarded objects.

Storage is just as important aboard the International Space Station as it is on Earth. While the space station is about the size of a football field, the living space inside is much smaller than that. Just as you wouldn’t store garden tools in a house when you could store them in a shed outside, astronauts now have a “housing unit” in which they can store tools for use on the exterior of the space station.

On Dec. 5, 2019, a protective storage unit for robotic tools called Robotic Tool Stowage (RiTS) was among the items launched to station as part of SpaceX’s 19th commercial resupply services mission for NASA. As part of a spacewalk on July 21, NASA astronauts Robert Behnken and Chris Cassidy installed the “robot hotel,” where the tools are stored, on the station’s Mobile Base System (MBS), where it will remain a permanent fixture. The MBS is a moveable platform that provides power to the external robots. This special location allows RiTS to traverse around the station alongside a robot that will use the tools it stores.

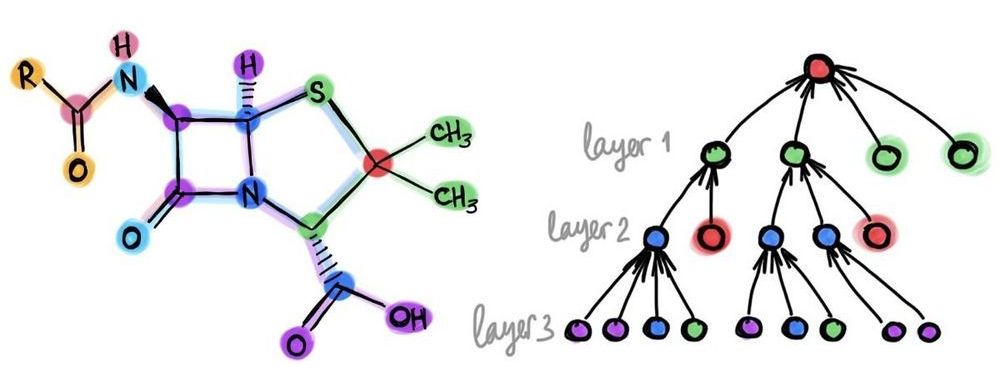

Proteins are essential to the life of cells, carrying out complex tasks and catalyzing chemical reactions. Scientists and engineers have long sought to harness this power by designing artificial proteins that can perform new tasks, such as treating disease, capturing carbon, or harvesting energy, but many of the processes designed to create such proteins are slow and complex, with a high failure rate.

In a breakthrough that could have implications across the healthcare, agriculture, and energy sectors, a team led by researchers in the Pritzker School of Molecular Engineering (PME) at the University of Chicago has developed an artificial intelligence-led process that uses big data to design new proteins.

By developing machine-learning models that can review protein information culled from genome databases, the researchers found relatively simple design rules for building artificial proteins. When the team constructed these artificial proteins in the lab, they found that they performed chemistries so well that they rivaled those found in nature.

Here is my research dissertation for the M.Sc. in Neural Computation, CCCN, University of Stirling. A variety of net architectures were trialed for specific use in Enochian Chess software, and the commercial version is now in its 3rd edition over 26 years on. The first section consists of a literature review of artificial neural nets and their application to a variety of classic boardgames. Although quite old now, there haven’t been many, if any, other papers on nets and divination games. This paper has proved very prescient! Today neural nets are commonly used by game developers. The race amongst supercomputer developers to ‘predict’ stock markets, or weather systems, or winners of horse races, is fierce. MVT unconstrained hardware may offer some synergies when used in conjunction with nets or traditional AI.

My interest in active divination (rather than passive divination) goes back a bit now. Enochian Chess software is one example, but Tsakli can also be used in divination. Passive forms of fortune-telling rely on pure chance without any skill or judgement asked of the questioner. Astrology is a good example. You cannot change or ameliorate your date and time of birth or affect the course of the stars. It is essentially fatalistic. What is the point of trying to discern information about which you can do absolutely nothing? Brainstorming and the jumping-up-and-down excitement generated by unexpected game episodes, plus the many ideas generated by the move-by-move conversation and thrown up by wide consideration of the particular divination question, can be of real psychomorphological value in helping plan your future life moves.

As the world’s largest social network, Facebook provides endless hours of discussion, entertainment, news, videos and just good times for its more than 2.6 billion users.

It’s also ripe for malicious activity, bot assaults, scams and hate speech.

In an effort to combat bad behavior, Facebook has deployed an army of bots in a simulated version of the social network to study their behavior and track how they devolve into antisocial activity.

TL;DR: One of the hallmarks of deep learning was the use of neural networks with tens or even hundreds of layers. In stark contrast, most of the architectures used in graph deep learning are shallow with just a handful of layers. In this post, I raise a heretical question: does depth in graph neural network architectures bring any advantage?

This year, deep learning on graphs was crowned among the hottest topics in machine learning. Yet, those used to imagining convolutional neural networks with tens or even hundreds of layers when they hear “deep” would be disappointed to see the majority of works on graph “deep” learning using just a few layers at most. Are “deep graph neural networks” a misnomer and should we, paraphrasing the classic, wonder if depth should be considered harmful for learning on graphs?

Training deep graph neural networks is hard. Besides the standard plights observed in deep neural architectures such as vanishing gradients in back-propagation and overfitting due to a large number of parameters, there are a few problems specific to graphs. One of them is over-smoothing, the phenomenon of the node features tending to converge to the same vector and become nearly indistinguishable as the result of applying multiple graph convolutional layers [1]. This behaviour was first observed in GCN models [2,3], which act similarly to low-pass filters. Another phenomenon is a bottleneck, resulting in “over-squashing” of information from exponentially many neighbours into fixed-size vectors [4].
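The over-smoothing effect described above can be illustrated with a toy sketch. This is not the GCN formulation from the cited papers: it assumes plain mean aggregation over each node and its neighbours, with no learned weights and no nonlinearity, and the names `mean_aggregate`, `feature_spread`, `spread_by_depth`, `ADJ` and `X` are hypothetical. It only shows how repeated neighbourhood averaging pulls node features towards the same vector.

```python
def mean_aggregate(adjacency, features):
    """One propagation step: each node averages itself and its neighbours.

    Assumption: simple mean aggregation stands in for a graph convolution.
    """
    n = len(adjacency)
    dim = len(features[0])
    updated = []
    for i in range(n):
        neighbourhood = [i] + [j for j in range(n) if j != i and adjacency[i][j]]
        updated.append([
            sum(features[j][d] for j in neighbourhood) / len(neighbourhood)
            for d in range(dim)
        ])
    return updated


def feature_spread(features):
    """Largest gap between any two nodes, taken over all feature dimensions."""
    dim = len(features[0])
    return max(
        max(row[d] for row in features) - min(row[d] for row in features)
        for d in range(dim)
    )


def spread_by_depth(adjacency, features, num_layers):
    """Record the feature spread before and after each propagation layer."""
    spreads = [feature_spread(features)]
    for _ in range(num_layers):
        features = mean_aggregate(adjacency, features)
        spreads.append(feature_spread(features))
    return spreads


# Hypothetical toy input: a path graph 0-1-2-3 with 2-dimensional node features.
ADJ = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
X = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [1.0, 1.0]]

if __name__ == "__main__":
    for depth, spread in enumerate(spread_by_depth(ADJ, X, 32)):
        if depth % 8 == 0:
            print(f"layers={depth:2d}  spread={spread:.6f}")
```

On a connected graph the spread never increases from one layer to the next and shrinks towards zero, which is the sense in which stacked layers of this kind leave nodes nearly indistinguishable.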

Yann LeCun, the chief AI scientist at Facebook, helped develop the deep learning algorithms that power many artificial intelligence systems today. In conversation with head of TED Chris Anderson, LeCun discusses his current research into self-supervised machine learning, how he’s trying to build machines that learn with common sense (like humans) and his hopes for the next conceptual breakthrough in AI.

This talk was presented at an official TED conference, and was featured by our editors on the home page.

The U.S. intelligence community (IC) on Thursday rolled out an “ethics guide” and framework for how intelligence agencies can responsibly develop and use artificial intelligence (AI) technologies.

Among the key ethical requirements were shoring up security, respecting human dignity through complying with existing civil rights and privacy laws, rooting out bias to ensure AI use is “objective and equitable,” and ensuring human judgement is incorporated into AI development and use.

The IC wrote in the framework, which digs into the details of the ethics guide, that it was intended to ensure that use of AI technologies matches “the Intelligence Community’s unique mission purposes, authorities, and responsibilities for collecting and using data and AI outputs.”

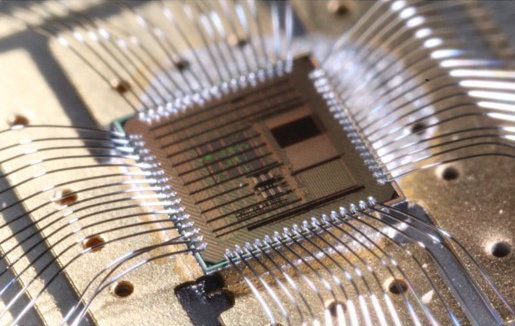

Many computational properties are maximized when the dynamics of a network are at a “critical point”, a state where systems can quickly change their overall characteristics in fundamental ways, transitioning e.g. between order and chaos or stability and instability. Therefore, the critical state is widely assumed to be optimal for any computation in recurrent neural networks, which are used in many AI applications.
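The idea of a transition between order and chaos can be sketched with a toy recurrent network. This is an illustration only, not the model from the study: it assumes tanh units, Gaussian random weights scaled by 1/sqrt(n), and a hypothetical `gain` parameter that scales the recurrent input. For large random networks of this kind the transition is expected near a gain of 1. The sketch measures whether a tiny difference between two starting states dies out or is amplified.

```python
import math
import random


def make_weights(n, rng):
    """Random recurrent weights, Gaussian with standard deviation 1/sqrt(n)."""
    scale = 1.0 / math.sqrt(n)
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(n)] for _ in range(n)]


def step(weights, state, gain):
    """One update of the toy network: x <- tanh(gain * W x)."""
    return [
        math.tanh(gain * sum(w * s for w, s in zip(row, state)))
        for row in weights
    ]


def perturbation_growth(gain, n=100, steps=60, eps=1e-8, seed=0):
    """Ratio of the final to the initial distance between two nearby trajectories.

    A ratio below 1 means the perturbation faded (ordered regime);
    a ratio above 1 means it was amplified (chaotic regime).
    """
    rng = random.Random(seed)
    weights = make_weights(n, rng)
    a = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    b = list(a)
    b[0] += eps  # perturb a single unit by a tiny amount
    for _ in range(steps):
        a = step(weights, a, gain)
        b = step(weights, b, gain)
    distance = math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    return distance / eps


if __name__ == "__main__":
    for gain in (0.5, 3.0):
        print(f"gain={gain}: growth ratio {perturbation_growth(gain):.3e}")
```

The two gains are chosen well away from the transition so the contrast is clear; values close to 1 would sit near the critical regime the paragraph describes, where behaviour depends more strongly on network size and the particular random weights.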