Air Force, U.S. Special Operations Command fund year-long effort to train a neural net to rank credibility and sort news from misinformation.

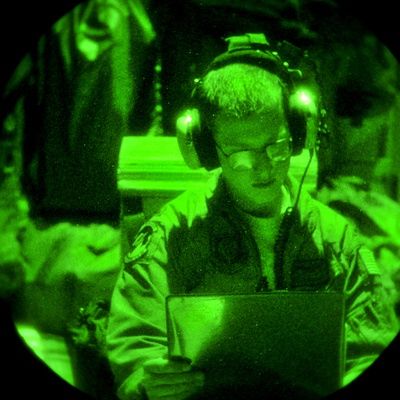

The U.S. military, like many others around the world, is investing significant time and resources into expanding its electronic warfare capabilities across the board, for offensive and defensive purposes, in the air, at sea, on land, and even in space. Now, advances in machine learning and artificial intelligence mean that electronic warfare systems, no matter what their specific function, may all benefit from a new underlying concept known as advanced “Cognitive Electronic Warfare,” or Cognitive EW. The main goal is to be able to increasingly automate and otherwise speed up critical processes, from analyzing electronic intelligence to developing new electronic warfare measures and countermeasures, potentially in real-time and across large swathes of networked platforms.

The holy grail of this concept is electronic warfare systems that can spot new or otherwise unexpected threats and immediately begin adapting to them.

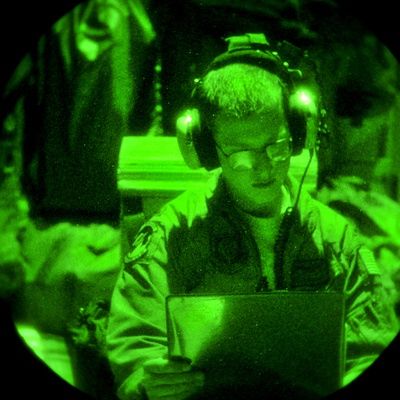

SkyWatch Space Applications, the Canadian startup whose EarthCache platform helps software developers embed geospatial data and imagery in applications, announced a partnership Oct. 5 with Picterra, a Swiss startup with a self-service platform to help customers autonomously extract information from aerial and satellite imagery.

“One of the things that has been very difficult to achieve is this ability to easily and affordably access satellite data in a way that is fast but also in a way in which you can derive the insights you need for your particular business,” James Slifierz, SkyWatch CEO, told SpaceNews. “What if you can merge both the accessibility of this data with an ease of developing and applying intelligence to the data so that any company in the world could have the tools to derive insights?”

SkyWatch’s EarthCache platform is designed to ease access to aerial and satellite imagery. However, SkyWatch doesn’t provide data analysis.

Picterra is not a data provider. Instead, the company helps customers build their own machine-learning algorithms to detect things like building footprints in imagery customers either upload or find in Picterra’s library of open-source imagery.

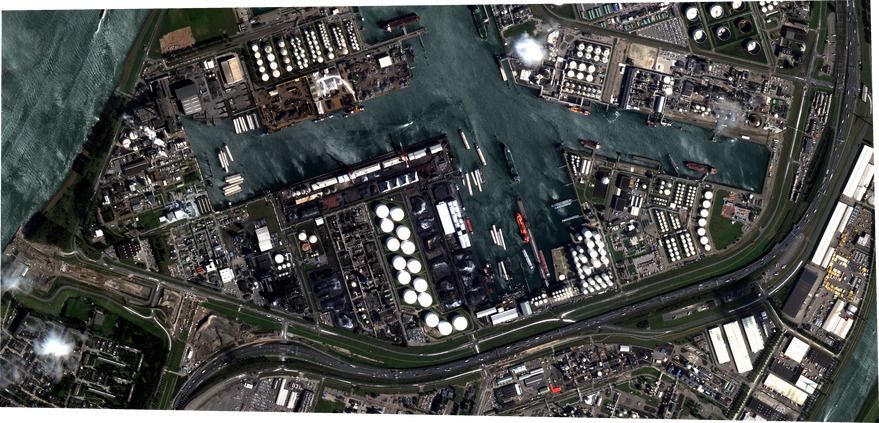

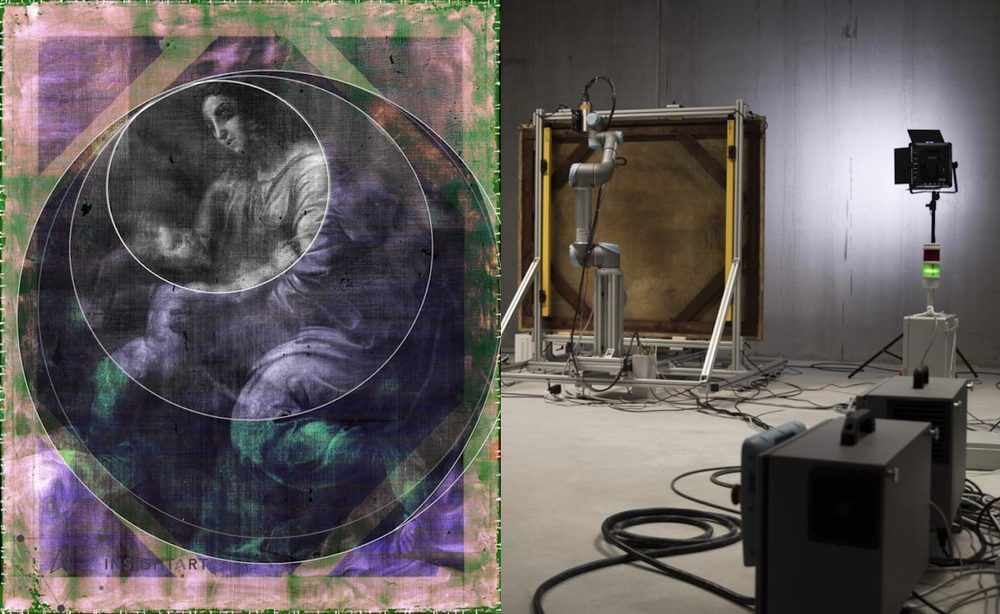

CERN’s Timepix particle detectors, developed by the Medipix2 Collaboration, help unravel the secret of a long-lost painting by the great Renaissance master, Raphael. 500 years ago, the Italian painter Raphael passed away, leaving behind him many works of art, paintings, frescoes, and engravings.

Like that of his contemporaries Michelangelo and Leonardo da Vinci, Raphael’s work delighted imitators and tempted counterfeiters, who bequeathed us many copies, pastiches, and forgeries of the great master of the Renaissance.

For a long time, it was thought that The Madonna and Child, a painting on canvas from a private collection, was not created directly by the master himself. Once the property of popes and later part of Napoleon’s war treasure, the painting changed hands several times before arriving in Prague during the 1930s. Due to its history and numerous inconclusive examinations, its authenticity was questioned for a long time. It has now been attributed to Raphael by a group of independent experts. One of the technologies that provided them with key information was a robotic X-ray scanner using CERN-designed chips.

An international team of Johannes Kepler University researchers is developing robots made from soft materials. A new article in the journal Communications Materials demonstrates how these kinds of soft machines respond to weak magnetic fields and move very quickly, even catching a fast-moving fly that has landed on one of them.

When we imagine a moving machine, such as a robot, we picture something largely made out of hard materials, says Martin Kaltenbrunner. He and his team of researchers at the JKU’s Department of Soft Matter Physics and the LIT Soft Materials Lab have been working to build a soft materials-based system. The underlying idea is to create conditions that support close robot-human interaction in the future, without a solid machine physically harming humans.

Robots of the future will be dexterous, capable of deep nuanced conversations, and fully autonomous. They are going to be indispensable to humans in the future.

The first artificial neural networks weren’t abstractions inside a computer, but actual physical systems made of whirring motors and big bundles of wire. Here I’ll describe how you can build one for yourself using SnapCircuits, a kids’ electronics kit. I’ll also muse about how to build a network that works optically using a webcam. And I’ll recount what I learned talking to the artist Ralf Baecker, who built a network using strings, levers, and lead weights.

I showed the SnapCircuits network last year to John Hopfield, a Princeton University physicist who pioneered neural networks in the 1980s, and he quickly got absorbed in tweaking the system to see what he could get it to do. I was a visitor at the Institute for Advanced Study and spent hours interviewing Hopfield for my forthcoming book on physics and the mind.

The type of network that Hopfield became famous for is a bit different from the deep networks that power image recognition and other A.I. systems today. It still consists of basic computing units (“neurons”) that are wired together, so that each responds to what the others are doing. But the neurons are not arrayed into layers: There are no dedicated input, output, or intermediate stages. Instead the network is a big tangle of signals that can loop back on themselves, forming a highly dynamic system.
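To make the idea of a layerless, fully interconnected network concrete, here is a minimal software sketch of a Hopfield-style network. It is not the SnapCircuits build described in this article; it assumes the common textbook formulation (Hebbian weights, neurons with states +1 or -1, updated one at a time), and the function names `train` and `recall` are hypothetical, chosen only for illustration.

```python
def train(patterns):
    """Build a symmetric weight matrix from +1/-1 patterns (Hebbian rule)."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # a neuron is not wired to itself
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w, state, max_sweeps=10):
    """Update neurons one at a time until the state stops changing."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            # Each neuron responds to the weighted signals of all the others.
            field = sum(w[i][j] * state[j] for j in range(n))
            new = 1 if field >= 0 else -1
            if new != state[i]:
                state[i] = new
                changed = True
        if not changed:  # the network has settled into a stable state
            break
    return state


stored = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([stored])

noisy = list(stored)
noisy[0] = -noisy[0]  # corrupt one neuron

print(recall(weights, noisy) == stored)
```

Every neuron here is both input and output: the corrupted pattern is loaded as the starting state, and the network's own feedback pulls it back toward the stored pattern.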

DARPA is the US military research agency responsible for developing cutting-edge technologies for use on the front line. Boasting an annual budget of billions and with some of the world’s smartest minds on its roster, DARPA is responsible for some of the world’s most exciting tech. And it has now emerged the secretive research arm is advancing a brain-machine interface capable of allowing soldiers to telepathically control “active cyber defence systems” and “swarms of unmanned aerial vehicles”.

The US military’s Defense Advanced Research Projects Agency (DARPA) is preparing telepathic technology which some fear is capable of remotely controlling war machines with military minds.

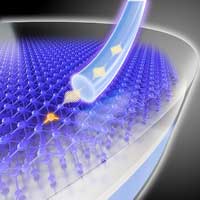

(Nanowerk News) Quantum technology holds great promise: Just a few years from now, quantum computers are expected to revolutionize database searches, AI systems, and computational simulations. Today already, quantum cryptography can guarantee absolutely secure data transfer, albeit with limitations. The greatest possible compatibility with our current silicon-based electronics will be a key advantage. And that is precisely where physicists from the Helmholtz-Zentrum Dresden-Rossendorf (HZDR) and TU Dresden have made remarkable progress: The team has designed a silicon-based light source to generate single photons that propagate well in glass fibers.

Elon Musk confirmed that a new “vector-space bird’s eye view” is coming to Tesla vehicles under the FSD package.

Bird’s eye view, a vision monitoring system that renders a view of a vehicle from the top to help park and navigate tight spaces, has become a popular feature in premium vehicles, and it has even moved downmarket over the last few years.

It is generally made possible by an array of five or six cameras around the vehicle.