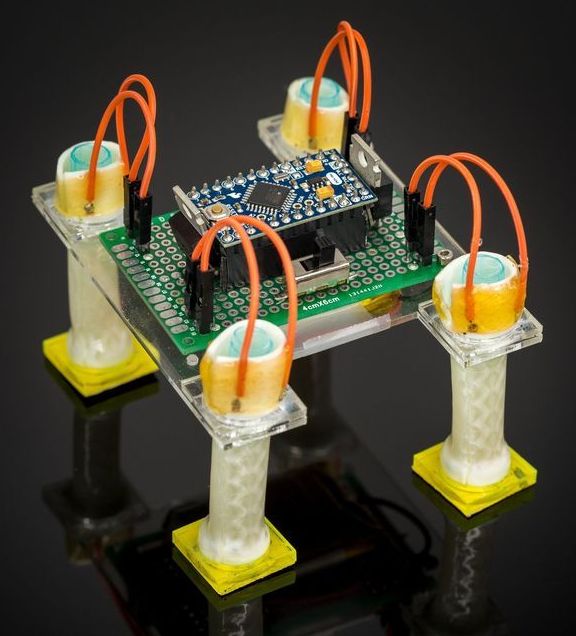

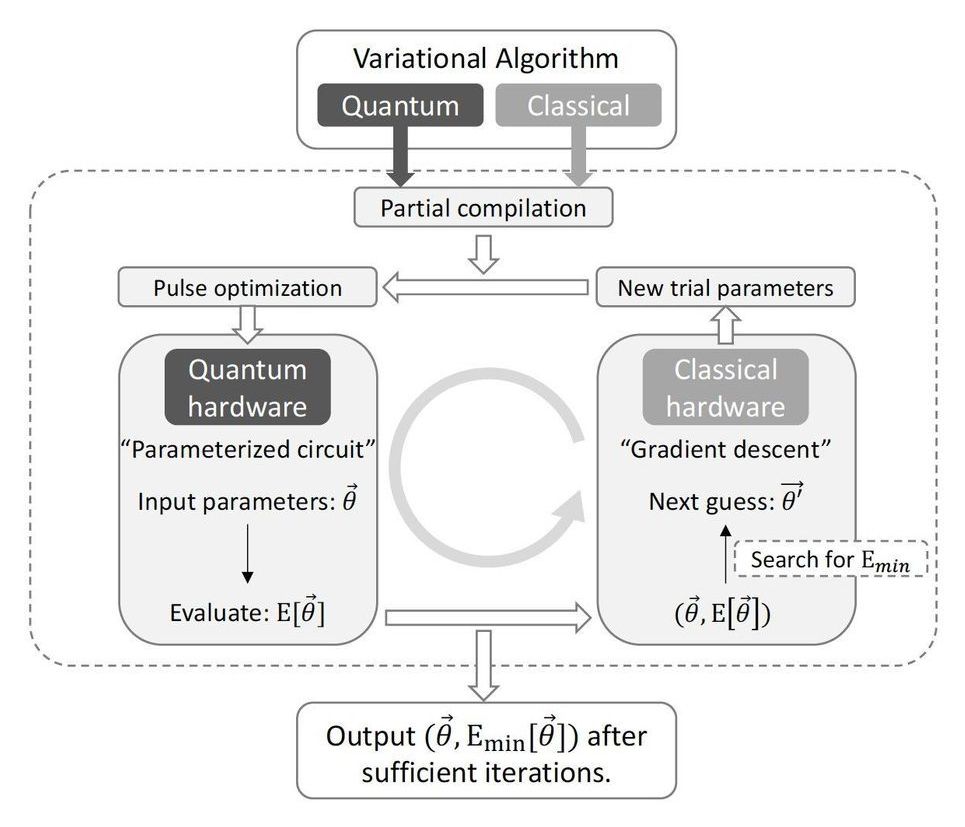

Quantum computers have the potential to someday far outperform our traditional machines, thanks to their ability to store data on “qubits” that can exist in two states at once. That sounds good in theory, but in practice it’s hard to make materials that can hold such a state and stay stable for long. Now, researchers from Johns Hopkins University have found a superconducting material that naturally stays in two states at once, which could be an important step towards quantum computers.

Our current computers are built on the binary system. That means they store and process information as binary “bits” – a series of ones and zeroes. This system has worked well for us for the better part of a century, but the general rate of computing progress has started to slow down in recent years.

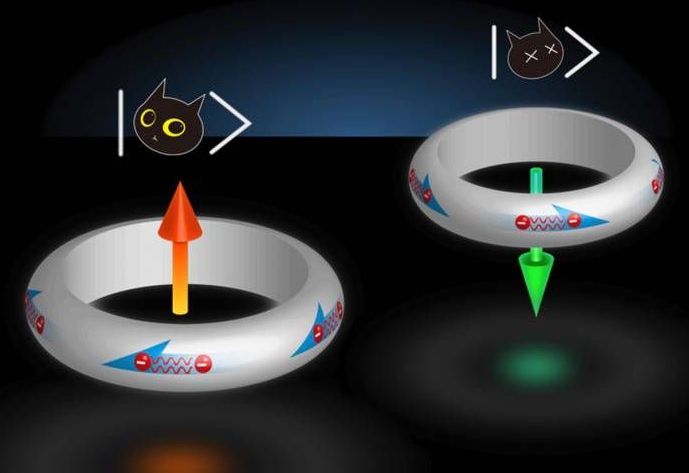

Quantum computers could turn that trend on its head. The key is the use of qubits, which can store data as a one, a zero, or a combination of both at the same time (known as a superposition) – much like Schrödinger’s famous thought experiment with the cat that’s both alive and dead until someone looks. Using that extra power, quantum computers would be able to outperform traditional ones at tasks involving huge amounts of data, such as AI, weather forecasting, and drug development.
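
For readers who want a more concrete picture of how a qubit differs from a bit, here is a minimal Python sketch. It is a toy classical simulation, not how a real quantum computer is programmed: a single qubit is described by two numbers (amplitudes), and measuring it returns 0 or 1 with probabilities given by the squared magnitudes of those amplitudes. The function name `measure` and the fixed random seed are illustrative assumptions, not part of the research described here.

```python
import math
import random


def measure(amp0: complex, amp1: complex, rng: random.Random) -> int:
    """Simulate one measurement of a single qubit.

    amp0 and amp1 are the amplitudes of the 0 and 1 states (hypothetical
    helper for illustration). The result is 0 with probability |amp0|^2
    and 1 with probability |amp1|^2.
    """
    p0 = abs(amp0) ** 2
    p1 = abs(amp1) ** 2
    if not math.isclose(p0 + p1, 1.0):
        raise ValueError("amplitudes must be normalized")
    return 0 if rng.random() < p0 else 1


# An equal superposition: the qubit is "both" 0 and 1 until it is measured.
amp = 1 / math.sqrt(2)
rng = random.Random(0)  # fixed seed so the run is repeatable

counts = [0, 0]
for _ in range(1000):
    counts[measure(amp, amp, rng)] += 1

print(counts)  # roughly half zeros and half ones
```

A classical bit corresponds to the special cases `measure(1, 0, rng)` (always 0) and `measure(0, 1, rng)` (always 1); everything in between is what a qubit adds.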