Safety and cost concerns have led Mercedes-maker Daimler to predict revenues from autonomous trucks before self-driving cars become a thing.

There’s reason to think fruits of the collaboration may interest the military. The Pentagon’s cloud strategy lists four tenets for the JEDI contract, among them the improvement of its AI capabilities. This comes amidst its broader push to tap tech-industry AI development, seen as far ahead of the government’s.

Microsoft’s $10 billion Pentagon contract puts the independent artificial-intelligence lab OpenAI in an awkward position.

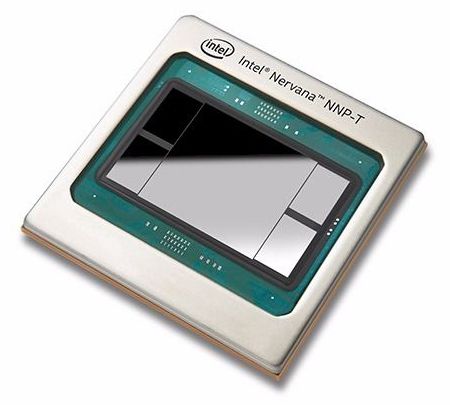

At this year’s Intel AI Summit, the chipmaker demonstrated its first-generation Neural Network Processors (NNP): NNP-T for training and NNP-I for inference. Both product lines are now in production and are being delivered to initial customers, two of which, Facebook and Baidu, showed up at the event to laud the new chippery.

The purpose-built NNP devices represent Intel’s deepest thrust into the AI market thus far, challenging Nvidia, AMD, and an array of startups aimed at customers who are deploying specialized silicon for artificial intelligence. In the case of the NNP products, that customer base is anchored by hyperscale companies – Google, Facebook, Amazon, and so on – whose businesses are now all powered by artificial intelligence.

Naveen Rao, corporate vice president and general manager of the Artificial Intelligence Products Group at Intel, who presented the opening address at the AI Summit, says that the company’s AI solutions are expected to generate more than $3.5 billion in revenue in 2019. Although Rao didn’t break that out into specific product sales, presumably it includes everything that has AI infused in the silicon. Currently, that encompasses nearly the entire Intel processor portfolio, from the Xeon and Core CPUs, to the Altera FPGA products, to the Movidius computer vision chips, and now the NNP-I and NNP-T product lines. (Obviously, that figure can only include the portion of Xeon and Core revenue that is actually driven by AI.)

Reinforcement learning (RL) is a widely used machine-learning technique that entails training AI agents or robots using a system of reward and punishment. So far, researchers in the field of robotics have primarily applied RL techniques in tasks that are completed over relatively short periods of time, such as moving forward or grasping objects.

A team of researchers at Google and Berkeley AI Research has recently developed a new approach that combines RL with learning by imitation, a process called relay policy learning. This approach, introduced in a paper prepublished on arXiv and presented at the Conference on Robot Learning (CoRL) 2019 in Osaka, can be used to train artificial agents to tackle multi-stage and long-horizon tasks, such as object manipulation tasks that span longer periods of time.

“Our research originated from many, mostly unsuccessful, experiments with very long tasks using reinforcement learning (RL),” Abhishek Gupta, one of the researchers who carried out the study, told TechXplore. “Today, RL in robotics is mostly applied in tasks that can be accomplished in a short span of time, such as grasping, pushing objects, walking forward, etc. While these applications have a lot of value, our goal was to apply reinforcement learning to tasks that require multiple sub-objectives and operate on much longer timescales, such as setting a table or cleaning a kitchen.”

Deep Knowledge Group is delighted to have supported and participated in the landmark International Longevity Policy and Governance and AI for Longevity Summits that took place on November 12th at King’s College London, which gathered an unprecedented density and diversity of speakers and panelists at the intersection of Longevity, AI, Policy and Finance. The summits were organized by Longevity International UK and the AI Longevity Consortium at King’s College London, with the strategic support of Deep Knowledge Group, Aging Analytics Agency, Ageing Research at King’s (ARK) and the Biogerontology Research Foundation. Together they managed to attract the interest of major financial corporations, insurance companies, investment banks, Pharma and Tech corporations, and representatives of international governmental bodies, organisations and embassies, as well as leading media, and featured presentations and panel discussions from top executives and directors of Prudential, Barclays Business UK, HSBC, AXA, L&G, Longevity.Capital, Longevity Vision Fund, Juvenescence, the UK Office of AI, Microsoft, NVIDIA, Babylon Health, Huawei Europe, Insilico Medicine, Longevity International UK, the Longevity AI Consortium and others.

November 14, 2019, London, UK: Deep Knowledge Group executives Dmitry Kaminskiy and Eric Kihlstrom spoke at a landmark one-day event held yesterday at King’s College London with the strategic support of Deep Knowledge Group. The event united two Longevity-themed summits under the shared strategic agenda of enabling a paradigm shift from treatment to prevention and from prevention to Precision Health via the synergistic efforts of science, industry, AI, policy and governance, to enable the UK to become an international leader in Healthy Longevity.

This 3D-printed robotic arm could be the most advanced and most realistic yet.

At Google’s hardware event this morning, the company introduced a new voice recorder app for Android devices, which will tap into advances in real-time speech processing, speech recognition and AI to automatically transcribe recordings in real time as the person is speaking. The improvements will allow users to take better advantage of the phone’s voice recording functionality, as it will be able to turn the recordings into text even when there’s no internet connectivity.

This gives services that leverage similar AI advances for voice transcription, such as Otter.ai, Reason8 and Trint, a new competitor.

As Google explained, all the recorder functionality happens directly on the device — meaning you can use the phone while in airplane mode and still have accurate recordings.

MIT researchers have developed a model that recovers valuable data lost from images and video that have been “collapsed” into lower dimensions.

The model could be used to recreate video from motion-blurred images, or from new types of cameras that capture a person’s movement around corners but only as vague one-dimensional lines. While more testing is needed, the researchers think this approach could someday be used to convert 2D medical images into more informative (but more expensive) 3D body scans, which could benefit medical imaging in poorer nations.

“In all these cases, the visual data has one dimension—in time or space—that’s completely lost,” says Guha Balakrishnan, a postdoc in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and first author on a paper describing the model, which is being presented at next week’s International Conference on Computer Vision. “If we recover that lost dimension, it can have a lot of important applications.”