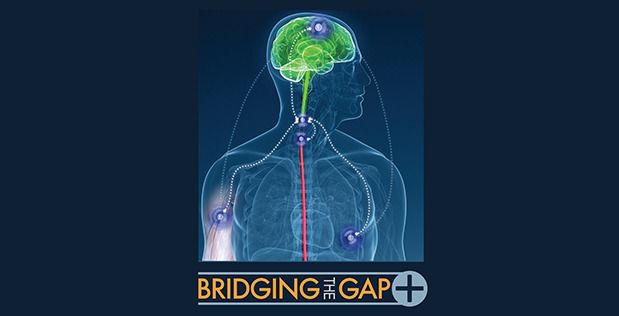

Spinal cord injury (SCI) is of significant concern to the Department of Defense. Of the 337,000 Americans with serious SCIs, approximately 44,000 are veterans, with 11,000 new injuries occurring each year.¹ SCI is a complex condition: the injured often face lifelong paralysis and increased long-term morbidity due to factors such as sepsis and autonomic nervous system dysfunction. While considerable research effort has been devoted to restorative and therapeutic technologies for SCI, significant challenges remain.

DARPA’s Bridging the Gap Plus (BG+) program aims to develop new approaches to treating SCI by integrating injury stabilization, regenerative therapy, and functional restoration. Today, DARPA announced the award of contracts to the University of California-Davis, Johns Hopkins University, and the University of Pittsburgh to advance this crucial work. Multidisciplinary teams at each of these universities are tasked with developing systems of implantable, adaptive devices that aim to reduce the effects of injury during the early phases of SCI and potentially restore function during the later, chronic phase.

“The BG+ program looks to create opportunities to provide novel treatment approaches immediately after injury,” noted Dr. Al Emondi, BG+ program manager. “Systems will consist of active devices performing real-time biomarker monitoring and intervention to stabilize and, where possible, rebuild the neural communications pathways at the site of injury, providing the clinician with previously unavailable diagnostic information for automated or clinician-directed interventions.”