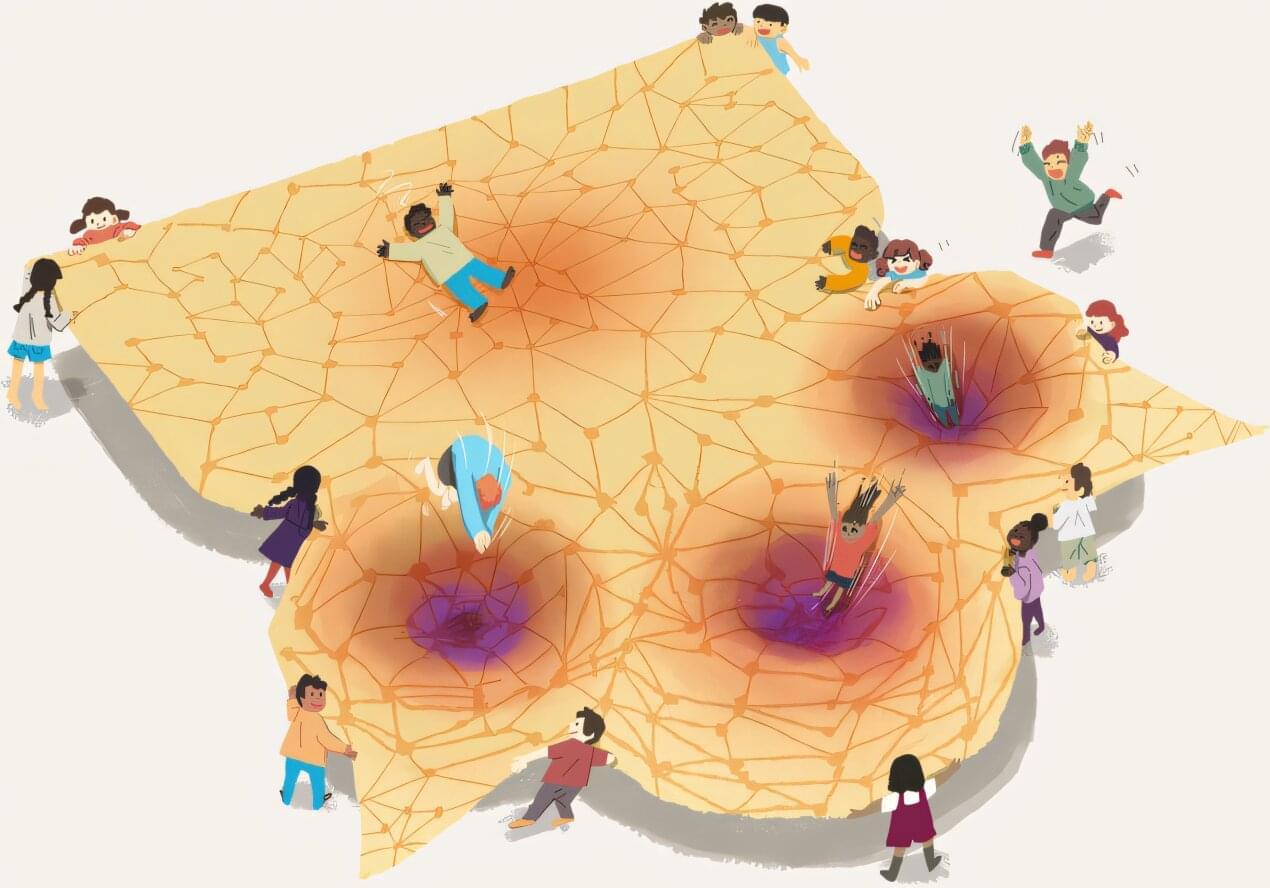

Researchers from the University of Surrey, the University of Oxford and Cognitive Neurotechnology have developed a personalized brain stimulation system, powered by artificial intelligence (AI), that can safely enhance concentration at home. Designed to adapt to individual characteristics, the system could help people improve focus during study, work, or other mentally demanding tasks.

The study, published in npj Digital Medicine, is based on a patented approach that pairs non-invasive brain stimulation with adaptive AI to maximize the stimulation's effect.

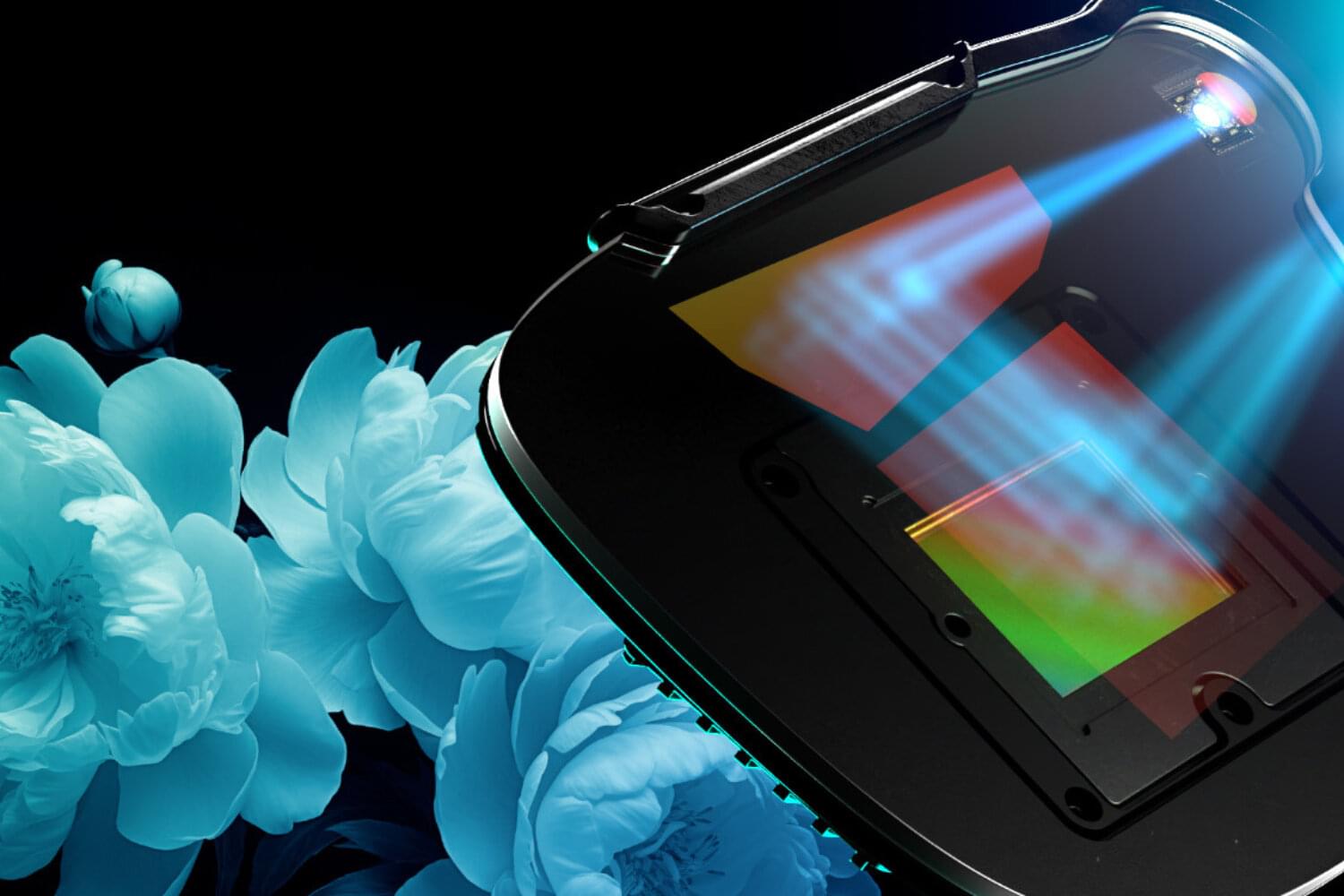

The technology combines transcranial random noise stimulation (tRNS), a gentle and painless form of electrical brain stimulation, with an AI algorithm that learns to personalize stimulation based on individual features, including attention level and head size.