Dr. Valentin Robu, Associate Professor and Academic PI of the project, says: "This work was part of the Network Constraints Early Warning System (NCEWS) project, a collaboration between Heriot-Watt and Scottish Power Energy Networks, part-funded by Innovate UK, the United Kingdom's applied research and innovation agency. The project's results greatly exceeded our expectations, and they illustrate how advanced AI techniques (in this case, deep learning neural networks) can address important practical challenges emerging in modern energy systems."

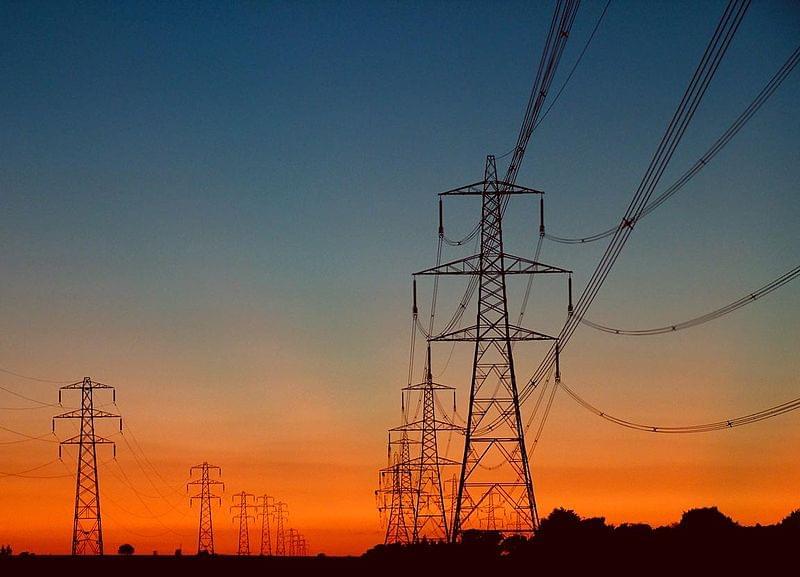

Power networks worldwide face growing challenges. The fast rollout of distributed renewable generation (such as rooftop solar panels or community wind turbines) can introduce considerable unpredictability. The fit-and-forget approach previously used to operate power networks is no longer adequate, and more active management is required. Moreover, new types of demand (such as the rollout of electric vehicle (EV) charging) can also be a source of unpredictability, especially when concentrated in particular areas of the distribution grid.

Network operators are required to keep power and voltage within safe operating limits at all connection points in the network, as out-of-bounds fluctuations can damage expensive network equipment and connected devices. Hence, having good estimates of which areas of the network could be at risk and require intervention (such as reinforcing the network, or installing extra storage to smooth fluctuations) is increasingly a key requirement.

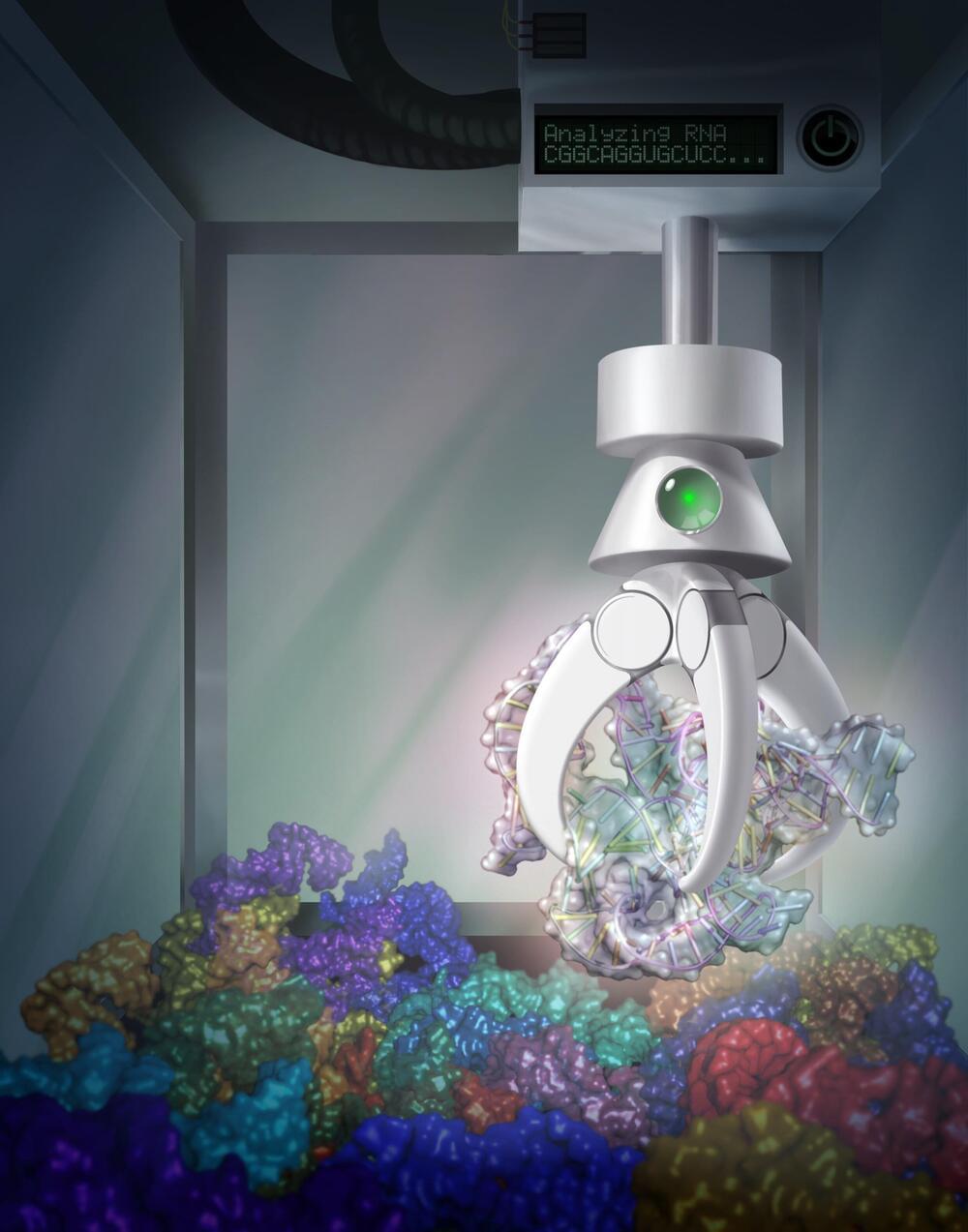

Privacy-sensitive machine learning

Smart meter data analysis holds great promise for identifying at-risk areas in distribution networks. Yet using smart meter data in practice comes with significant constraints. In many countries and regions, the smart meter rollout does not provide full coverage, because installation is voluntary and many customers may decline to have a smart meter installed at home. Moreover, even where the rollout has been successful, privacy restrictions must be taken into account: in practice, regulators considerably constrain which private smart meter data network operators can access.