The global revenue of the pharmaceutical market is 1.2 trillion dollars. With so much capital at stake and technological disruption accelerating, the pharma industry has to embrace new technologies, therapies, and innovations, and put a greater focus on prevention and digital health.

In this video, we dive into five trends that show how big pharma will adapt to these changing times:

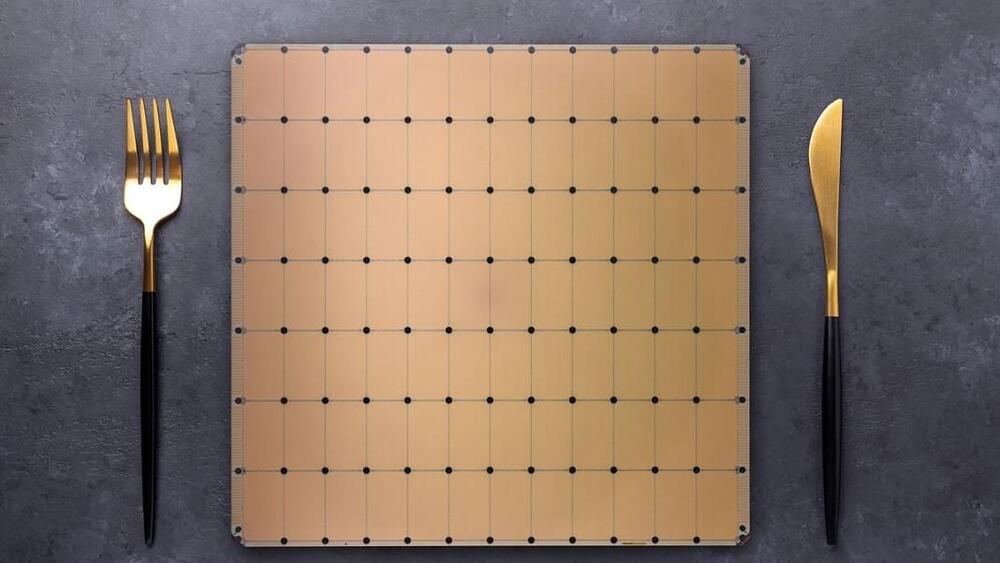

1. Artificial intelligence for drug research and development.

2. Patient design: DIY medicine movements.

3. In silico trials to bypass in vivo clinical testing.

4. New technologies, such as blockchain, in the supply chain.

5. New drug strategies by big pharma companies.

Get access to exclusive content and express your support for The Medical Futurist by joining our Patreon community: https://www.patreon.com/themedicalfuturist.

Read our magazine for further updates and analyses on the future of healthcare:

https://medicalfuturist.com/magazine.

#pharmaceutics #digitalhealth #pharma