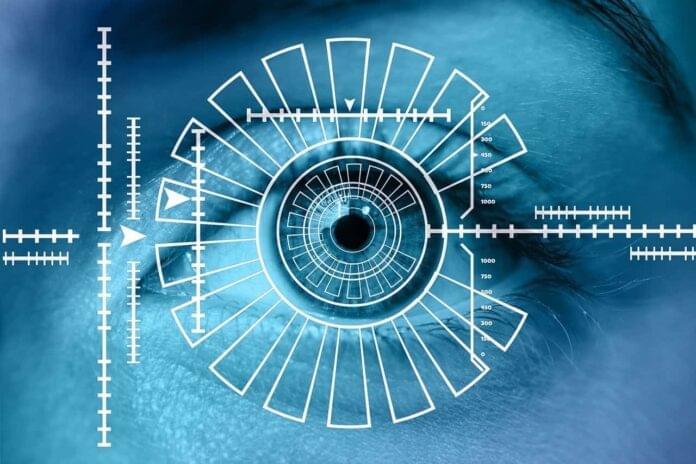

As the use of facial recognition technology (FRT) continues to expand, Congress, academics, and advocacy organizations have all highlighted the importance of developing a comprehensive understanding of how it is used by federal agencies.

The Government Accountability Office (GAO) surveyed 24 federal agencies about their use of FRT in a performance audit that ran from April 2020 through August 2021. Sixteen of the 24 agencies reported using FRT for digital access or cybersecurity, such as allowing employees to unlock agency smartphones with it. Six agencies reported using it to generate leads in criminal investigations, and five reported using it for physical security, such as controlling access to a building or facility. Ten agencies said they planned to expand their use of FRT through fiscal year 2023.

In addition, both the Department of Homeland Security (DHS) and the Department of State reported using FRT to identify or verify travelers within or seeking admission to the United States, to identify or verify the identity of non-U.S. citizens already in the United States, and to research agency information about non-U.S. citizens seeking admission to the United States. For example, DHS’s U.S. Customs and Border Protection used its Traveler Verification Service at ports of entry to help verify travelers’ identities. The Traveler Verification Service uses FRT to compare a photo taken of the traveler at a port of entry with existing photos in DHS holdings, which include photographs from U.S. passports, U.S. visas, and other travel documents, as well as photographs from previous DHS encounters.