It is designed as a platform for AI and human-robot interaction (HRI).


Robot Manufacturing Company Ready To Pay Out For A Humanoid Robot Appearance: Applications Is Over

The company received over 20,000 applications, and our client has decided to suspend the request collection. It will take up to 12 months to make the decision. Details: https://promo-bot.ai/media/humanoid_project/ Last week, the manufacturer of Promobot robots was seeking a face for a humanoid robot assistant that will work in hotels, shopping malls and other crowded places. The company was ready to pay someone willing to transfer the rights to use their face forever.

As of Monday, it appears that Promobot has stopped accepting applications for the opportunity. Additionally, further details regarding the project seem to have been removed from their site.

“The Promobot company wants to say thanks to everyone who responded to participation in the project. Today we have received over 20,000 applications and our client has decided to suspend the request collection… Those who didn’t have time to submit an application, please no worries, we are having more projects upcoming. Subscribe to our Instagram and stay tuned,” explained an update on the Promobot website.

Alibaba’s DAMO Academy Successfully Develops World’s First 3D Stacked In-Memory Computing Chip Based on DRAM

On Friday, Alibaba Cloud announced in a social media post that its DAMO Academy has successfully developed a 3D stacked In-Memory Computing (IMC) chip.

Alibaba Cloud claims this is a breakthrough that can help overcome the von Neumann bottleneck, a limit on throughput that arises because conventional computer architectures keep memory and processing units separate. The chip is intended to meet the needs of artificial intelligence (AI) and other scenarios that demand high-bandwidth, high-capacity memory and extreme computing power. In the specific AI scenario tested by Alibaba, the chip improved performance more than tenfold.

With the boom in AI applications, the shortcomings of the existing computer system architecture are gradually being revealed. One of the main problems is that moving data consumes a huge amount of energy. Under the traditional architecture, transferring data from the memory unit to the computing unit requires about 200 times the power of the computation itself, so only a small share of the energy and time actually goes to computing.
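As a rough illustration of the 200-to-1 figure quoted above, the short Python sketch below estimates what share of total energy is left for computation. The function name and the simplifying assumption that total energy is just data transfer plus computation are illustrative and do not come from Alibaba's announcement.

```python
def compute_energy_share(transfer_to_compute_ratio: float) -> float:
    """Return the fraction of total energy spent on computation itself.

    Assumes (hypothetically) that total energy = transfer + compute, where
    transfer energy is `transfer_to_compute_ratio` times the compute energy.
    """
    if transfer_to_compute_ratio < 0:
        raise ValueError("ratio must be non-negative")
    # compute / (compute + ratio * compute) simplifies to 1 / (1 + ratio)
    return 1.0 / (1.0 + transfer_to_compute_ratio)


# With the article's figure of roughly 200x, about half a percent of the
# energy goes to computing; the rest is spent moving data.
share = compute_energy_share(200.0)
print(f"{share:.2%}")  # prints "0.50%"
```

Under this simple model, any design that shortens the path between memory and compute, as in-memory computing aims to do, lowers the ratio and raises the share of energy spent on useful work.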

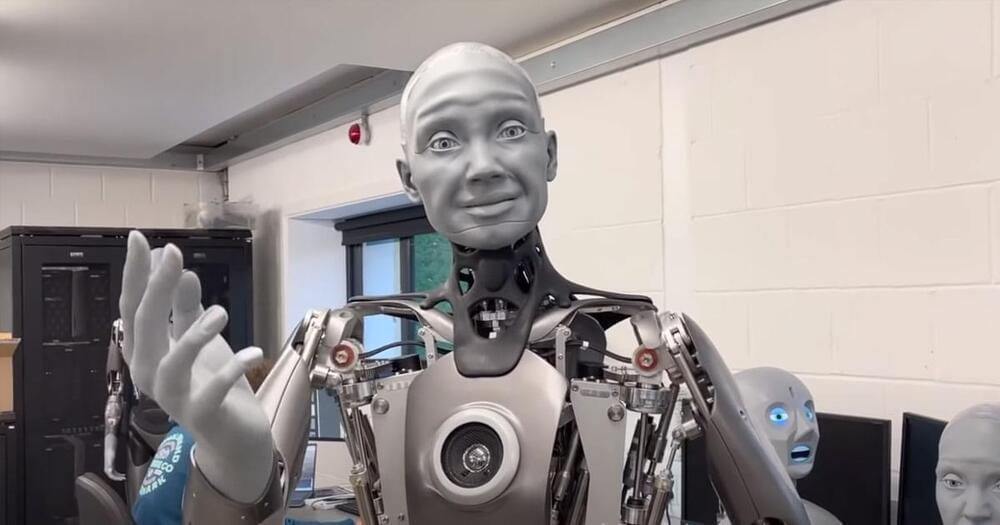

People Disturbed by Robot With Ultra-Realistic Human Face Expressions

Some users on Reddit were surprised by how much empathy they felt toward Ameca’s many expressions. “This is actually quite charming,” one user wrote.

But the most common comparison — of course — was to the Terminator.

“Neat!” one Redditor commented. “Is this the Hunter-Killer or Human Infiltration model?”

A competitor of Elon Musk and NEURALINK? Let’s take a look at PARADROMICS

Paradromics is a company developing brain-computer interfaces that will help people with disabilities communicate again. Their product will be the brain-computer interface with the highest data rate ever developed. Will it compete with other companies like Neuralink or Kernel in the race to read the brain?

0:00 Introduction to Paradromics.

1:45 The Product.

5:57 The Surgery.

7:41 Commercial availability.

Also check out this video on another Neuralink competitor, Kernel: https://youtu.be/DUICwT-fqt0


Sources:

Official Paradromics website: https://paradromics.com/

Paper — Laser Ablation of the Pia Mater for Insertion of High-Density Microelectrode Arrays in a Translational Sheep Model https://www.biorxiv.org/content/10.1101/2020.08.27.269233v2

Paper — The Argo: A 65,536 channel recording system for high density neural recording in vivo https://www.biorxiv.org/content/10.1101/2020.07.17.209403v1.full

Paper — The Argo: a high channel count recording system for neural recording in vivo https://iopscience.iop.org/article/10.1088/1741-2552/abd0ce

Paper — Massively parallel microwire arrays integrated with CMOS chips for neural recording https://advances.sciencemag.org/content/6/12/eaay2789

Towards a High-Resolution, Implantable Neural Interface https://www.darpa.mil/news-events/2017-07-10

Matt Angle with an update from Paradromics and their new Neurotech Pub Podcast https://www.youtube.com/watch?v=oSZGk3Smhsc

The Data Organ: Paradromics CEO Matt Angle On The Future Of The Brain-Computer Interface https://www.forbes.com/sites/johncumbers/2020/04/19/the-data…80a603d4ed.


South African crowd-solving startup Zindi building a community of data scientists and using AI to solve real-world problems

Zindi is all about using AI to solve real-world problems for companies and individuals, and the South Africa-based crowd-solving startup has been doing just that for the three years it has existed.

Just last year, a team of data scientists on Zindi used machine learning to improve air quality monitoring in Kampala, while another group helped Zimnat, an insurance company in Zimbabwe, predict customer behavior, in particular which customers were likely to leave and which interventions might make them stay. Zimnat was able to retain customers by offering custom-made services to those who would otherwise have left.

These are some of the solutions that have been realized to counter the data-centered challenges that companies, NGOs and government institutions submit to Zindi.

‘If Human, Kill’: Video Warns Of Need For Legal Controls On Killer Robots

A new video released by nonprofit The Future of Life Institute (FLI) highlights the risks posed by autonomous weapons or ‘killer robots’ – and the steps we can take to prevent them from being used. It even has Elon Musk scared.

Its original Slaughterbots video, released in 2017, was a short Black Mirror-style narrative showing how small quadcopters equipped with artificial intelligence and explosive warheads could become weapons of mass destruction. Initially developed for the military, the Slaughterbots end up being used by terrorists and criminals. As Professor Stuart Russell points out at the end of the video, all the technologies depicted already existed, but had not been put together.

Now the technologies have been put together, and lethal autonomous drones able to locate and attack targets without human supervision may already have been used in Libya.