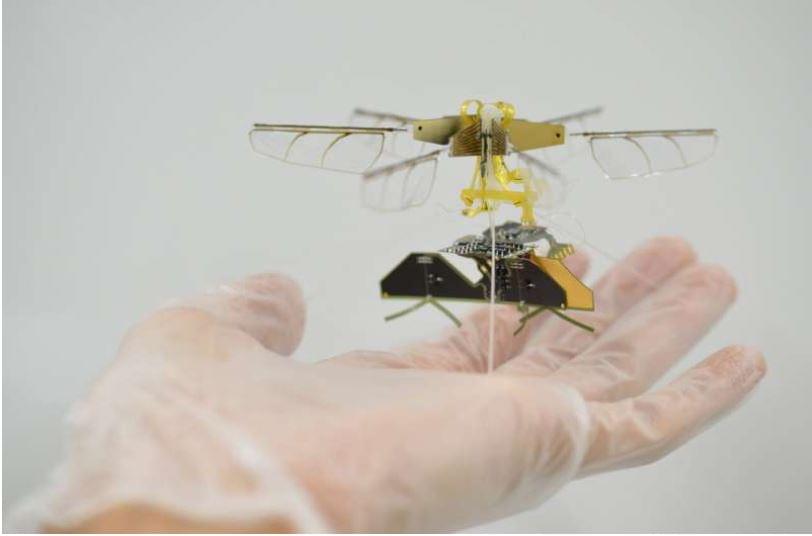

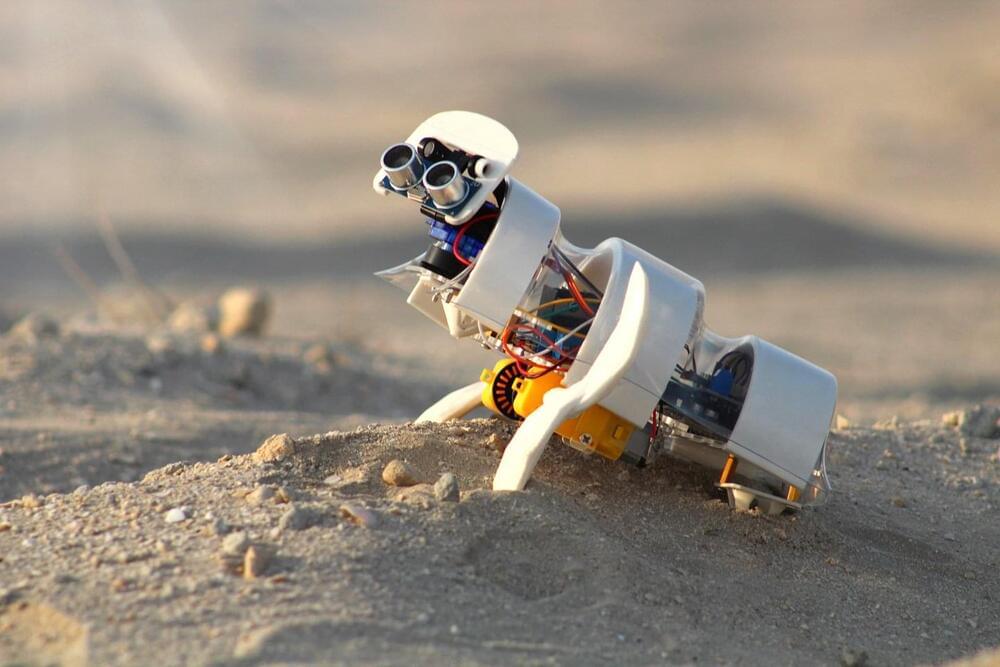

Biotechnology is a curious marriage of two seemingly disparate worlds. On one end, we have living organisms: wild, unpredictable creations that can probably never be fully understood or appreciated. On the other is technology: a cold, artificial entity that exists to bring convenience, structure and mathematical certainty to human lives. The contrast works well in combination, though, with biotechnology now an indispensable part of both healthcare and medicine. Beyond those two, biotechnology plays a central role in several other applications, including deep-sea exploration, protein synthesis, food quality regulation and the prevention of environmental degradation. The increasing involvement of AI in biotechnology is one of the main reasons for its growing scope of applications.

So, how exactly does AI impact biotechnology? For starters, AI fits in neatly with the dichotomous nature of biotechnology. After all, AI contains a duality of its own: machine-like efficiency combined with an almost animalistic unpredictability in the way it works. In general terms, businesses and experts involved in biotechnology use AI to improve both the quality of research and compliance with regulatory standards.

More specifically, AI improves data capture, analysis and pattern recognition in the following biotechnology-based applications: