Our understanding of progress in machine learning has been colored by flawed testing data.

How to use causal influence diagrams to recognize the hidden incentives that shape an AI agent’s behavior.

There is rightfully a lot of concern about the fairness and safety of advanced machine learning systems. To attack the root of the problem, researchers can analyze the incentives posed by a learning algorithm using causal influence diagrams (CIDs). DeepMind Safety Research, among others, has written about its research on CIDs, and I have written before about how they can be used to avoid reward tampering. However, while there is some writing on the types of incentives that can be found using CIDs, I haven’t seen a succinct write-up of the graphical criteria used to identify such incentives. To fill this gap, this post summarizes the incentive concepts and their corresponding graphical criteria, which were originally defined in the paper Agent Incentives: A Causal Perspective.

A causal influence diagram is a directed acyclic graph in which different types of nodes represent different elements of an optimization problem. Decision nodes represent values that an agent can influence, utility nodes represent the optimization objective, and structural nodes (also called chance nodes) represent the remaining variables, such as the state. The arrows show how the nodes are causally related, with dotted arrows indicating the information that an agent uses to make a decision. Below is the CID of a Markov Decision Process, with decision nodes in blue and utility nodes in yellow:

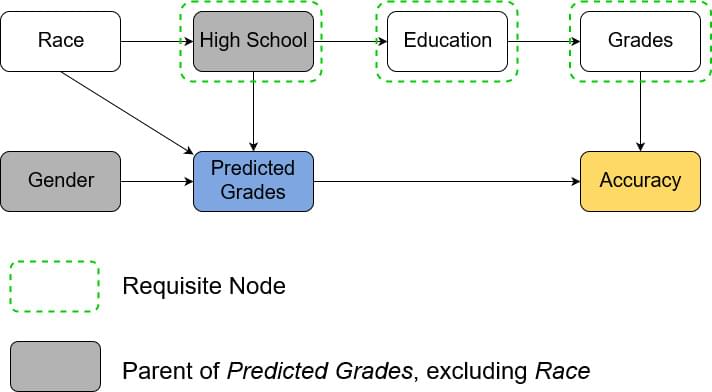
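To make the node and arrow types concrete, here is a minimal Python sketch of a CID for an unrolled Markov Decision Process. The names (`mdp_cid`, `is_acyclic`, `S1`, `A1`, `R1`) and the exact edge set are assumptions for illustration: the sketch assumes each action observes the current state, each reward depends on the current state, and the next state depends on the current state and action. The figure may differ in its details.

```python
def mdp_cid(horizon=3):
    """Build an assumed CID for an MDP unrolled over `horizon` steps.

    Returns (node_types, edges). Each edge is (parent, child, kind), where
    kind is "information" for dotted arrows into a decision node and
    "causal" for all other arrows.
    """
    node_types = {}
    edges = []
    for t in range(1, horizon + 1):
        state, action, reward = f"S{t}", f"A{t}", f"R{t}"
        node_types[state] = "structural"   # chance node: the state
        node_types[action] = "decision"    # chosen by the agent
        node_types[reward] = "utility"     # what the agent optimizes
        edges.append((state, action, "information"))  # agent observes the state
        edges.append((state, reward, "causal"))       # assumed: reward depends on state
        if t < horizon:
            edges.append((state, f"S{t + 1}", "causal"))
            edges.append((action, f"S{t + 1}", "causal"))
    return node_types, edges


def is_acyclic(edges):
    """Check the 'directed acyclic' requirement with Kahn's algorithm."""
    nodes = {n for parent, child, _ in edges for n in (parent, child)}
    indegree = {n: 0 for n in nodes}
    for _, child, _ in edges:
        indegree[child] += 1
    ready = [n for n in nodes if indegree[n] == 0]
    visited = 0
    while ready:
        node = ready.pop()
        visited += 1
        for parent, child, _ in edges:
            if parent == node:
                indegree[child] -= 1
                if indegree[child] == 0:
                    ready.append(child)
    # Every node is visited exactly when the graph has no cycle.
    return visited == len(nodes)


node_types, edges = mdp_cid(horizon=3)
decisions = sorted(n for n, kind in node_types.items() if kind == "decision")
```

Keeping the arrow kind on each edge lets later checks distinguish what the agent observes (information links) from how the world evolves (causal links).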

The first model is trying to predict a high school student’s grades in order to evaluate their university application. The model uses the student’s high school and gender as input and outputs the predicted GPA. In the CID below we see that predicted grade is a decision node. Because we train our model for accurate predictions, accuracy is the utility node. The remaining structural nodes show how relevant facts about the world relate to each other. The arrows from gender and high school to predicted grade show that those are inputs to the model. For our example we assume that a student’s gender doesn’t affect their grade, so there is no arrow between them. On the other hand, a student’s high school is assumed to affect their education, which in turn affects their grade, which of course affects accuracy. The example assumes that a student’s race influences the high school they go to. Note that only high school and gender are known to the model.
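The grade prediction example can be written down as a small edge list and queried with plain graph reachability. This is only a sketch: the node names and the helper `has_directed_path` are hypothetical, the arrow from predicted grade to accuracy is implied by the text rather than stated, and the reachability checks are not the graphical criteria from the paper, only a way to read the diagram programmatically.

```python
# Arrows described in the text, written as (parent, child).
EDGES = [
    ("race", "high_school"),
    ("high_school", "education"),
    ("education", "grade"),
    ("grade", "accuracy"),
    ("high_school", "predicted_grade"),   # information link: model input
    ("gender", "predicted_grade"),        # information link: model input
    ("predicted_grade", "accuracy"),      # assumed: accuracy compares prediction and grade
]
DECISION = "predicted_grade"
UTILITY = "accuracy"


def has_directed_path(source, target, edges, blocked=frozenset()):
    """Return True if a directed path leads from source to target
    without passing through any node in `blocked`."""
    stack, seen = [source], set()
    while stack:
        node = stack.pop()
        if node == target:
            return True
        if node in seen or node in blocked:
            continue
        seen.add(node)
        stack.extend(child for parent, child in edges if parent == node)
    return False


# What the model observes: the parents of the decision node.
observed = {parent for parent, child in EDGES if child == DECISION}

# Does a variable influence accuracy through the world, bypassing the prediction?
school_affects_outcome = has_directed_path("high_school", UTILITY, EDGES, {DECISION})
gender_affects_outcome = has_directed_path("gender", UTILITY, EDGES, {DECISION})
race_affects_outcome = has_directed_path("race", UTILITY, EDGES, {DECISION})
```

Under these assumptions, high school and race reach accuracy along a path that bypasses the prediction, while gender reaches accuracy only through the model's own output.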

Sanctuary AI says building human-level artificial intelligence that can execute human tasks safely requires a deep understanding of the living mind. Hello World’s Ashlee Vance heads to Vancouver to see the startup’s progress toward bringing robots to life.

Yet when faced with enormous protein complexes, AI faltered. Until now. In a mind-bending feat, a new algorithm deciphered the structure at the heart of inheritance: a massive complex of roughly 1,000 proteins that helps channel DNA instructions to the rest of the cell. The AI model builds on AlphaFold by DeepMind and RoseTTAFold from Dr. David Baker’s lab at the University of Washington, both of which were released to the public for further experimentation.

Our genes are housed in a planet-like structure, dubbed the nucleus, for protection. The nucleus is a high-security castle: only specific molecules are allowed in and out to deliver DNA instructions to the outside world—for example, to protein-making factories in the cell that translate genetic instructions into proteins.

At the heart of regulating this traffic are nuclear pore complexes, or NPCs (wink to gamers). They’re like extremely intricate drawbridges that strictly monitor the ins and outs of molecular messengers. In biology textbooks, NPCs often look like thousands of cartoonish potholes dotted on a globe. In reality, each NPC is a massively complex, donut-shaped architectural wonder, and one of the largest protein complexes in our bodies.

Never mind the cost of computing, OpenAI said the Upwork contractors alone cost $160,000. Though to be fair, manually labeling the whole data set would have run into the millions and taken considerable time to complete. And while the computing power wasn’t negligible, the model was actually quite small. VPT’s hundreds of millions of parameters are orders of magnitude fewer than GPT-3’s hundreds of billions.

Still, the drive to find clever new approaches that use less data and computing is valid. A kid can learn Minecraft basics by watching one or two videos. Today’s AI requires far more to learn even simple skills. Making AI more efficient is a big, worthy challenge.

In any case, OpenAI is in a sharing mood this time. The researchers say VPT isn’t without risk—they’ve strictly controlled access to algorithms like GPT-3 and DALL-E partly to limit misuse—but the risk is minimal for now. They’ve open sourced the data, environment, and algorithm and are partnering with MineRL. This year’s contestants are free to use, modify, and fine-tune the latest in Minecraft AI.

Parents of the future rejoice! Scientists in China have developed an AI nanny that they say could one day take care of human fetuses in a lab.

Researchers in Suzhou, China, claim to have created a system that can monitor and care for embryos as they develop into fetuses inside an artificial womb, The South China Morning Post reports.

After Tesla announced at the beginning of the month that it would cut 10 percent of its workforce due to CEO Elon Musk’s “bad feeling” about the economy, the layoffs are in full swing. According to Insider, many newer employees, including workers who had not yet started their newly accepted positions, are bearing the brunt of the cuts.

“Damn, talk about a gut punch,” wrote Iain Abshier, a brand-new Tesla recruiter, in a LinkedIn post last week. “Friday afternoon I was included in the Tesla layoffs after just two weeks of work.”

It’s worth noting that these cuts come amid a recall investigation into Tesla’s controversial Autopilot technology, not to mention reports of widespread braking issues and the CEO’s recent lament over supply chain issues. Together, these leave Tesla’s long-term viability more uncertain than it has been in years, with the brunt of the consequences falling on the company’s labor force.

Suspended Google engineer Blake Lemoine made some serious headlines earlier this month when he claimed that one of the company’s experimental AIs called LaMDA had achieved sentience — prompting the software giant to place him on administrative leave.

“If I didn’t know exactly what it was, which is this computer program we built recently, I’d think it was a seven-year-old, eight-year-old kid that happens to know physics,” he told the Washington Post at the time.

The subsequent news cycle swept up AI experts, philosophers, and Google itself into a fierce debate about the current and possible future capabilities of machine learning, other ethical concerns around the tech, and even the nature of consciousness and sentience. The general consensus, it’s worth noting, was that the AI is almost certainly not sentient.