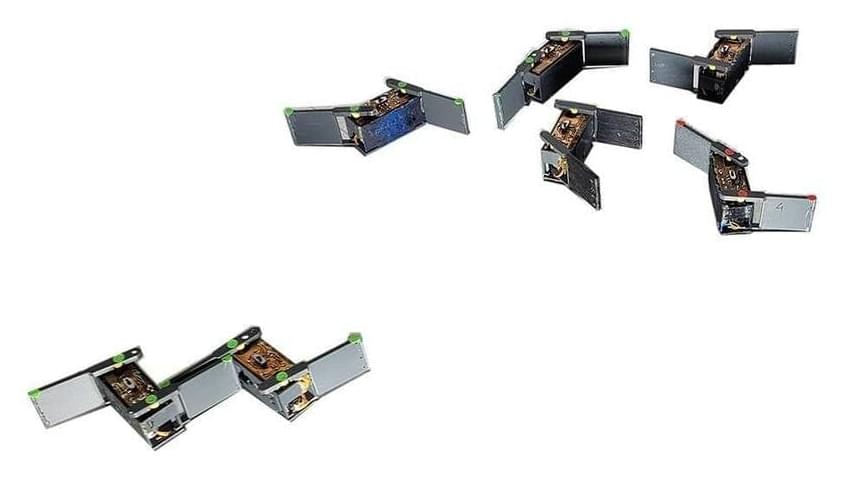

SpaceX is building a 480-foot-tall giant robot with arms that will catch giant rockets.

It is insanely cool. Sci-fi made real.

But moon landings were already insanely cool.

The difference is that, if this works, we could have almost a thousand launches per month from the same launch pad, versus at most one today.