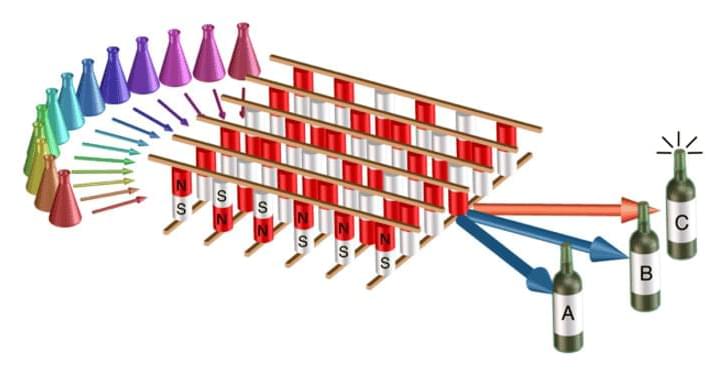

Penn State agricultural engineers have developed, for the first time, a prototype "end-effector" that can deftly remove unwanted apples from trees, an initial step toward robotic green-fruit thinning.

The development is important, according to Long He, assistant professor of agricultural and biological engineering, because manual thinning is labor-intensive, and the shrinking labor force in apple production is making it economically infeasible. The end-effector grew out of a new study conducted by his research group in the College of Agricultural Sciences.

Apples are a high-value agricultural commodity in the U.S., with annual production of nearly 10 billion pounds valued at nearly $3 billion, according to He, a leader in agricultural robotics research who previously developed automated components for mushroom picking and apple tree pruning. Green-fruit thinning, the process of discarding excess fruitlets in early summer mainly to increase the size and quality of the remaining fruit, is one of the most important aspects of apple production.