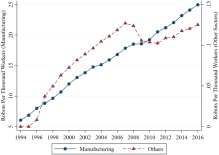

This study explores the relationship between the adoption of industrial robots and workplace injuries using data from the United States (US) and Germany. Our empirical analyses, based on establishment-level data for the US, suggest that a one standard deviation increase in robot exposure reduces work-related injuries by approximately 16%. These results are driven by manufacturing firms (−28%), while we detect no effect in sectors that were less exposed to industrial robots. We also show that US counties more exposed to robot penetration experience a significant increase in drug- or alcohol-related deaths and mental health problems, consistent with existing evidence of negative effects of robots on labor market outcomes in the US. Using individual longitudinal data from Germany, we exploit within-individual changes in robot exposure and document similar effects on the physical intensity of jobs (−4%) and on disability (−5%). However, we find no evidence of significant effects on mental health or on work and life satisfaction, consistent with the absence of significant impacts of robot penetration on labor market outcomes in Germany.