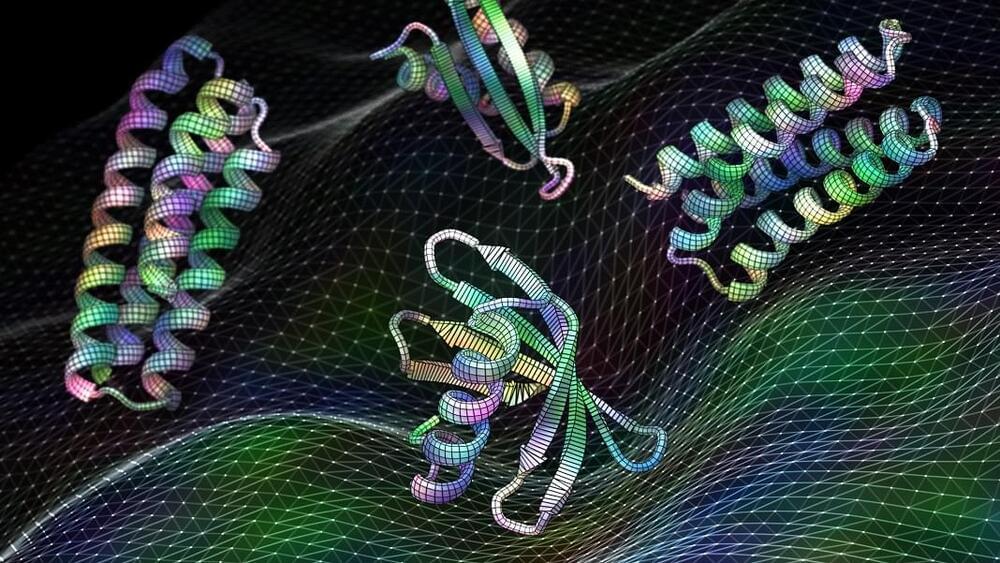

A new study in Science overturned the whole playbook. Led by Dr. David Baker at the University of Washington, a team tapped into an AI’s “imagination” to dream up a myriad of functional sites from scratch. It’s a machine mind’s “creativity” at its best: a deep learning algorithm that predicts the general area of a protein’s functional site, then further sculpts the structure.

As a reality check, the team used the new software to generate drugs that battle cancer and design vaccines against common, if sometimes deadly, viruses. In one case, the digital mind came up with a solution that, when tested in isolated cells, was a perfect match for an existing antibody against a common virus. In other words, the algorithm “imagined” a hotspot from a viral protein, making it a vulnerable target for designing new treatments.

The algorithm is deep learning’s first foray into building proteins around their functions, opening a door to treatments that were previously unimaginable. But the software isn’t limited to natural protein hotspots. “The proteins we find in nature are amazing molecules, but designed proteins can do so much more,” said Baker in a press release. The algorithm is “doing things that none of us thought it would be capable of.”