Category: robotics/AI

Stretchy, Wearable Synaptic Transistor Turns Robotics Smarter

A team of Penn State engineers has created a stretchy, wearable synaptic transistor that could make robots and wearable devices smarter. The device works like neurons in the brain, sending signals to some cells and inhibiting others to strengthen or weaken the devices’ memories.

The research was led by Cunjiang Yu, Dorothy Quiggle Career Development Associate Professor of Engineering Science and Mechanics and associate professor of biomedical engineering and of materials science and engineering.

The research was published in Nature Electronics.

AI-enabled imaging of retina’s vascular network can predict cardiovascular disease and death

AI-enabled imaging of the retina’s network of veins and arteries can accurately predict cardiovascular disease and death, without the need for blood tests or blood pressure measurement, finds research published online in the British Journal of Ophthalmology.

As such, it paves the way for a highly effective, non-invasive screening test for people at medium to high risk of circulatory disease that doesn’t have to be done in a clinic, suggest the researchers.

Circulatory diseases, including cardiovascular disease, coronary heart disease, heart failure and stroke, are major causes of ill health and death worldwide, accounting for 1 in 4 deaths in the UK alone.

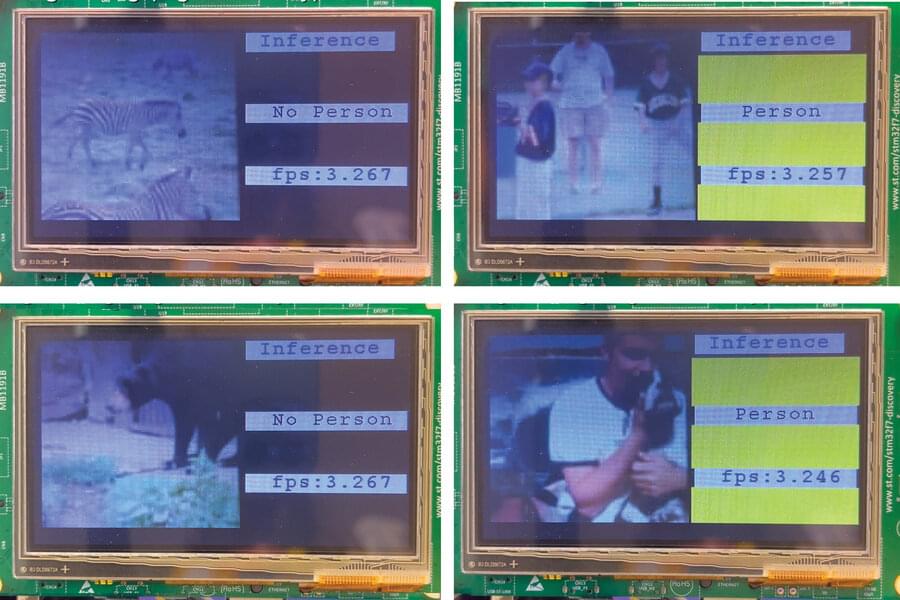

New technique enables on-device training using less than a quarter of a megabyte of memory

Microcontrollers, miniature computers that can run simple commands, are the basis for billions of connected devices, from internet-of-things (IoT) devices to sensors in automobiles. But cheap, low-power microcontrollers have extremely limited memory and no operating system, making it challenging to train artificial intelligence models on “edge devices” that work independently from central computing resources.

Training a machine-learning model on an intelligent edge device allows it to adapt to new data and make better predictions. For instance, training a model on a smart keyboard could enable the keyboard to continually learn from the user’s writing. However, the training process requires so much memory that it is typically done using powerful computers at a data center, before the model is deployed on a device. This is more costly and raises privacy issues since user data must be sent to a central server.

To address this problem, researchers at MIT and the MIT-IBM Watson AI Lab have developed a new technique that enables on-device training using less than a quarter of a megabyte of memory. Other training solutions designed for connected devices can use more than 500 megabytes of memory, greatly exceeding the 256-kilobyte capacity of most microcontrollers (there are 1,024 kilobytes in one megabyte).
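The memory figures quoted above can be checked with a little arithmetic. The following is only a back-of-the-envelope sketch in Python: the variable names are illustrative assumptions, the numbers come from the paragraph above, and 500 megabytes is treated as a lower bound because the article says other solutions "can use more than" that amount.

```python
# Back-of-the-envelope check of the memory figures quoted in the article.
# All variable names are illustrative; only the numbers come from the text.

KB_PER_MB = 1024            # conversion stated in the article

mcu_capacity_kb = 256       # capacity of most microcontrollers, per the article
other_methods_mb = 500      # lower bound quoted for other training solutions

# "Less than a quarter of a megabyte" expressed in kilobytes.
quarter_mb_in_kb = KB_PER_MB / 4

# Memory used by other solutions, converted to kilobytes.
other_methods_kb = other_methods_mb * KB_PER_MB

# How many times the other solutions exceed the microcontroller's capacity.
overshoot = other_methods_kb / mcu_capacity_kb

print(f"A quarter of a megabyte is {quarter_mb_in_kb:.0f} KB")
print(f"500 MB is {other_methods_kb} KB, about {overshoot:.0f}x a 256 KB microcontroller")
```

Under these figures, a quarter of a megabyte matches the 256-kilobyte capacity exactly, while a 500-megabyte training footprint would exceed it by roughly three orders of magnitude.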

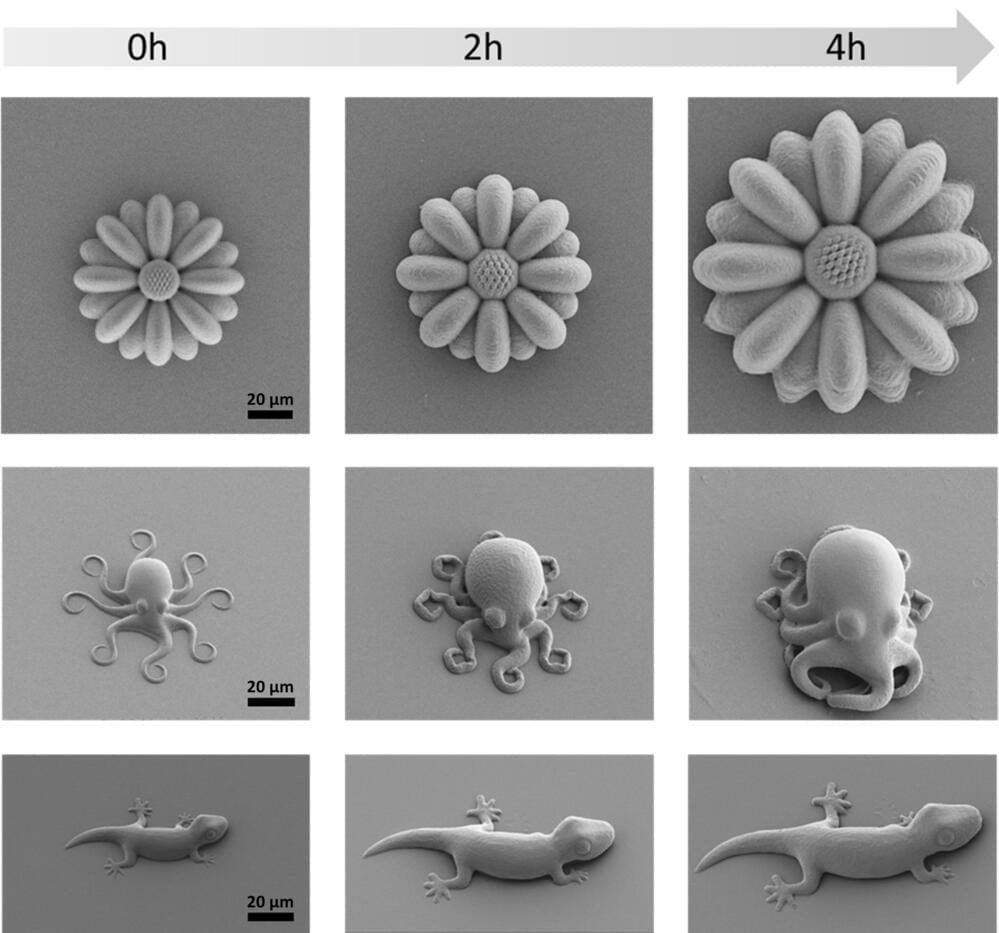

Manufacturing microscopic octopuses with a 3D printer

Although just cute little creatures at first glance, the microscopic geckos and octopuses fabricated by 3D laser printing in the molecular engineering labs at Heidelberg University could open up new opportunities in fields such as microrobotics or biomedicine.

The printed microstructures are made from novel materials, known as smart polymers, whose size and mechanical properties can be tuned on demand and with high precision. These “life-like” 3D microstructures were developed in the framework of the “3D Matter Made to Order” (3DMM2O) Cluster of Excellence, a collaboration between Ruperto Carola (Heidelberg University) and the Karlsruhe Institute of Technology (KIT).

“Manufacturing programmable materials whose mechanical properties can be adapted on demand is highly desired for many applications,” states Junior Professor Dr. Eva Blasco, group leader at the Institute of Organic Chemistry and the Institute for Molecular Systems Engineering and Advanced Materials of Heidelberg University.

Wisk Aero aims to be the first FAA-certified autonomous air taxi with its 6th-generation eVTOL

The company targets a price of $3 per passenger per mile.

California-based Advanced Air Mobility (AAM) company Wisk Aero has unveiled the world’s first self-flying, all-electric, four-passenger eVTOL air taxi. Generation 6 is Wisk’s go-to-market aircraft and the first autonomous eVTOL to be a candidate for type approval by the Federal Aviation Administration (FAA).

The most sophisticated air taxi in the world, Generation 6 combines one of the safest passenger transport systems in commercial aviation with industry-leading autonomous technology and software, human oversight of every trip, and a generally streamlined design.

Elon Musk shows off humanoid robot prototype at Tesla AI Day

Tesla’s AI Day 2022 was mainly a recruiting event, according to CEO Elon Musk.