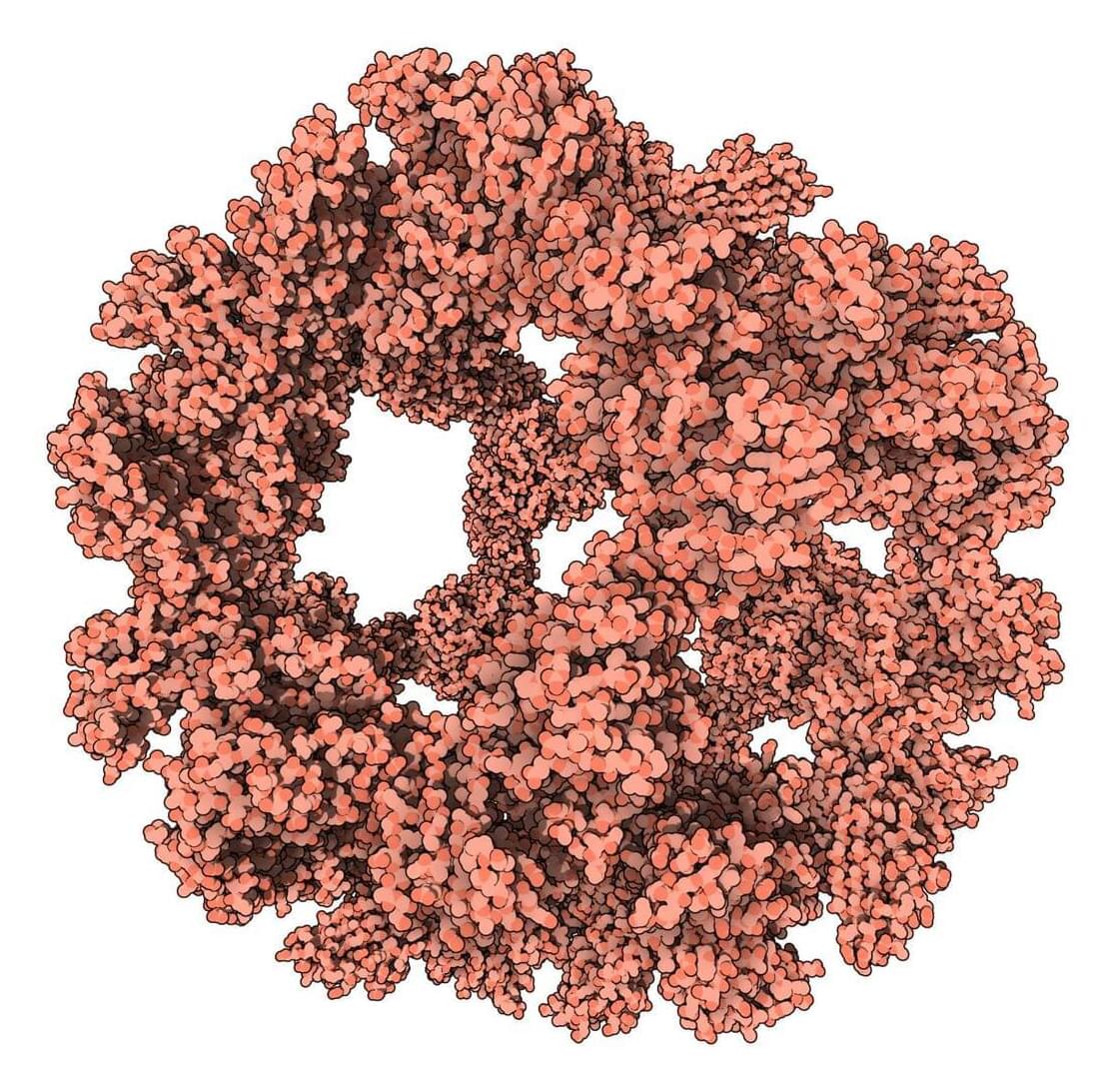

Researchers have tapped into the power of generative artificial intelligence to aid them in the fight against one of humanity’s most pernicious foes: antibiotic-resistant bacteria. Using a model trained on a library of about 40,000 chemicals, scientists were able to build never-before-seen antibiotics that killed two of the most notorious multidrug-resistant bacteria on earth.