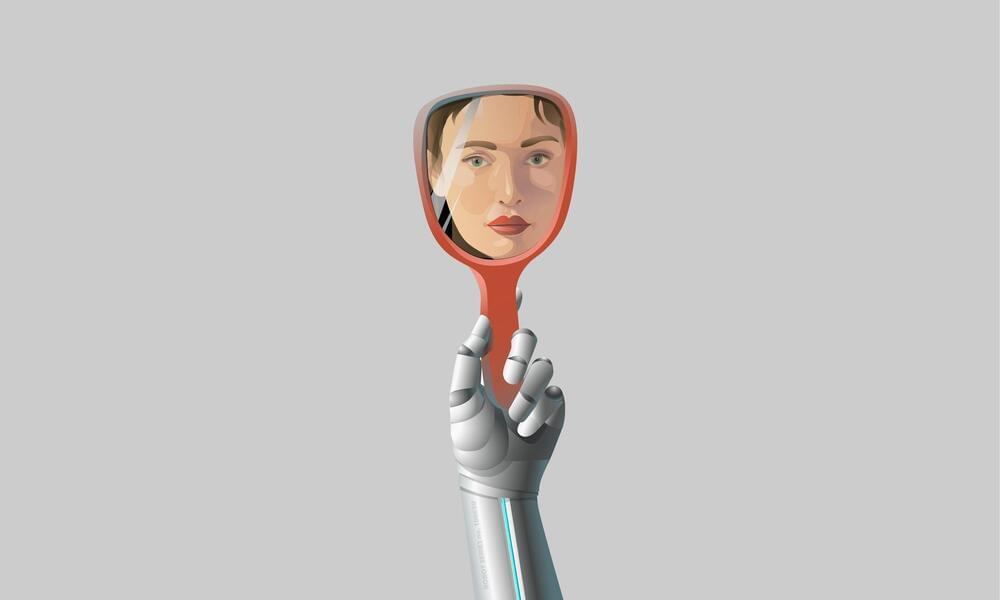

Boston Dynamics’ Atlas, the world’s most advanced humanoid robot, is learning some new tricks. The company has finally given Atlas proper hands, and in Boston Dynamics’ latest YouTube video, Atlas attempts to do some actual work. The company also released a second, behind-the-scenes video showing some of the work that goes into Atlas. And when things don’t go right, we get to see the spectacular slams the robot takes in its efforts to advance humanoid robotics.

As a humanoid robot, Atlas has mostly focused on locomotion: first walking in a lab, then walking on every kind of unstable terrain imaginable, then doing some sick parkour tricks. Locomotion is all about the legs, though, and the upper half seemed like an afterthought, with the arms used only to swing around for balance. Atlas previously didn’t even have hands; the last time we saw it, there were only two incomplete-looking ball grippers at the ends of its arms.

This newest iteration of the robot has actual grippers. They’re simple clamp-style hands with a wrist and a single moving finger, but that’s good enough for picking things up. The focus of this video is moving “inertially significant” objects: not just light boxes, but objects heavy enough to throw Atlas off balance. These include a big plank, a bag full of tools, and a barbell with two 10-pound weights. Atlas is learning all about those “equal and opposite forces” in the world.