Meta’s Chief AI Scientist calls out ChatGPT for its limitations.

Perceptually-enabled Task Guidance prototypes demonstrated the ability to help people complete recipes, used as a proxy for unfamiliar tasks.

“Perceptually-enabled Task Guidance (PTG) teams demonstrated a recipe for success in early prototypes of super smart #AIassistants that can see what a user sees and hear what they hear to help them accomplish unfamiliar tasks. More: https://www.darpa.mil/news-events/2023-01-25”

Controlling the trajectory of a basketball is relatively straightforward, as it only requires the application of mechanical force and human skill. However, controlling the movement of quantum systems like atoms and electrons poses a much greater challenge. These tiny particles are prone to perturbations that can cause them to deviate from their intended path in unexpected ways. Additionally, motion within the system decays over time, an effect known as damping, and noise from environmental factors like temperature further disrupts its trajectory.

To counteract the effects of damping and noise, researchers from Okinawa Institute of Science and Technology (OIST) in Japan have found a way to use artificial intelligence to discover and apply stabilizing pulses of light or voltage with fluctuating intensity to quantum systems. This method was able to successfully cool a micro-mechanical object to its quantum state and control its motion in an optimized way. The research was recently published in the journal Physical Review Research.

Researchers reveal person-shaped robots that can turn themselves into liquid. The objects are also magnetic and can conduct electricity.

Researchers have created humanoid, miniature robots that can shapeshift and turn into liquid. The breakthrough could allow for the creation of more robots that can shift between liquid and solid, allowing them to be used in a variety of situations.

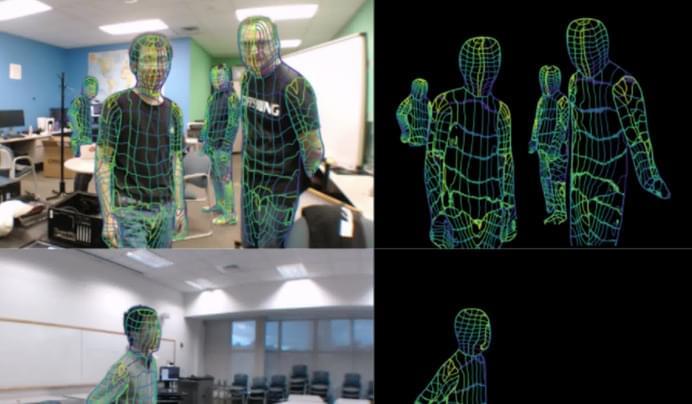

‘This technology may be scaled to monitor the well-being of elderly people or just identify suspicious behaviours at home,’ scientists claim.

Scientists have figured out how to identify people in a building by using artificial intelligence to analyse WiFi signals. A team at Carnegie Mellon University developed a deep neural network to digitally map human bodies when in the presence of WiFi signals.

Researchers have claimed that artificial intelligence (AI) will reach the singularity within seven years, after attempting to quantify its progress.

Translation company Translated, presenting their work at an Association for Machine Translation in the Americas conference, explained that they first began testing machine translation technology in 2011. The team settled on a metric to measure AI progress, which they’ve called “Time to Edit” (TTE). Simply put, it is the time it takes a human translator to edit a translation produced by another human or an AI.

Over the years, the TTE for AI-translated texts has come down fairly consistently, leading Translated to predict the date when AI hits the singularity, that is, the point at which the time needed to edit a machine translation equals the time needed to edit a human one.
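To make the extrapolation idea concrete, here is a minimal sketch of how a steadily falling metric can be projected forward to the point where it meets a fixed baseline. It assumes a simple straight-line trend, which the article does not confirm as Translated's actual method. The function names, the sample values, and the baseline are all hypothetical placeholders, not measured TTE figures.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def crossing_point(slope, intercept, baseline):
    """Return the x value at which the fitted line reaches `baseline`."""
    if slope >= 0:
        raise ValueError("the metric is not decreasing, so it never reaches the baseline")
    return (baseline - intercept) / slope


# Hypothetical, made-up values in arbitrary units (NOT real TTE data):
# x is a measurement period index, y is the editing time observed in that period.
periods = [0, 1, 2, 3]
machine_tte = [4.0, 3.5, 3.0, 2.5]
human_baseline = 1.0  # assumed editing time for a human-produced translation

slope, intercept = fit_line(periods, machine_tte)
parity_period = crossing_point(slope, intercept, human_baseline)
print(f"Trend reaches the human baseline at period {parity_period:.1f}")
```

A straight line is the simplest possible choice here. Any real projection would depend on how the trend is modeled and on how noisy the measurements are, so the crossing point should be read as an illustration rather than a forecast.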

A chatbot powered by reams of data from the internet has passed exams at a US law school after writing essays on topics ranging from constitutional law to taxation and torts.

ChatGPT from OpenAI, a US company that this week got a massive injection of cash from Microsoft, uses artificial intelligence (AI) to generate streams of text from simple prompts.

The results have been so good that educators have warned it could lead to widespread cheating and even signal the end of traditional classroom teaching methods.

Inspired by sea cucumbers, engineers have designed miniature robots that rapidly and reversibly shift between liquid and solid states. On top of being able to shape-shift, the robots are magnetic and can conduct electricity. The researchers put the robots through an obstacle course of mobility and shape-morphing tests in a study publishing January 25 in the journal Matter.

Where traditional robots are hard-bodied and stiff, “soft” robots have the opposite problem; they are flexible but weak, and their movements are difficult to control. “Giving robots the ability to switch between liquid and solid states endows them with more functionality,” says Chengfeng Pan (@ChengfengPan), an engineer at The Chinese University of Hong Kong who led the study.

The team created the new phase-shifting material—dubbed a “magnetoactive solid-liquid phase transitional machine”—by embedding magnetic particles in gallium, a metal with a very low melting point (29.8 °C).

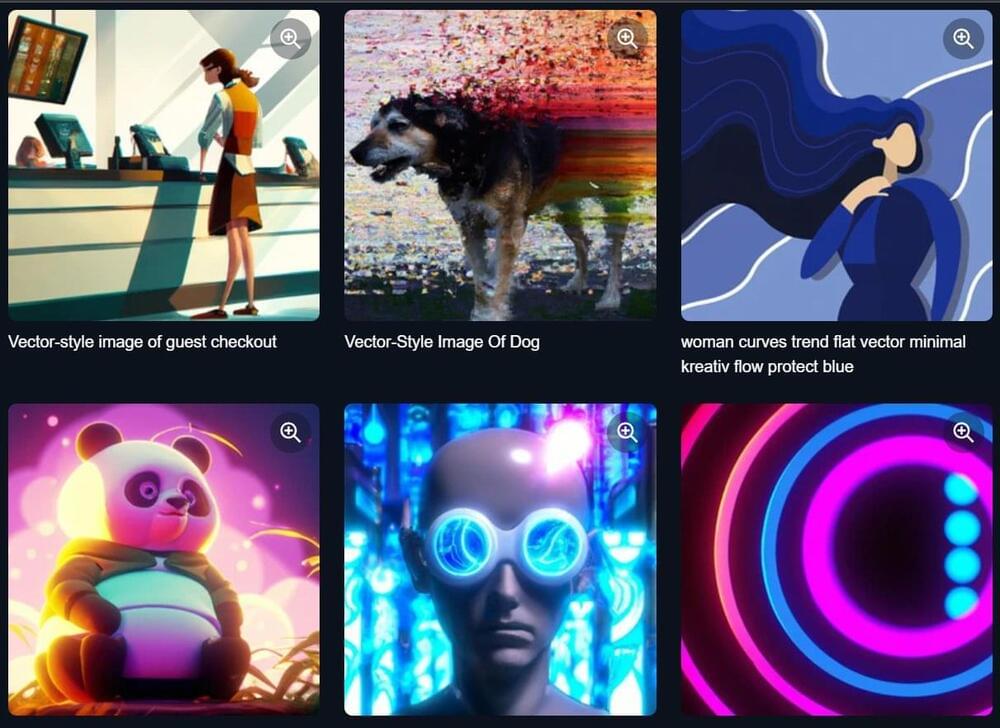

Shutterstock is cooperating with OpenAI, and the stock-image provider is now releasing a platform for AI image generation.

In October, Shutterstock and OpenAI partnered to integrate DALL-E 2 into the company’s creative tools. Today, Shutterstock released the AI image generation platform. It is available to all users and the images generated can be used under license.

According to Shutterstock, the tool was developed with an “ethical approach and uses a library of assets that authentically represent the world we live in. Shutterstock also recognizes the artists’ contributions to the generative content by paying royalties for the intellectual property used to develop the models and for the ongoing licensing of the content.”