As mentioned, ChatGPT is available in free and paid-for tiers. You might have to sit in a queue for the free version for a while, but anyone can play around with its capabilities.

Google Bard is currently available only to a limited group of beta testers, not to the wider public.

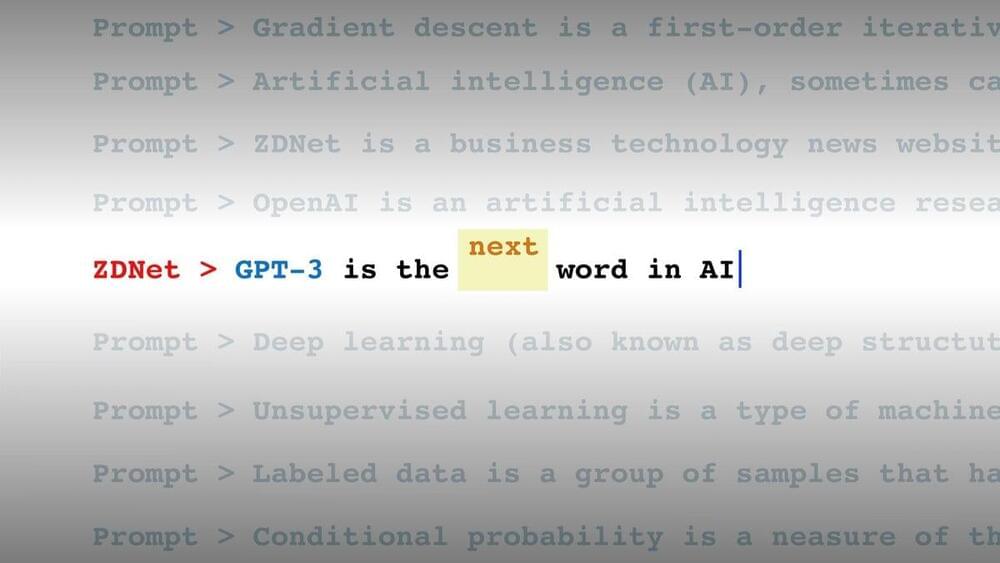

ChatGPT and Google Bard are very similar natural language AI chatbots, but they have some differences and are designed to be used in slightly different ways, at least for now. ChatGPT has been used to answer direct questions with direct answers, mostly correctly. It has also caused a lot of consternation among white-collar workers, such as writers, SEO advisors, and copy editors, because it has demonstrated an impressive ability to write creatively, even if it has faced a few problems with accuracy and plagiarism.