Kaggle notebook with all the code: https://www.kaggle.com/wwsalmon/simple-mnist-nn-from-scratch-numpy-no-tf-keras.

Blog article with more/clearer math explanation: https://www.samsonzhang.com/2020/11/24/understanding-the-mat…numpy.html

An introductory lecture for MIT course 6.S094 on the basics of deep learning including a few key ideas, subfields, and the big picture of why neural networks have inspired and energized an entire new generation of researchers. For more lecture videos on deep learning, reinforcement learning (RL), artificial intelligence (AI & AGI), and podcast conversations, visit our website or follow TensorFlow code tutorials on our GitHub repo.

INFO:

Website: https://deeplearning.mit.edu.

GitHub: https://github.com/lexfridman/mit-deep-learning.

Slides: http://bit.ly/deep-learning-basics-slides.

Playlist: http://bit.ly/deep-learning-playlist.

Blog post: https://link.medium.com/TkE476jw2T

OUTLINE:

0:00 — Introduction.

0:53 — Deep learning in one slide.

4:55 — History of ideas and tools.

9:43 — Simple example in TensorFlow.

11:36 — TensorFlow in one slide.

13:32 — Deep learning is representation learning.

16:02 — Why deep learning (and why not)

22:00 — Challenges for supervised learning.

38:27 — Key low-level concepts.

46:15 — Higher-level methods.

1:06:00 — Toward artificial general intelligence.

CONNECT:

- If you enjoyed this video, please subscribe to this channel.

- Twitter: https://twitter.com/lexfridman.

- LinkedIn: https://www.linkedin.com/in/lexfridman.

- Facebook: https://www.facebook.com/lexfridman.

- Instagram: https://www.instagram.com/lexfridman

What are the neurons, why are there layers, and what is the math underlying it?

Help fund future projects: https://www.patreon.com/3blue1brown.

Written/interactive form of this series: https://www.3blue1brown.com/topics/neural-networks.

Additional funding for this project provided by Amplify Partners.

Typo correction: At 14 minutes 45 seconds, the last index on the bias vector is n, when it should in fact be k. Thanks for the sharp eyes that caught that!
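The typo above comes down to the shapes in a single layer. With n input activations and k output neurons, the weight matrix has one row per output neuron, and the bias vector has one entry per output neuron. Its indices therefore run over the outputs (k), not the inputs (n). The following is a minimal pure-Python sketch of one layer computing σ(W a + b). It assumes a sigmoid activation, and the names `sigmoid`, `layer_forward`, `W`, `b`, and `a0`, along with the numbers, are hypothetical illustrations, not code from the video or the book.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    # Logistic function: squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(weights: List[List[float]], bias: List[float],
                  inputs: List[float]) -> List[float]:
    """Compute sigmoid(W a + b) for one fully connected layer (illustrative sketch).

    weights: one row per output neuron; each row holds one weight per input.
    bias: one entry per output neuron, so len(bias) must equal len(weights).
    """
    if len(bias) != len(weights):
        raise ValueError("bias needs one entry per output neuron (row of W)")
    outputs = []
    for row, b in zip(weights, bias):
        if len(row) != len(inputs):
            raise ValueError("each row of W needs one weight per input activation")
        # Weighted sum of the previous layer's activations, plus this neuron's bias
        z = sum(w * a for w, a in zip(row, inputs)) + b
        outputs.append(sigmoid(z))
    return outputs


# Hypothetical example: 3 input activations feeding 2 output neurons,
# so W is 2x3 and the bias vector has 2 entries (not 3).
W = [[0.5, -1.0, 0.25],
     [1.0, 0.0, -0.5]]
b = [0.0, 0.1]
a0 = [1.0, 0.5, 2.0]
a1 = layer_forward(W, b, a0)
print(len(a1))  # 2
```

Passing a bias of length 3 (one entry per input) raises `ValueError`. That is the same mismatch the typo correction fixes: the bias is indexed by the layer's output neurons.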

For those who want to learn more, I highly recommend the book by Michael Nielsen introducing neural networks and deep learning: https://goo.gl/Zmczdy.

There are two neat things about this book. First, it’s available for free, so consider joining me in making a donation Nielsen’s way if you get something out of it. And second, it’s centered around walking through some code and data which you can download yourself, and which covers the same example that I introduce in this video. Yay for active learning!

https://github.com/mnielsen/neural-networks-and-deep-learning.

I also highly recommend Chris Olah’s blog: http://colah.github.io/

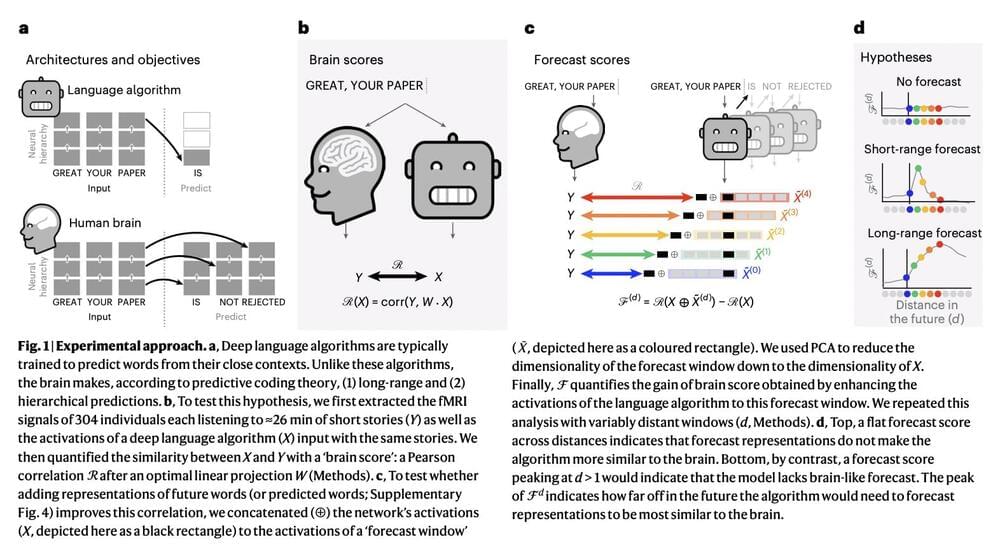

Deep learning has made significant strides in text generation, translation, and completion in recent years. Algorithms trained to predict words from their surrounding context have been instrumental in achieving these advancements. However, despite access to vast amounts of training data, deep language models still struggle with tasks like long story generation, summarization, coherent dialogue, and information retrieval. These models have been shown to fail to capture some syntactic and semantic properties, and their linguistic understanding remains superficial. Predictive coding theory suggests that the human brain makes predictions over multiple timescales and levels of representation across the cortical hierarchy. Although previous studies have shown evidence of speech predictions in the brain, the nature of the predicted representations and their temporal scope remain largely unknown. Recently, researchers analyzed the brain signals of 304 individuals listening to short stories and found that enhancing deep language models with long-range and multi-level predictions improved how well the models' activations map onto brain activity.

The results of this study revealed a hierarchical organization of language predictions in the cortex. These findings align with predictive coding theory, which suggests that the brain makes predictions over multiple levels and timescales of representation. By incorporating these ideas into deep language models, researchers can help bridge the gap between human language processing and deep learning algorithms.

The study tested specific hypotheses of predictive coding theory by examining whether the cortical hierarchy predicts several levels of representation, spanning multiple timescales, beyond the short-range, word-level predictions that deep language algorithms usually learn. The researchers compared modern deep language models with the brain activity of 304 people listening to spoken stories and found that the activations of deep language algorithms supplemented with long-range and high-level predictions best describe brain activity.

There is a competition among technology companies to develop a humanoid robot that can perform various tasks, and one particular company, “Figure,” is at the forefront of this race.

A humanoid general-purpose robot is a robot that can mimic human actions and interact with the environment in a human-like way. This type of robot has the potential to perform various tasks, such as cooking, cleaning, and assisting people with disabilities.

The race to develop such robots is driven by the potential to revolutionize various industries, including manufacturing, healthcare, and retail. A successful humanoid robot could replace human workers in hazardous or repetitive tasks, increase productivity, and reduce costs.

The fact that “Figure” is leading this race suggests that the company has made significant progress in developing a humanoid general-purpose robot, possibly through new technology or software that gives it an advantage over its competitors.

Overall, this points to intense competition among tech companies to develop the next generation of robots, with “Figure” among the frontrunners.

Wondering if artificial intelligence will be taking your job anytime soon? We’re sure we speak for a lot of folks when we say: same.

Considering that AI is literally designed to model human capabilities and thus automate human tasks, it’s a fair question, and one that a group of professors from New York University (NYU), Princeton, and the University of Pennsylvania (UPenn) may have just helped shed some light on in a new paper, aptly titled “How Will Language Modelers like ChatGPT Affect Occupations and Industries?”

Though the paper has yet to be peer-reviewed, the results are fascinating, not to mention ominous — especially, of course, for the folks most at risk.

Ray Kurzweil — The Singularity IS NEAR — part 2! We’ll Reach IMMORTALITY by 2030

Get ready for an exciting journey into the future with Ray Kurzweil’s The Singularity IS NEAR — Part 2! Join us as we explore the awe-inspiring possibilities of what could be achieved before 2030, including the potential for humans to reach immortality. We’ll dive into the incredible technology that could help us reach this singularity and uncover what the implications of achieving immortality could be. Don’t miss out on this fascinating insight into the future of mankind!

In his book “The Singularity Is Near”, futurist and inventor Ray Kurzweil argues that we are rapidly approaching a point in time known as the singularity. This refers to the moment when artificial intelligence and other technologies will become so advanced that they surpass human intelligence and change the course of human evolution forever.

Kurzweil predicts that by 2030 we will reach a crucial milestone in our technological progress: immortality. He bases this prediction on the exponential growth he observes in fields such as genetics, nanotechnology, and robotics, which he believes will culminate in the creation of “nanobots”.

These tiny robots, according to Kurzweil, will be capable of repairing and enhancing our bodies at the cellular level, effectively making us immune to disease, aging, and death. Additionally, he believes that advances in brain-computer interfaces will allow us to upload our consciousness into digital form, effectively achieving immortality.

Kurzweil’s ideas have been met with both excitement and skepticism. Some people see the singularity as a moment of great potential, a time when we can overcome our biological limitations and create a better future for humanity. Others fear the singularity, believing that it could lead to the end of humanity as we know it.

Regardless of one’s opinion on the singularity, there is no denying that we are living in a time of rapid technological change. The future is uncertain, and no one can say exactly what the world will look like in 2030 or beyond. However, one thing is clear: the singularity, as envisioned by Kurzweil and others, represents a profound shift in human history, one likely to have far-reaching implications for generations to come.

0:00 Intro.

Are you ready to discover the potential technological developments that could shape the world we live in and get a glimpse of what life in the year 2100 might be like? As we approach the turn of the century, the world is expected to undergo significant changes and challenges. In this video, we will show you how the merging of humans and artificial intelligence can help solve any problem that comes our way and even predict the future.

Imagine being able to access the thoughts, memories, and emotions of billions of people through the hive mind concept. This will provide a unique way of experiencing other people’s lives and gaining new perspectives. Hyper-personalized virtual realities customized to fulfill every individual’s desire will be the norm. Users will enter a world where their every wish and fantasy constantly comes to life, maximizing their happiness, joy, and pleasure.

Education as we know it will change forever with the ability to download skills and knowledge directly into a person’s brain. People will be able to learn new skills and gain knowledge at unprecedented speeds, becoming experts in any field within seconds. The discovery and use of room-temperature superconductors will revolutionize many industries and transform the world’s infrastructure, especially transportation. By 2100, this technology will be a reality across numerous industries.

Stay with us until the end of the video, where the final development will really raise your eyebrows. The future is exciting, and it’s happening now. Don’t miss out on this incredible journey!

As always, thanks for stopping by Future Tech Enthusiast, where we are truly enthusiastic about the future of technology!

Check out our website at: https://futuretechenthusiast.com/