The goal of the Search for Extraterrestrial Intelligence (SETI) is to quantify the prevalence of technological life beyond Earth via its “technosignatures”. One theorized technosignature is a narrowband, Doppler-drifting radio signal.
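
To make the idea of a Doppler-drifting narrowband signal concrete: a transmitter with line-of-sight acceleration $a$ relative to the receiver has its received frequency change at a rate of roughly $\dot{\nu} \approx \nu_0 a / c$ in the non-relativistic limit. The sketch below illustrates only that relation. The function names and any example values are illustrative assumptions and are not taken from the search pipeline described here.

```python
import numpy as np

C_M_PER_S = 299_792_458.0  # speed of light in vacuum, m/s


def drift_rate_hz_per_s(rest_freq_hz, accel_m_per_s2):
    """Approximate Doppler drift rate (Hz/s) for a relative line-of-sight
    acceleration, using the non-relativistic relation nu_dot = nu_0 * a / c.
    Hypothetical helper; the sign convention is left to the caller."""
    return rest_freq_hz * accel_m_per_s2 / C_M_PER_S


def frequency_track(rest_freq_hz, accel_m_per_s2, times_s):
    """Frequency of a linearly drifting narrowband tone at each time sample."""
    times_s = np.asarray(times_s, dtype=float)
    rate = drift_rate_hz_per_s(rest_freq_hz, accel_m_per_s2)
    return rest_freq_hz + rate * times_s
```

In a time-frequency spectrogram, such a track appears as a thin diagonal line whose slope is the drift rate.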

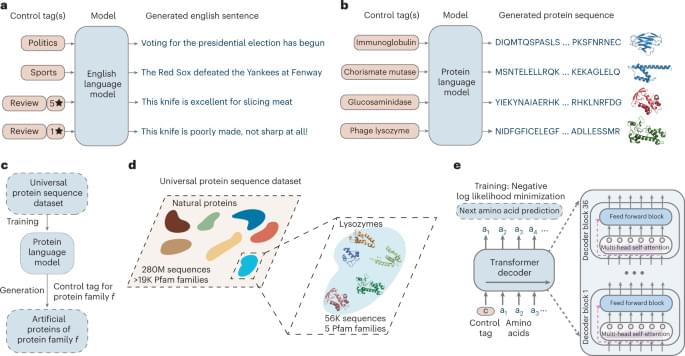

The principal challenge in conducting SETI in the radio domain is developing a generalized technique to reject human radio frequency interference (RFI). Here, we present the most comprehensive deep-learning-based technosignature search to date, returning 8 promising extraterrestrial intelligence (ETI) signals of interest for re-observation as part of the Breakthrough Listen initiative.

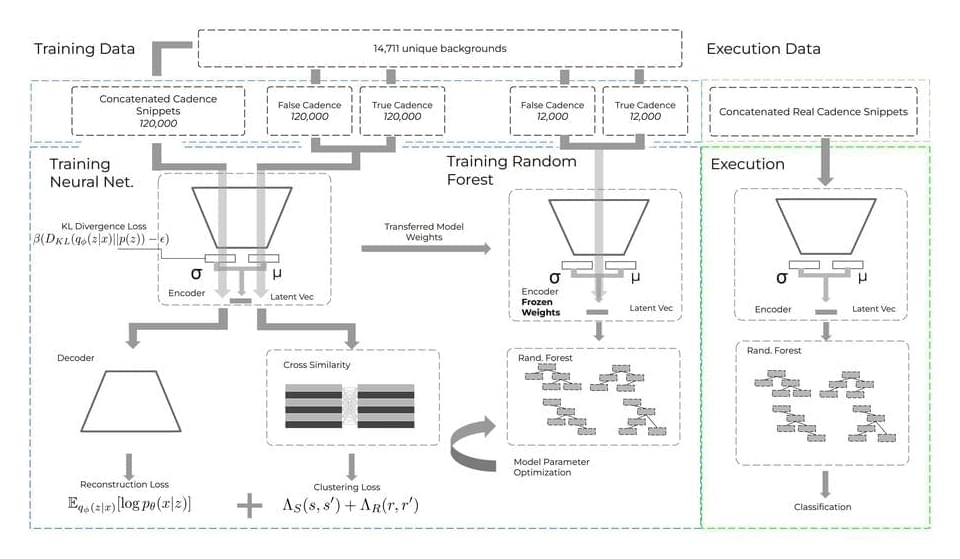

The search comprises 820 unique targets observed with the Robert C. Byrd Green Bank Telescope, totaling over 480 hr of on-sky data. We implement a novel beta-Convolutional Variational Autoencoder to identify technosignature candidates in a semi-unsupervised manner while keeping the false positive rate manageably low. This new approach presents itself as a leading solution in accelerating SETI and other transient research into the age of data-driven astronomy.
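
For readers unfamiliar with the "beta" in a beta-variational autoencoder, the following is a minimal sketch of the standard beta-VAE objective: a reconstruction term plus a KL-divergence term weighted by a factor beta. It is not the model used in this search. The squared-error reconstruction term, the default `beta=4.0`, and the function name `beta_vae_loss` are all assumptions made for illustration, and the encoder and decoder networks are omitted entirely.

```python
import numpy as np


def beta_vae_loss(x, x_recon, mu, log_var, beta=4.0):
    """Generic beta-VAE objective averaged over a batch (illustrative only).

    x, x_recon : arrays of shape (batch, ...), input and reconstruction
    mu, log_var: arrays of shape (batch, latent_dim), the encoder outputs
    beta       : weight on the KL term (hypothetical default)
    """
    # Reconstruction term: squared error summed over all non-batch axes,
    # then averaged over the batch (assumed choice of reconstruction loss).
    non_batch_axes = tuple(range(1, x.ndim))
    recon = np.mean(np.sum((x - x_recon) ** 2, axis=non_batch_axes))

    # KL divergence between N(mu, diag(exp(log_var))) and N(0, I),
    # summed over latent dimensions and averaged over the batch.
    kl = np.mean(-0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1))

    return recon + beta * kl
```

With `beta = 1` this reduces to the ordinary VAE objective, while larger values put more pressure on the latent code to match the unit-Gaussian prior.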