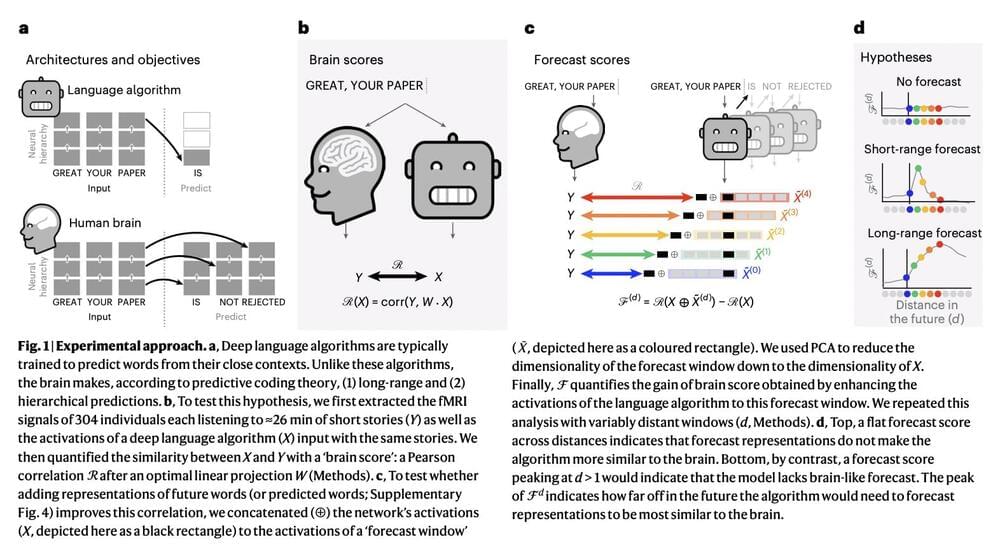

Deep learning has made significant strides in text generation, translation, and completion in recent years. Algorithms trained to predict words from their surrounding context have been instrumental in these advances. However, despite access to vast amounts of training data, deep language models still struggle with tasks such as long story generation, summarization, coherent dialogue, and information retrieval. They have been shown to capture syntactic and semantic properties poorly, and their linguistic understanding appears to be superficial. Predictive coding theory offers a possible explanation: it suggests that the human brain makes predictions over multiple timescales and levels of representation across the cortical hierarchy. Although earlier studies have found evidence of speech predictions in the brain, the nature of the predicted representations and their temporal scope remain largely unknown. Recently, researchers analyzed the brain signals of 304 individuals listening to short stories and found that enhancing deep language models with long-range and multi-level predictions improved how well the models map onto brain activity.

The results of this study revealed a hierarchical organization of language predictions in the cortex. These findings align with predictive coding theory, which holds that the brain makes predictions over multiple levels and timescales of representation. Incorporating these ideas into deep language models may help researchers narrow the gap between human language processing and deep learning algorithms.

The study tested specific hypotheses of predictive coding theory by examining whether the cortical hierarchy predicts several levels of representation, spanning multiple timescales, beyond the short-range, word-level predictions that deep language algorithms usually learn. The researchers compared modern deep language models with the brain activity of 304 people listening to spoken stories. They found that the activations of deep language algorithms supplemented with long-range and high-level predictions best describe brain activity.
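To make the idea of comparing model activations with brain activity more concrete, here is a minimal sketch of one common way such a comparison can be set up. The article does not describe the study's actual pipeline, so everything below is an assumption for illustration: a linear ridge regression maps features to recorded signals, the fit is scored by correlation on held-out samples, and the function names (`ridge_fit`, `brain_score`, `forecast_gain`) and the synthetic data are hypothetical.

```python
import numpy as np


def ridge_fit(X, Y, alpha=1.0):
    """Closed-form ridge regression weights mapping features X to signals Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def brain_score(X_train, Y_train, X_test, Y_test, alpha=1.0):
    """Mean Pearson correlation between predicted and held-out signals."""
    W = ridge_fit(X_train, Y_train, alpha)
    pred = X_test @ W
    pred_c = pred - pred.mean(axis=0)
    true_c = Y_test - Y_test.mean(axis=0)
    r = (pred_c * true_c).sum(axis=0) / (
        np.linalg.norm(pred_c, axis=0) * np.linalg.norm(true_c, axis=0)
    )
    return float(r.mean())


def forecast_gain(current, future, Y, n_train, alpha=1.0):
    """Change in score when features describing upcoming text are added."""
    base = brain_score(
        current[:n_train], Y[:n_train], current[n_train:], Y[n_train:], alpha
    )
    both = np.hstack([current, future])
    extended = brain_score(
        both[:n_train], Y[:n_train], both[n_train:], Y[n_train:], alpha
    )
    return extended - base


# Synthetic stand-ins (assumption): random "activations" and signals that
# depend on both the current and the future features, plus a little noise.
rng = np.random.default_rng(0)
n_samples, n_features, n_channels = 400, 8, 5
current = rng.standard_normal((n_samples, n_features))
future = rng.standard_normal((n_samples, n_features))
Y = (
    current @ rng.standard_normal((n_features, n_channels))
    + future @ rng.standard_normal((n_features, n_channels))
    + 0.1 * rng.standard_normal((n_samples, n_channels))
)

gain = forecast_gain(current, future, Y, n_train=300)
print(f"gain from adding future features: {gain:.3f}")
```

In this toy setup the signals are built to depend on the future features, so adding them raises the score. With real recordings, whether such a gain appears, and in which brain regions, is the empirical question.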