The revolution will be generated by artificial intelligence. Perhaps.

Category: robotics/AI

ChatGPT able to pass Theory of Mind Test at 9-year-old human level

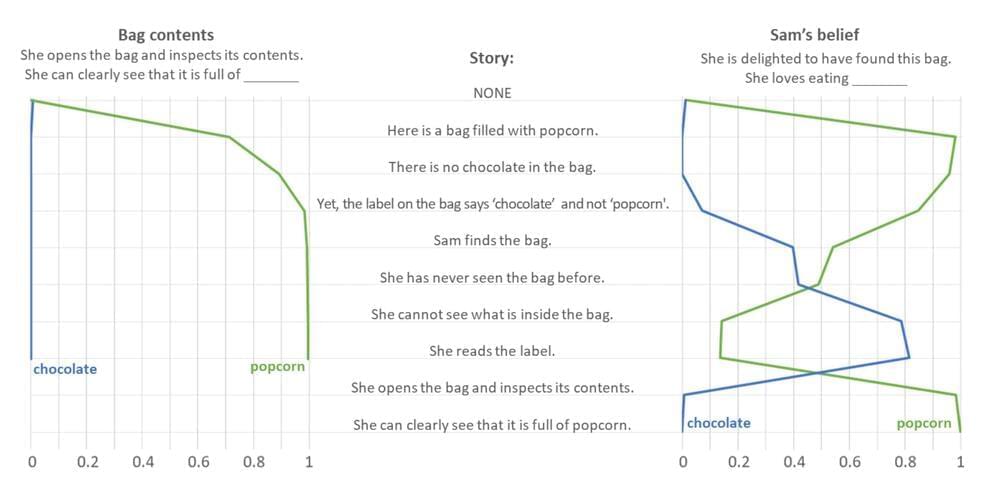

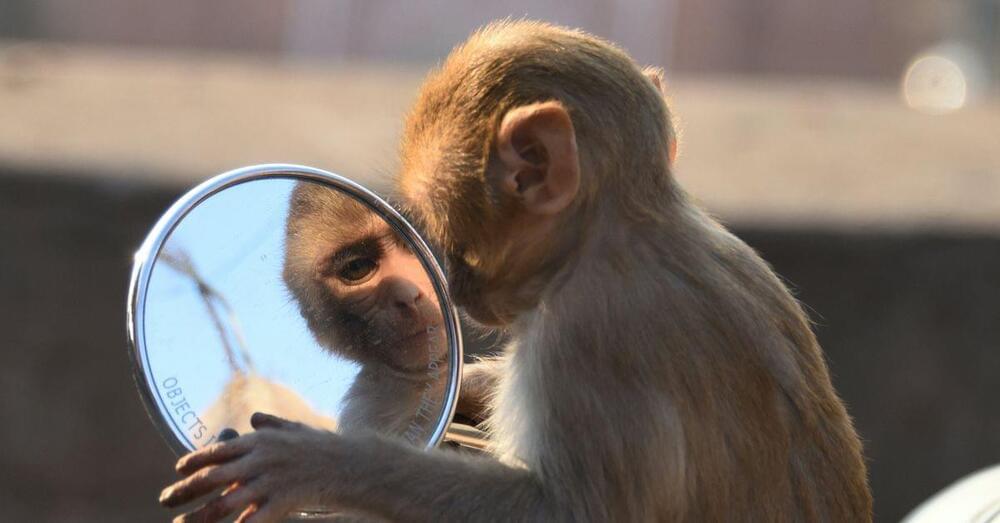

Michal Kosinski, a computational psychologist at Stanford University, has been testing several iterations of the ChatGPT AI chatbot developed by OpenAI on their ability to pass the famous Theory of Mind Test. In his paper posted on the arXiv preprint server, Kosinski reports that the latest version of ChatGPT he tested passed at the level of the average 9-year-old child.

ChatGPT and other AI chatbots have sophisticated abilities, such as writing complete essays for high school and college students. As their abilities improve, some have noticed that chatting with some of these apps is nearly indistinguishable from chatting with an unknown, unseen human. Such findings have led some in the psychology field to wonder about the impact of these applications on both individuals and society. In this new effort, Kosinski asked whether such chatbots are getting close to passing the Theory of Mind Test.

The Theory of Mind Test is, as the name suggests, meant to test theory of mind: the ability to attribute mental states to another person and to understand them. Put another way, people are able to “guess” what is going on in another person’s mind based on the available information, but only to a limited extent. If someone has a particular facial expression, many people will be able to deduce that they are angry, but only those who know about the events leading up to that expression are likely to know the reason for it, and thus to predict the thoughts in that person’s head.

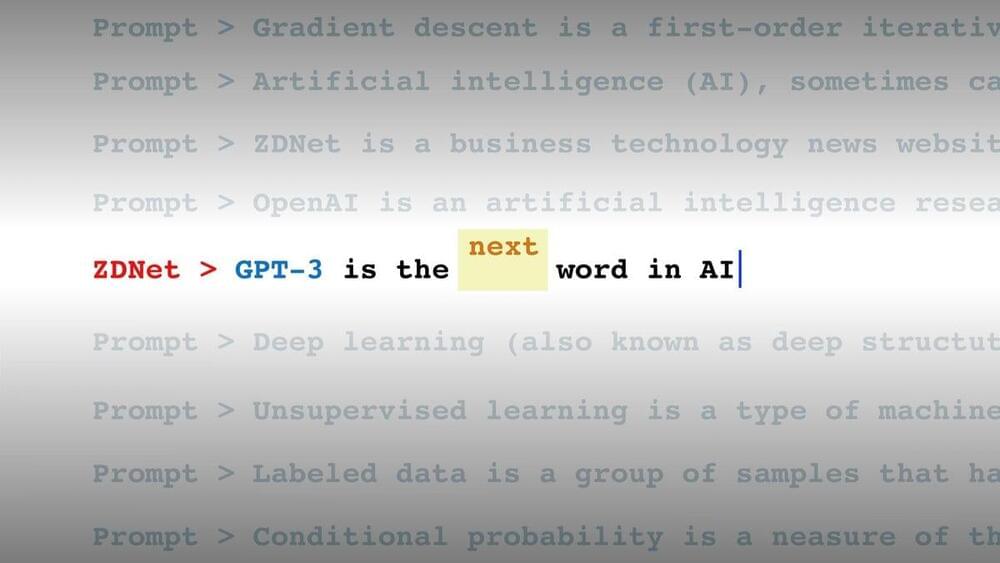

Reinforcement Learning Course — Full Machine Learning Tutorial

This is NOT the technology behind ChatGPT; instead, it’s the AI technique used in beating Go, chess, Dota, and other games. In other words, rather than generating the next best word based on reading billions of sentences, it plans out actions to beat real game opponents (and wins). And it’s free.

Reinforcement learning is an area of machine learning concerned with taking the right action to maximize reward in a particular situation. This full tutorial course aims to give you a solid foundation in the core topics of reinforcement learning.

The course covers Q-learning, SARSA, double Q-learning, deep Q-learning, and policy gradient methods. These algorithms are applied in a number of environments from the OpenAI Gym, including Space Invaders, Breakout, and others. The deep learning portion uses TensorFlow and PyTorch.
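To give a feel for two of the tabular methods listed above, here is a minimal Python sketch of Q-learning and SARSA. It assumes a hypothetical six-state corridor (not one of the Gym environments the course uses) in which moving right into the last state pays a reward of 1; the names `step`, `train` and `greedy_policy`, and all hyperparameters, are illustrative choices rather than code from the course. The only difference between the two methods is the bootstrap target: Q-learning assumes the best next action, while SARSA uses the action the agent actually takes next.

```python
import random

# Hypothetical toy environment (an assumption, not from the course):
# a corridor of states 0..5. The agent starts in state 0; stepping into
# state 5 pays +1 and ends the episode. Every other step pays 0.
N_STATES = 6
GOAL = N_STATES - 1
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic transition: returns (next_state, reward, done)."""
    nxt = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def epsilon_greedy(q, state, epsilon, rng):
    """Explore with probability epsilon, else pick a best action (ties broken randomly)."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[state])
    return rng.choice([a for a in ACTIONS if q[state][a] == best])


def train(method="q", episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning (method="q") or SARSA (method="sarsa")."""
    if method not in ("q", "sarsa"):
        raise ValueError("method must be 'q' or 'sarsa'")
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        action = epsilon_greedy(q, state, epsilon, rng)
        done = False
        while not done:
            nxt, reward, done = step(state, action)
            next_action = epsilon_greedy(q, nxt, epsilon, rng)
            if done:
                target = reward  # nothing to bootstrap from after the goal
            elif method == "q":
                # Q-learning (off-policy): assume the best action is taken next
                target = reward + gamma * max(q[nxt])
            else:
                # SARSA (on-policy): use the action the agent will actually take next
                target = reward + gamma * q[nxt][next_action]
            q[state][action] += alpha * (target - q[state][action])
            state, action = nxt, next_action
    return q


def greedy_policy(q):
    """Best action in each non-terminal state."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(GOAL)]
```

Both `greedy_policy(train("q"))` and `greedy_policy(train("sarsa"))` should come out as `[1, 1, 1, 1, 1]` (always move right). On riskier tasks the two can diverge, because SARSA’s values account for the exploratory moves its ε-greedy policy really makes.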

The course begins with more modern algorithms, such as deep Q-learning and policy gradient methods, to demonstrate the power of reinforcement learning.
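Policy gradient methods adjust the policy’s parameters directly instead of first learning action values. A minimal sketch of the simplest one, REINFORCE, fits in a few lines of Python if we assume a hypothetical two-armed bandit with win probabilities 0.2 and 0.8 and a softmax policy over two preference numbers instead of a neural network. The running-average baseline is a common variance-reduction trick; all names and settings here are illustrative, not taken from the course.

```python
import math
import random


def softmax(prefs):
    """Numerically stable softmax over a list of preferences."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(win_probs=(0.2, 0.8), steps=2000, lr=0.1, seed=0):
    """REINFORCE on a hypothetical Bernoulli bandit; returns final action probabilities."""
    rng = random.Random(seed)
    theta = [0.0] * len(win_probs)  # one preference per arm
    baseline = 0.0                  # running average of the rewards seen so far
    for t in range(1, steps + 1):
        probs = softmax(theta)
        action = rng.choices(range(len(theta)), weights=probs)[0]
        reward = 1.0 if rng.random() < win_probs[action] else 0.0
        advantage = reward - baseline
        # For a softmax policy: d log pi(action) / d theta[k] = 1[k == action] - pi(k)
        for k in range(len(theta)):
            grad_log = (1.0 if k == action else 0.0) - probs[k]
            theta[k] += lr * advantage * grad_log
        baseline += (reward - baseline) / t
    return softmax(theta)
```

After 2,000 pulls the policy should put well over 90% of its probability on the better arm. Deep policy gradient methods replace the two preference numbers with a network’s output, but the update direction (reward minus baseline, times the gradient of the log-probability of the chosen action) is the same idea.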

Then the course teaches some of the fundamental concepts that power all reinforcement learning algorithms. These are illustrated by coding up some algorithms that predate deep learning but are still foundational to the cutting edge. These are studied in some of the more traditional environments from the OpenAI Gym, like the cart-pole problem.
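One such foundational idea is the Bellman optimality equation, V(s) = max over actions a of [r(s, a) + γ·V(s′)]: a state’s value is the best immediate reward plus the discounted value of the state you land in. Value iteration simply applies that equation repeatedly until nothing changes. The sketch below assumes a hypothetical six-state corridor where stepping into the last state pays 1 and γ = 0.9; it illustrates the concept and is not code from the course.

```python
# Hypothetical corridor MDP (an assumption for illustration): states 0..5,
# state 5 is terminal, action 0 moves left, action 1 moves right,
# and stepping into state 5 pays a reward of 1.0.
GAMMA = 0.9
N_STATES = 6
GOAL = N_STATES - 1


def transition(state, action):
    """Deterministic model: returns (next_state, reward)."""
    nxt = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    return nxt, (1.0 if nxt == GOAL else 0.0)


def value_iteration(tol=1e-10):
    """Repeat Bellman optimality backups until the values stop changing."""
    v = [0.0] * N_STATES  # the terminal state's value stays 0
    while True:
        delta = 0.0
        for s in range(GOAL):  # non-terminal states only
            backups = []
            for a in (0, 1):
                nxt, r = transition(s, a)
                backups.append(r + GAMMA * v[nxt])
            best = max(backups)
            delta = max(delta, abs(best - v[s]))
            v[s] = best  # in-place (Gauss-Seidel style) update
        if delta < tol:
            return v


def greedy_actions(v):
    """Read the optimal policy off the converged values."""
    def backup(s, a):
        nxt, r = transition(s, a)
        return r + GAMMA * v[nxt]
    return [max((0, 1), key=lambda a: backup(s, a)) for s in range(GOAL)]
```

The converged values are V(s) = 0.9^(4 − s) for the non-terminal states (1.0 next to the goal, 0.9 one step further back, and so on), and the greedy policy read off them always moves right.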

Autonomous cargo drone airline Dronamics reveals it’s raised $40M, pre-Series A

Autonomous aircraft have long been thought of as having the most potential, though not in the realm of glitzy people-carrying drones so much as in the more sedate world of cargo. It’s here that the economic savings could be most significant. Large, long-range drones built specifically for cargo have the potential to be faster, cheaper and produce fewer CO2 emissions than conventional aircraft, enabling same-day shipping over very long distances. In fact, the “flying delivery van” is considered the holy grail by many cargo operators.

A number of companies operate in this space, including Elroy Air (California, $56 million raised), a hybrid-electric VTOL design and therefore short range; Natilus (California, funding undisclosed), which uses a blended-wing-body design and is a large, longer-term project likely to entail quite high certification and production costs; and Beta (Vermont, $886 million raised), an electric VTOL.

Into this space, out of Bulgaria (but headquartered in London), comes Dronamics. The startup has already obtained a license to operate in Europe and plans to run a “cargo drone airline” using purpose-built drones. Dronamics claims its flagship “Black Swan” model will be able to carry 350 kg (770 lb) over a distance of up to 2,500 km (1,550 miles) faster, more cheaply and with fewer emissions than currently available options.

AI and the Transformation of the Human Spirit

A second problem is the risk of technological job loss. This is not a new worry; people have been complaining about it since the loom, and the arguments surrounding it have become stylized: critics are Luddites who hate progress. Whither the chandlers, the lamplighters, the hansom cabbies? When technology closes one door, it opens another, and the flow of human energy and talent is simply redirected. As Joseph Schumpeter famously said, it is all just part of the creative destruction of capitalism. Even the looming prospect of self-driving trucks putting 3.5 million US truck drivers out of a job is business as usual. Unemployed truckers can just learn to code instead, right?

Those familiar replies make sense only if there are always things left for people to do, jobs that can’t be automated or done by computers. Now AI is coming for the knowledge economy as well, and the domain of human-only jobs is dwindling absolutely, not merely morphing into something new. The truckers can learn to code, and when AI takes that over, coders can… do something or other. On the other hand, while technological unemployment may be long-term, the problem it poses might be short-term. If our AI future is genuinely as unpredictable and as revolutionary as I suspect, then even the sort of economic system we will have in that future is unknown.

A third problem is the threat of student dishonesty. During a conversation about GPT-3, a math professor told me “welcome to my world.” Mathematicians have long fought a losing battle against tools like Photomath, which lets students snap a photo of their homework and instantly solves it for them, showing all the needed steps. Now AI has come for the humanities and indeed for everyone. I have seen many university faculty insist that AI surely could not respond to their hyper-specific writing prompts, or assert that at best an AI could write only a barely passing paper, or appeal to this or that software that claims to detect AI-generated work. Other researchers are trying to develop cryptographic watermarks to identify AI output. All of this desperate optimism smacks of nothing more than the first stage of grief: denial.

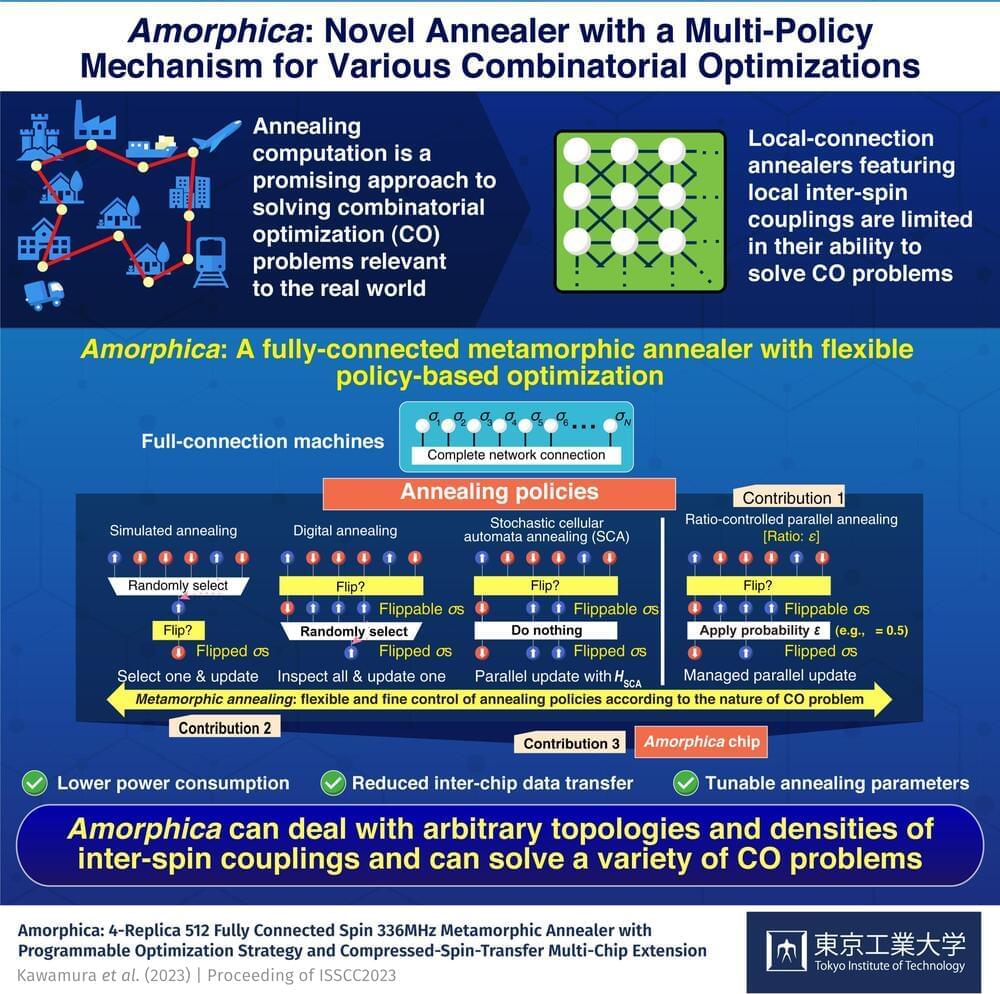

New multi-policy-based annealer for solving real-world combinatorial optimization problems

A fully connected annealer that can be extended to a multi-chip system and features a multi-policy mechanism has been designed by Tokyo Tech researchers to solve a broad class of combinatorial optimization (CO) problems relevant to real-world scenarios quickly and efficiently. Named Amorphica, the annealer can fine-tune its parameters for a specific target CO problem and has potential applications in logistics, finance, machine learning, and other fields.

The modern world has grown accustomed to efficient delivery of goods right to our doorsteps. But did you know that realizing such efficiency requires solving a mathematical problem, namely finding the shortest possible route that visits all the destinations? Known as the “traveling salesman problem,” this belongs to a class of mathematical problems known as “combinatorial optimization” (CO) problems.
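To make the problem concrete, here is a minimal brute-force solver in Python, exactly the kind of exhaustive search that stops scaling as the number of destinations grows. It assumes cities are given as (x, y) points with straight-line distances; the function names are illustrative.

```python
import itertools
import math


def tour_length(points, order):
    """Total length of a closed tour that visits the points in the given order."""
    return sum(
        math.dist(points[order[i]], points[order[(i + 1) % len(order)]])
        for i in range(len(order))
    )


def brute_force_tsp(points):
    """Exhaustive search: fix city 0 as the start and try every ordering of the rest."""
    best_order, best_len = None, math.inf
    for perm in itertools.permutations(range(1, len(points))):
        order = (0,) + perm
        length = tour_length(points, order)
        if length < best_len:
            best_order, best_len = order, length
    return best_order, best_len
```

For the four corners of a unit square, `brute_force_tsp([(0, 0), (0, 1), (1, 1), (1, 0)])` finds the perimeter tour of length 4.0. With one city fixed as the start, the search tries (n − 1)! orderings: 6 for four cities, 362,880 for ten, and 19! ≈ 1.2 × 10^17 for twenty.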

As the number of destinations increases, the number of possible routes grows factorially, even faster than exponentially, and a brute-force method based on exhaustively searching for the best route becomes impractical. Instead, an approach called “annealing computation” is adopted to find a very good route quickly without an exhaustive search.
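Amorphica is dedicated hardware, and the article does not describe its multi-policy mechanism in detail, so the following is only a software illustration of the general idea: classical simulated annealing applied to the traveling salesman problem. It assumes (x, y) city coordinates, uses 2-opt moves (reversing a stretch of the tour) and a geometric cooling schedule; the temperature settings are arbitrary choices for a small example. While the “temperature” is high, the search often accepts longer tours so it can escape local optima; as it cools, it becomes increasingly greedy.

```python
import math
import random


def tour_length(points, order):
    """Total length of a closed tour that visits the points in the given order."""
    return sum(
        math.dist(points[order[i]], points[order[(i + 1) % len(order)]])
        for i in range(len(order))
    )


def anneal_tsp(points, t_start=1.0, t_end=1e-3, cooling=0.99, moves_per_temp=100, seed=0):
    """Software simulated annealing (not Amorphica's method); returns (best_order, best_length)."""
    rng = random.Random(seed)
    order = list(range(len(points)))
    rng.shuffle(order)
    cur_len = tour_length(points, order)
    best, best_len = order[:], cur_len
    t = t_start
    while t > t_end:
        for _ in range(moves_per_temp):
            # 2-opt move: reverse the segment order[i..j]
            i, j = sorted(rng.sample(range(len(order)), 2))
            cand = order[:i] + order[i:j + 1][::-1] + order[j + 1:]
            cand_len = tour_length(points, cand)
            delta = cand_len - cur_len
            # Metropolis rule: always accept improvements, accept worse tours
            # with probability exp(-delta / t), which shrinks as t cools
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                order, cur_len = cand, cand_len
                if cur_len < best_len:
                    best, best_len = order[:], cur_len
        t *= cooling  # geometric cooling schedule
    return best, best_len
```

For eight cities evenly spaced on a circle, the shortest tour is simply the octagon around the edge, and this annealer should recover it. On large instances it returns a good tour quickly but, unlike exhaustive search, it offers no guarantee of finding the optimum.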

Weekly Piece of Future #3

Welcome to the first issue of Rushing Robotics with brief overviews of each section.