What is Prompt Engineering?

Prompt engineering is a concept in artificial intelligence, particularly natural language processing (NLP). In prompt engineering, the task description is included explicitly in the input, for example as a question, instead of being provided implicitly. Typically, prompt engineering involves converting one or more tasks into a prompt-based dataset and training a language model on it, an approach known as “prompt-based learning” or simply “prompt learning.” A related approach, known as “prefix-tuning” or “prompt tuning,” starts from a large, “frozen” pretrained language model and learns only the representation of the prompt.
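To make the idea of putting the task description in the input concrete, here is a minimal sketch of turning a small labeled dataset into a prompt-based one. The sentiment task, the template wording, and the names `TEMPLATE`, `to_prompt`, and `to_prompt_dataset` are all assumptions chosen for illustration; they do not come from any particular library or paper.

```python
# Hypothetical template: the task is stated in the input as a question.
TEMPLATE = (
    "Review: {text}\n"
    "Question: Is this review positive or negative?\n"
    "Answer:"
)


def to_prompt(text: str) -> str:
    """Embed the task description in the input itself."""
    return TEMPLATE.format(text=text.strip())


def to_prompt_dataset(records):
    """Convert (text, label) pairs into (prompt, target) pairs."""
    return [(to_prompt(text), " " + label) for text, label in records]


# Illustrative records, invented for this example.
records = [
    ("Great battery life.", "positive"),
    ("Stopped working after a week.", "negative"),
]

dataset = to_prompt_dataset(records)
for prompt, target in dataset:
    print(prompt + target)
    print("---")
```

Each resulting pair can be used as an input and target for prompt-based learning, or the prompt alone can be sent to a pretrained model that is expected to complete the answer.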

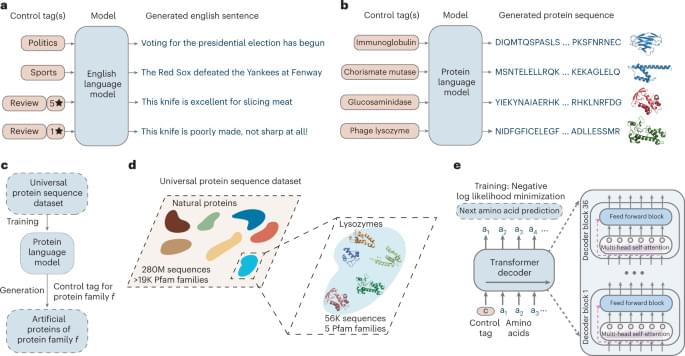

The GPT-2 and GPT-3 language models, and later the ChatGPT tool, were important steps in the development of prompt engineering. In 2021, multitask prompt engineering using several NLP datasets showed strong performance on novel tasks. Few-shot prompts whose examples include a chain of thought elicit clearer reasoning from language models. In the zero-shot setting, adding text that encourages a chain of reasoning, such as “Let’s think step by step,” may improve a language model’s performance on multi-step reasoning tasks. The release of various open-source notebooks and community-led image synthesis projects helped make these tools widely accessible.
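The two chain-of-thought styles mentioned above can be sketched as plain string construction. This is only an illustration under stated assumptions: the `Q:`/`A:` layout, the helper names `zero_shot_cot` and `few_shot_cot`, and the arithmetic example are hypothetical, and no model is called.

```python
COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str) -> str:
    """Zero-shot prompt with a phrase that encourages step-by-step reasoning."""
    return f"Q: {question.strip()}\nA: {COT_TRIGGER}"


def few_shot_cot(examples, question: str) -> str:
    """Few-shot prompt whose worked examples spell out their reasoning.

    `examples` is a list of (question, reasoning, answer) triples.
    """
    parts = [f"Q: {q}\nA: {r} The answer is {a}." for q, r, a in examples]
    parts.append(f"Q: {question.strip()}\nA:")  # left open for the model
    return "\n\n".join(parts)


# Illustrative worked example, invented for this sketch.
examples = [
    (
        "Tom has 3 apples and buys 2 more. How many apples does he have?",
        "Tom starts with 3 apples. 3 + 2 = 5.",
        "5",
    ),
]

zs = zero_shot_cot("What is 12 * 3 + 4?")
fs = few_shot_cot(examples, "What is 12 * 3 + 4?")
print(zs)
print()
print(fs)
```

The zero-shot variant needs no worked examples, while the few-shot variant trades a longer prompt for explicit demonstrations of the reasoning format.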