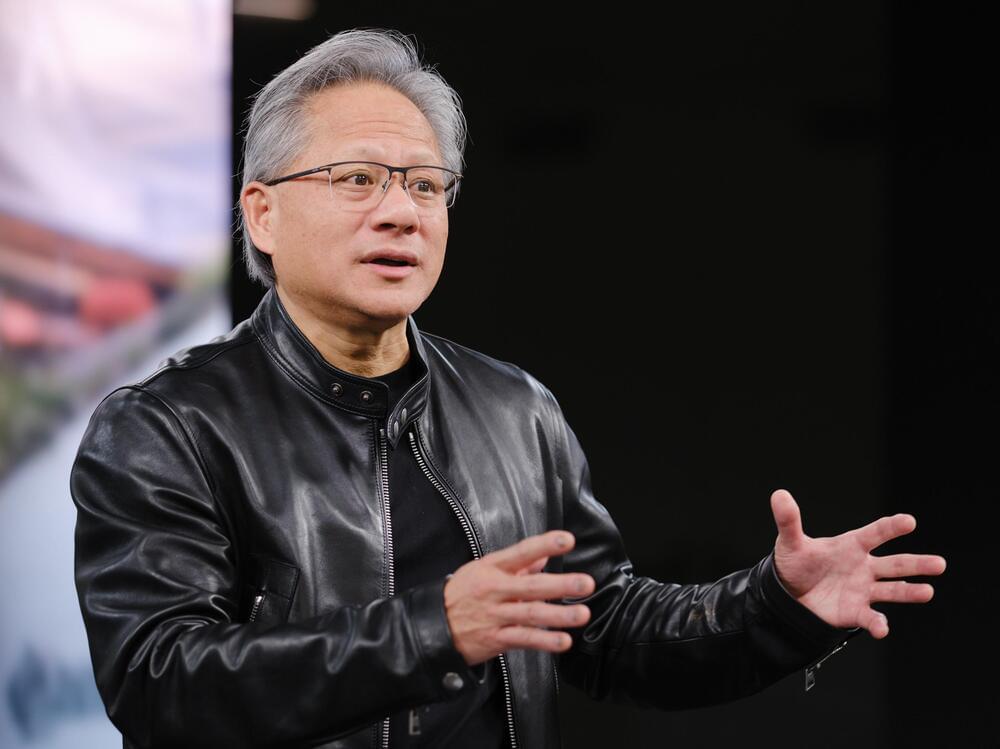

This year’s NVIDIA GPU Technology Conference (GTC) could not have come at a more auspicious time for the company. The hottest topic in technology today is the Artificial Intelligence (AI) behind ChatGPT and other Large Language Models (LLMs), along with the generative AI applications built on them. Underlying all this new AI technology are NVIDIA GPUs. NVIDIA’s CEO Jensen Huang doubled down on support for LLMs and the future of the generative AI built on them. He’s calling it “the iPhone moment for AI.” Using LLMs, AI computers can learn the languages of people, programs, images, or chemistry. Drawing on that large knowledge base, they can respond to a query by creating new, unique works: this is generative AI.

Jumbo-sized LLMs are taking this capability to new levels, specifically the latest GPT-4, which was introduced just prior to GTC. Training these complex models takes thousands of GPUs, and applying the trained models to specific problems requires still more GPUs for inference. NVIDIA’s latest Hopper GPU, the H100, is known for training, but it can also be divided into as many as seven instances, a feature NVIDIA calls MIG (Multi-Instance GPU), so that multiple inference models can run on the same GPU. It’s in this inference mode that the GPU uses trained LLMs to transform queries into new outputs.

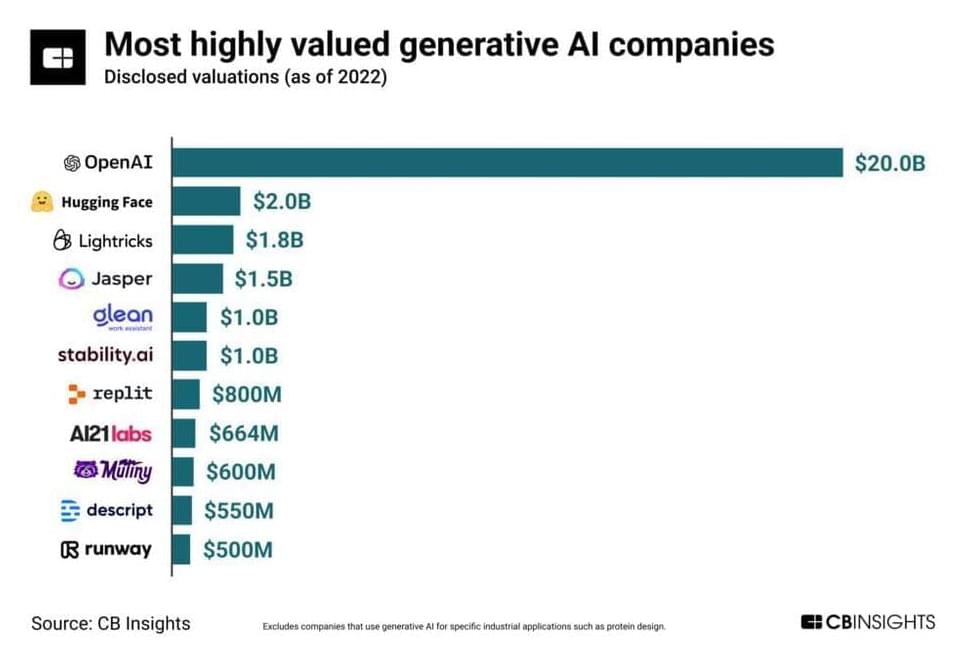

NVIDIA is using its leadership position to build new business opportunities as a full-stack AI supplier, offering chips, software, accelerator cards, systems, and even services. The company is opening up its services business in areas such as biology. Its pricing might be based on usage time, or on the value of the end product built with its services.