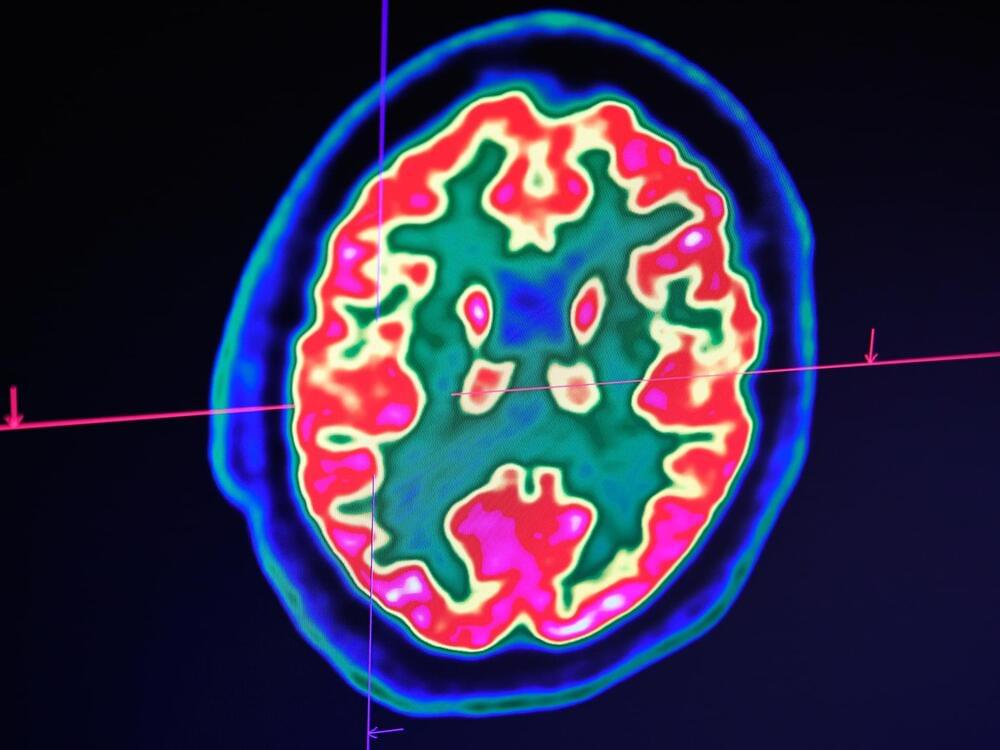

Tokyo, Japan – Yu Takagi could not believe his eyes. Sitting alone at his desk on a Saturday afternoon in September, he watched in awe as artificial intelligence decoded a subject’s brain activity to create images of what the subject was seeing on a screen.

“I still remember when I saw the first [AI-generated] images,” Takagi, a 34-year-old neuroscientist and assistant professor at Osaka University, told Al Jazeera.

“I went into the bathroom and looked at myself in the mirror and saw my face, and thought, ‘Okay, that’s normal. Maybe I’m not going crazy.’”