A commercial spaceflight in May will take a Carnegie Mellon-designed rover, named Iris, to the lunar surface.

The real move at play here, by the so-called AI ethics clowns, is a complete shutdown of AI and AI research. That IS their end goal, their endgame. See if they can really turn it off for 6 months. Ha! OK, how about 2 more years? Etc., etc.

Ya publicly tipped your hand.

An open letter published today calls for “all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.”

This 6-month moratorium would be better than no moratorium. I have respect for everyone who stepped up and signed it. It’s an improvement on the margin.

I refrained from signing because I think the letter is understating the seriousness of the situation and asking for too little to solve it.

The non-profit said powerful AI systems should only be developed “once we are confident that their effects will be positive and their risks will be manageable.” It cited potential risks to humanity and society, including the spread of misinformation and widespread automation of jobs.

The letter urged AI companies to create and implement a set of shared safety protocols for AI development, which would be overseen by independent experts.

Apple cofounder Steve Wozniak, Stability AI CEO Emad Mostaque, researchers at Alphabet’s AI lab DeepMind, and notable AI professors have also signed the letter. At the time of publication, OpenAI CEO Sam Altman had not added his signature.

OAKLAND, California, March 28 (Reuters) — Artificial intelligence chip startup Cerebras Systems on Tuesday said it released open source ChatGPT-like models for the research and business community to use for free in an effort to foster more collaboration.

Silicon Valley-based Cerebras released seven models, all trained on its AI supercomputer called Andromeda, ranging from a smaller 111-million-parameter language model to a larger 13-billion-parameter model.

“There is a big movement to close what has been open sourced in AI…it’s not surprising as there’s now huge money in it,” said Andrew Feldman, founder and CEO of Cerebras. “The excitement in the community, the progress we’ve made, has been in large part because it’s been so open.”

Energy production in nature is the responsibility of mitochondria and chloroplasts, and is crucial for fabricating sustainable, synthetic cells in the lab. Mitochondria are “the powerhouses of the cell,” but are also one of the most complex intracellular components to replicate artificially.

In Biophysics Reviews, by AIP Publishing, researchers from Sogang University in South Korea and the Harbin Institute of Technology in China identified the most promising advancements and greatest challenges of artificial mitochondria and chloroplasts.

“If scientists can create artificial mitochondria and chloroplasts, we could potentially develop synthetic cells that can generate energy and synthesize molecules autonomously. This would pave the way for the creation of entirely new organisms or biomaterials,” author Kwanwoo Shin said.

In this week’s episode of The Futurists, cognitive scientist and AI researcher Ben Goertzel joins the hosts to talk about the likely path to Artificial General Intelligence. Goertzel is the founder of SingularityNet and Chairman of the OpenCog Foundation, and previously, as Chief Scientist at Hanson Robotics, he helped create Sophia the robot. Goertzel is on a different level, so get ready to step up. Follow @bengoertzel.

ABOUT SHOW

Subscribe and listen to TheFuturists.com Podcast, where hosts Brett King and Robert Tercek interview the world’s foremost super-forecasters, thought leaders, technologists, entrepreneurs and futurists building the world of tomorrow. Together we will explore how AI, bioscience, energy, food and agriculture, computing, the metaverse, the space industry, crypto, resource management, supply chain and climate will radically reshape our world over the next 100 years. Join us on The Futurists and we will see you in the future!

HOSTS

https://thefuturists.com/info/hosts-brett-king-robert-tercek/

https://twitter.com/BrettKing & http://brettking.com/

https://twitter.com/Superplex & https://roberttercek.com/

SUBSCRIBE & LISTEN

https://thefuturists.com/info/listen-here/

https://podcasts.apple.com/us/podcast/the-futurists/id1615809726

https://blubrry.com/thefuturists/

FOLLOW & ENGAGE

https://www.instagram.com/Futuristpodcast/

https://twitter.com/Futuristpodcast

https://soundcloud.com/thefuturistspodcast

https://www.youtube.com/channel/UCXLNDsNWVd32caN1doB2PXQ

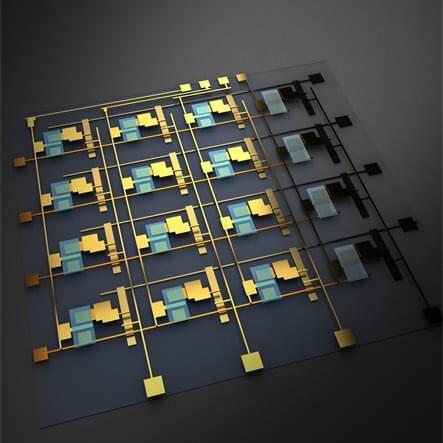

The transformative changes brought by deep learning and artificial intelligence are accompanied by immense costs. For example, OpenAI’s ChatGPT algorithm costs at least $100,000 every day to operate. This could be reduced with accelerators, or computer hardware designed to efficiently perform the specific operations of deep learning. However, such a device is only viable if it can be integrated with mainstream silicon-based computing hardware on the material level.

This integration requirement had prevented the implementation of one highly promising deep learning accelerator, arrays of electrochemical random-access memory (ECRAM), until a research team at the University of Illinois Urbana-Champaign achieved the first material-level integration of ECRAMs onto silicon transistors. The researchers, led by graduate student Jinsong Cui and professor Qing Cao of the Department of Materials Science & Engineering, recently reported in Nature Electronics an ECRAM device designed and fabricated with materials that can be deposited directly onto silicon during fabrication, realizing the first practical ECRAM-based deep learning accelerator.

“Other ECRAM devices have been made with the many difficult-to-obtain properties needed for deep learning accelerators, but ours is the first to achieve all these properties and be integrated with silicon without compatibility issues,” Cao said. “This was the last major barrier to the technology’s widespread use.”

One can only hope.

A former Google engineer has just predicted that humans will achieve immortality in eight years, something more than likely considering that 86% of his 147 predictions have been correct.

Ray Kurzweil appeared on the YouTube channel Adagio to discuss the expansion of genetics, nanotechnology and robotics, which he believes will lead to age-reversing ‘nanobots’.

These tiny robots will repair damaged cells and tissues that deteriorate as the body ages, making people immune to certain diseases such as cancer.

I dunno if anyone has seen this. As a former Linux user, I’ve been an Nvidia fan for a long time and now they’ve gone on from games and Bitcoin mining. Sorry if this is a double post. I’m on my way out the door for my mom’s Dr appointment. I always worry I’ll double post by accident.

NVIDIA’s Jensen Huang just announced a set of revolutionary new artificial intelligence models and partnerships at GTC 2023. NVIDIA has always been one of the most important companies in the AI industry, if not the most important, creating the most powerful AI hardware to date. That hardware includes the A100 and the upcoming H100 GPUs, which are powering GPT-4 from OpenAI, Midjourney and everyone else. This gives NVIDIA a lot of power to jump into the AI race itself and could allow it to surpass the current best AI models in large language models and image generation, with software like Omniverse and hardware like the DGX H100 supercomputer and Grace CPUs.

–

TIMESTAMPS:

00:00 NVIDIA enters the AI Industry.

01:43 GTC 2023 Announcements.

04:48 How NVIDIA Beat Every Competitor at AI

07:40 Running High End AI Locally.

10:20 What is NVIDIA’s Future?

13:05 Accelerating Future.

–

Technology is improving at an almost exponential rate. Robots are learning to walk and think, brain-computer interfaces are becoming commonplace, new biotechnology is allowing for age reversal, and artificial intelligence is starting to surpass humans in many areas. Follow FutureNET to stay up to date on what is happening in the world of futuristic technology and to see documentaries about humanity’s past achievements.

–

#nvidia #ai #gtc

The latest breakthroughs in artificial intelligence could lead to the automation of a quarter of the work done in the US and eurozone, according to research by Goldman Sachs.

The investment bank said on Monday that “generative” AI systems such as ChatGPT, which can create content that is indistinguishable from human output, could spark a productivity boom that would eventually raise annual global gross domestic product by 7 percent over a 10-year period.

But if the technology lived up to its promise, it would also bring “significant disruption” to the labor market, exposing the equivalent of 300 million full-time workers across big economies to automation, according to Joseph Briggs and Devesh Kodnani, the paper’s authors. Lawyers and administrative staff would be among those at greatest risk of becoming redundant.