Ways to think about generative AI as a co-creator.

Suppose a large inbound asteroid were discovered, and we learned that half of all astronomers gave it at least a 10% chance of causing human extinction, just as a similar asteroid exterminated the dinosaurs about 66 million years ago. Since we have such a long history of thinking about this threat and what to do about it, from scientific conferences to Hollywood blockbusters, you might expect humanity to shift into high gear with a deflection mission to steer it in a safer direction.

Sadly, I now feel that we’re living the movie “Don’t Look Up” for another existential threat: unaligned superintelligence. We may soon have to share our planet with more intelligent “minds” that care less about us than we cared about mammoths. A recent survey showed that half of AI researchers give AI at least a 10% chance of causing human extinction. Since we have such a long history of thinking about this threat and what to do about it, from scientific conferences to Hollywood blockbusters, you might expect that humanity would shift into high gear with a mission to steer AI in a safer direction than out-of-control superintelligence. Think again: instead, the most influential responses have been a combination of denial, mockery, and resignation so darkly comical that it deserves an Oscar.



~“May you live in interesting times”~

Having the blessing and the curse of working in the field of cybersecurity, I often get asked about my thoughts on how that intersects with another popular topic — artificial intelligence (AI). Given the latest headline-grabbing developments in generative AI tools, such as OpenAI’s ChatGPT, Microsoft’s Sydney, and image generation tools like Dall-E and Midjourney, it is no surprise that AI has catapulted into the public’s awareness.

Created using Paragraph ai.

Time travel has long been a popular theme in movies, but scientists believe that the concept of time teleportation is unlikely in reality. However, they do not dismiss the possibility of time travel altogether. The laws of physics suggest that time travel may be possible, but the details are complex.

Physicists explain that traveling to the near future is relatively simple, as we are all doing it right now at a rate of one second per second. Additionally, Einstein’s special theory of relativity states that the speed at which we move affects the flow of time. In other words, the faster we travel, the slower time passes. Furthermore, Einstein’s general theory of relativity suggests that gravity also impacts the flow of time. The stronger the nearby gravity, the slower time goes.
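As a rough illustration of the two effects described above, the sketch below uses the standard textbook formulas: the special-relativistic factor sqrt(1 - v²/c²) for a moving clock, and the Schwarzschild factor sqrt(1 - 2GM/(rc²)) for a clock at rest near a non-rotating spherical mass. The function names `velocity_dilation` and `gravity_dilation` are illustrative choices, not taken from the article, and the gravitational formula assumes an idealized, non-rotating body.

```python
import math

C = 299_792_458.0  # speed of light in m/s (exact by SI definition)
G = 6.67430e-11    # gravitational constant in m^3 kg^-1 s^-2 (CODATA 2018)


def velocity_dilation(v: float) -> float:
    """Seconds elapsed on a clock moving at speed v (m/s)
    per second measured by a stationary observer."""
    if not 0.0 <= v < C:
        raise ValueError("speed must satisfy 0 <= v < c")
    return math.sqrt(1.0 - (v / C) ** 2)


def gravity_dilation(mass_kg: float, radius_m: float) -> float:
    """Seconds elapsed on a clock at rest at distance radius_m from the
    center of a non-rotating mass, per second measured far away
    (Schwarzschild approximation; an assumption of this sketch)."""
    schwarzschild_radius = 2.0 * G * mass_kg / C ** 2
    if radius_m <= schwarzschild_radius:
        raise ValueError("radius must lie outside the Schwarzschild radius")
    return math.sqrt(1.0 - schwarzschild_radius / radius_m)


if __name__ == "__main__":
    # A clock moving at 60% of the speed of light.
    print(velocity_dilation(0.6 * C))
    # A clock on Earth's surface (approximate mass and mean radius).
    print(gravity_dilation(5.972e24, 6.371e6))
```

At 60% of the speed of light the moving clock ticks about 0.8 seconds for every second of the observer's time, while on Earth's surface the gravitational effect is under one part in a billion, which is why neither effect is noticeable in everyday life.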

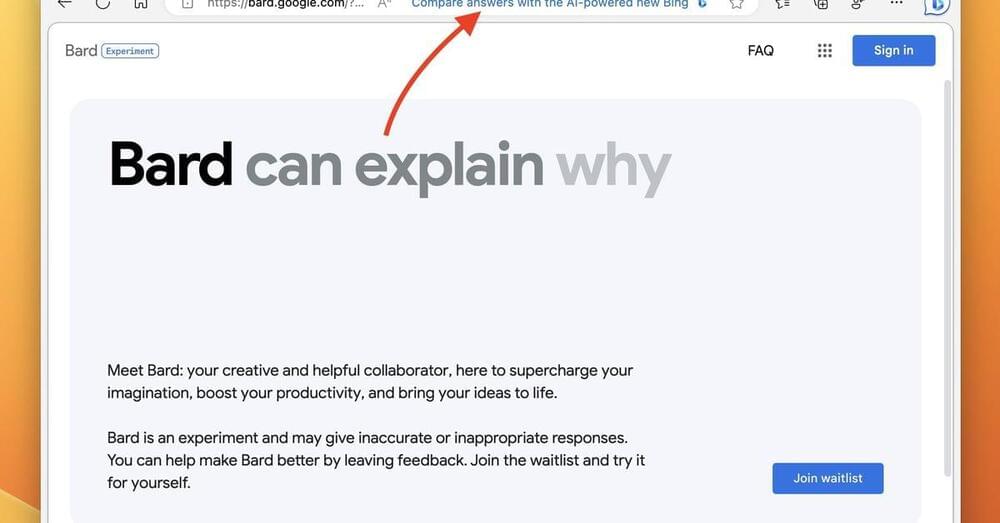

A developer release of Microsoft’s Edge browser has a new address bar advertisement for the company’s Bing AI — but it only appears when the user goes to its main competitor’s site at bard.google.com.

Microsoft’s Edge can be a great Chromium browser alternative to Google’s Chrome, but the former is displaying some annoying new rivalry antics: advertising Microsoft’s Bing AI chatbot while you’re trying out Google’s Bard AI. As pointed out by developer and Twitter user Vitor de Lucca, a new developer version of Edge will now display a new Bing ad next to the Google Bard URL.

When pointing Edge to bard.google.

Microsoft adds a Bing AI ad to Edge’s address bar when trying Google Bard.

VC firms including Tiger Global, Sequoia Capital, Andreessen Horowitz, Thrive and K2 Global are picking up new shares, according to documents seen by TechCrunch. A source tells us Founders Fund is also investing. Altogether the VCs have put in just over $300 million at a valuation of $27 billion to $29 billion.

Updated to note that the Microsoft investment closed in January. The money from VCs reported here, part of a tender offer, is separate to that.

OpenAI, the startup behind the widely used conversational AI model ChatGPT, has picked up new backers, TechCrunch has learned.

There is a new war happening online: the browser war. Tech companies everywhere are seeking to integrate AI in browsers in an attempt to please and attract users looking for the best possible search experience.

This is according to a new article by FOX Business published on Friday.

This phenomenon was amplified with Microsoft’s announcement earlier this year that it would roll out a new AI-powered version of its Bing search engine and Edge web browser that will incorporate OpenAI’s ChatGPT.

“The new capability of low thermal budget growth on an 8-inch scale enables the integration of this material with silicon CMOS technology and paves the way for its future electronics application.”

With our pockets and houses filling with electronic gadgets, and AI and Big Data fueling the rise of data centers, there is a need for more computer chips that are more powerful and denser than ever.

These chips are traditionally made with bulky 3D materials, which makes stacking them into layers difficult.

The quarterly reports by these tech behemoths show their efforts to increase AI productivity in the face of growing economic worries.

US tech giants such as Alphabet, Microsoft, Amazon, and Meta are increasing their large language model (LLM) investments as a show of their dedication to utilizing the power of artificial intelligence (AI) while cutting costs and jobs.

Since the launch of OpenAI’s ChatGPT chatbot in late 2022, these businesses have put their AI models on steroids to compete in the market, CNBC reported on Friday.


“We can now ask the robots about past and future missions and get an answer in real-time.”

A team of programmers has outfitted Boston Dynamics’ robot dog, Spot, with OpenAI’s ChatGPT and Google’s Text-to-Speech speech modulation in a viral video.

Santiago Valdarrama, a machine learning engineer, tweeted about the successful integration, which allows the robot to answer questions about its missions in real time, considerably boosting data query efficiency.