The demo is clever and questionably real, and it prompts a lot of questions about how this device will actually work.

Buzz has been building around the secretive tech startup Humane for over a year, and now the company is finally offering a look at what it’s been working on. At TED last month, Humane co-founder Imran Chaudhri demonstrated the AI-powered wearable the company is developing as a replacement for smartphones. Bits of the video leaked online after the event, but the full video is now available to watch.

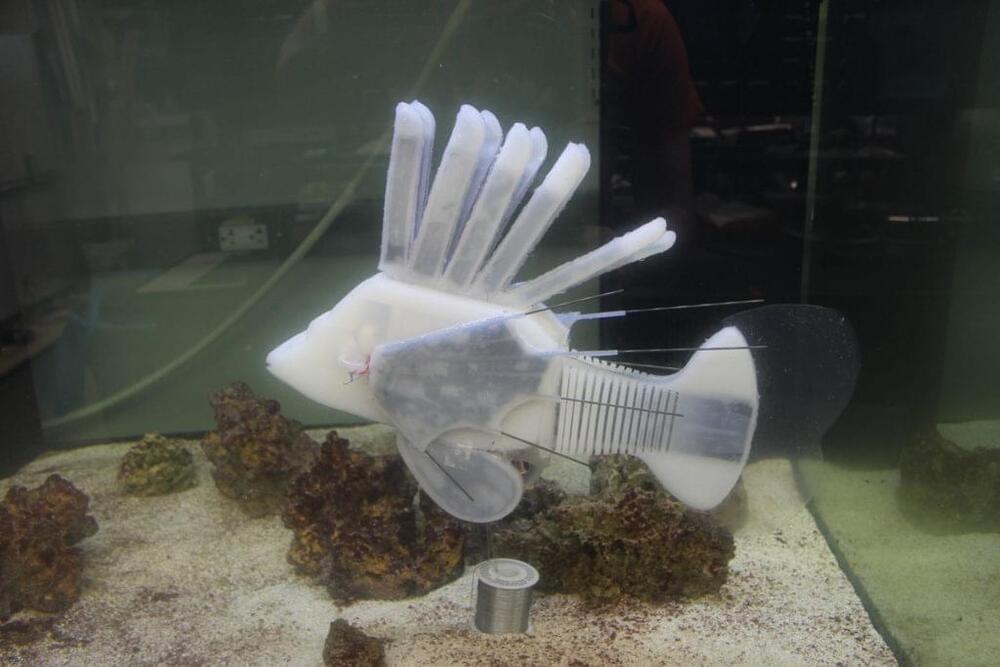

The device appears to be a small black puck that slips into your breast pocket, with a camera, projector, and speaker sticking out the top.

From a designer with two decades’ experience at Apple.