Year 2021 😗

It only took the robotic arm 90 minutes of both virtual and physical training to learn to play the game.

“It’s not worse than a regular human player,” Zelle said. “It’s already on par with me.”

Advances in AI mean robots could be doing your weekly shop by the 2030s, according to a new study — and this could help close the gender gap. Here’s how.

Additionally, with the boom of artificial intelligence (AI) and large language models, Astro’s capabilities will only continue to improve, allowing it to handle increasingly challenging queries and requests. Amazon is investing billions of dollars into its SageMaker platform as a means to “Build, train, and deploy machine learning (ML) models for any use case with fully managed infrastructure, tools, and workflows.” Furthermore, the company’s Bedrock platform enables the “development of generative AI applications using [foundational models] through an API, without managing infrastructure.” Undoubtedly, Amazon has the resources and technical prowess to make significant strides in generative AI and machine learning, and will increasingly do so in the coming years.

However, it is important to note that Astro is not the only gladiator in the arena. AI enthusiast and Tesla CEO Elon Musk announced last year that Tesla is actively working on developing a humanoid robot named “Optimus.” The goal behind the project will be to “Create a general purpose, bi-pedal, autonomous humanoid robot capable of performing unsafe, repetitive or boring tasks. Achieving that end goal requires building the software stacks that enable balance, navigation, perception and interaction with the physical world.” Musk has also said that the bot will be powered by Tesla’s advanced AI technology, meaning that it will be an intelligent and self-teaching bot that can respond to second-order queries and commands. Again, with enough time and testing, this technology can be leveraged in a positive way for healthcare-at-home needs and many more potential uses.

This is certainly an exciting and unprecedented time across multiple industries, including artificial intelligence, advanced robotics, and healthcare. The coming years will assuredly push the bounds of this technology and its applications. This advancement will undoubtedly bring with it certain challenges; however, if done correctly, it may also benefit millions of people globally.

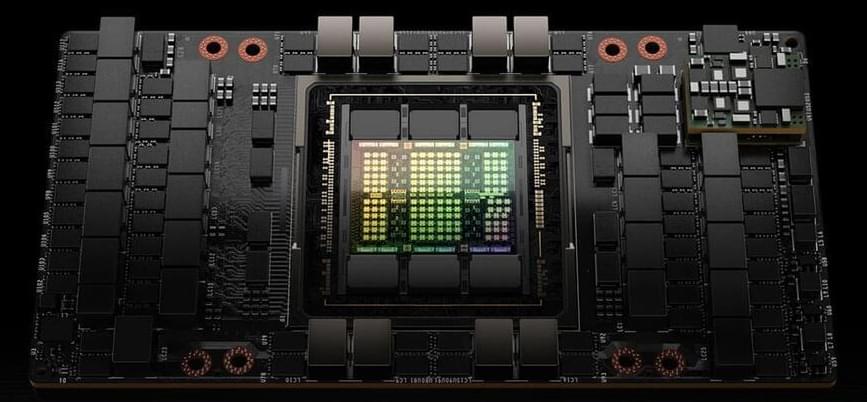

In case anyone is wondering how advances like ChatGPT are possible while Moore’s Law is dramatically slowing down, here’s what is happening:

Nvidia’s latest chip, the H100, can do 34 teraFLOPS of FP64, the 64-bit floating-point format that supercomputers are ranked on. But this same chip can do 3,958 teraFLOPS of FP8 on its Tensor Cores. FP8 uses one-eighth as many bits as FP64, so each number is stored far less precisely. Also, Tensor Cores accelerate matrix operations, particularly matrix multiplication and accumulation, which are used extensively in deep learning calculations.

So by specializing in operations that AI cares about, the speed of the computer is increased by over 100 times!
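To make the arithmetic behind that claim concrete, here is a minimal sketch that uses only the two throughput figures quoted above. The variable and function names are illustrative, and the numbers are peak figures as stated in the text, not measurements.

```python
# Peak throughput figures as quoted in the text above, in teraFLOPS.
# These are stated specs, not benchmark results.
FP64_TFLOPS = 34.0
FP8_TENSOR_TFLOPS = 3958.0


def speedup(specialized: float, baseline: float) -> float:
    """Return how many times higher the specialized throughput is."""
    return specialized / baseline


ratio = speedup(FP8_TENSOR_TFLOPS, FP64_TFLOPS)

# FP64 stores each number in 64 bits, FP8 in 8 bits.
bits_ratio = 64 // 8

print(f"FP8 Tensor Core vs FP64 throughput: about {ratio:.0f}x")
print(f"Bits per number, FP64 vs FP8: {bits_ratio}x")
```

The throughput ratio comes out a little above 100, which matches the "over 100 times" figure, while each number is stored in only an eighth as many bits.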

A massive leap in accelerated compute.

Spotify ramps up policing after complaints of ‘artificial streaming.’

Spotify, the world’s most popular music streaming subscription service, has reportedly pulled tens of thousands of songs from its platform. The songs were uploaded by the AI company Boomy, which came under suspicion of ‘artificial streaming.’

Spotify took down around 7% of the AI-generated tracks created by Boomy, whose users have, to date, created a total of 14,591,095 songs, which the company claims is 13.95% of the world’s recorded music.

On Wednesday, Google unveiled the second generation of its Pathways Language Model (PaLM), called PaLM 2. The new large language model (LLM) will power the latest version of the company’s ChatGPT-rivalling artificial intelligence (AI) chatbot, Bard, and Google has claimed to have significantly improved the capabilities of its latest AI model over its predecessor. The list of upgrades to PaLM is similar to the changes that OpenAI announced with the release of its latest LLM, Generative Pre-trained Transformer (GPT)-4, but with a few key differences.

What is Google PaLM 2?

In a blog post announcing the rollout, Zoubin Ghahramani, vice-president at Google’s AI research division DeepMind, said that PaLM 2 is a “state-of-the-art language model with improved multilingual, reasoning and coding capabilities.”

Artificial intelligence could potentially replace 80% of jobs “in the next few years,” according to AI expert Ben Goertzel.

Goertzel, the founder and chief executive officer of SingularityNET, told France’s AFP news agency at a summit in Brazil last week that a future like that could come to fruition with the introduction of systems like OpenAI’s ChatGPT.

“I don’t think it’s a threat. I think it’s a benefit. People can find better things to do with their life than work for a living… Pretty much every job involving paperwork should be automatable,” he said.

And imagine if every penny sunk into this were available for AI research right now.

Meta sank tens of billions into its CEO’s virtual reality dream, but what will he do next?

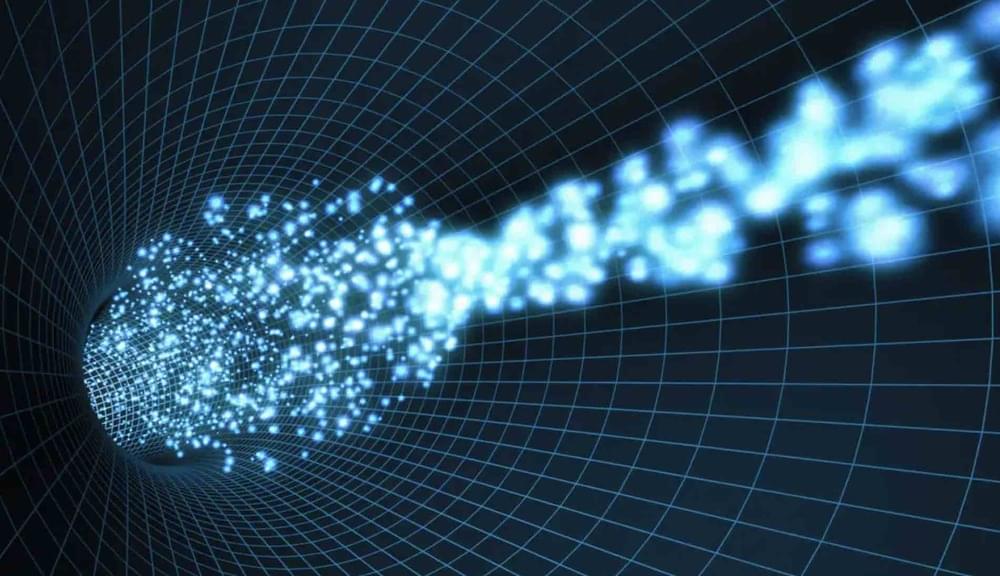

An algorithm that allows more precise forecasts of the positions and velocities of a beam’s distribution of particles as it passes through an accelerator has been developed by researchers with the Department of Energy (DOE) and the University of Chicago.

The linear accelerator at the DOE’s SLAC National Accelerator Laboratory fires bursts of close to one billion electrons, traveling at nearly light speed, through long metallic pipes to generate its particle beam. Located in Menlo Park, California, the facility, originally called the Stanford Linear Accelerator Center, has used its 3.2-kilometer accelerator since its construction in 1962 to propel electrons to energies as great as 50 gigaelectronvolts (GeV).

The powerful particle beam generated by SLAC’s linear accelerator is used in the study of everything from innovative materials to the behavior of molecules on the atomic scale. Yet the beam itself remains somewhat mysterious, since researchers have a hard time gauging its appearance as it passes through an accelerator.