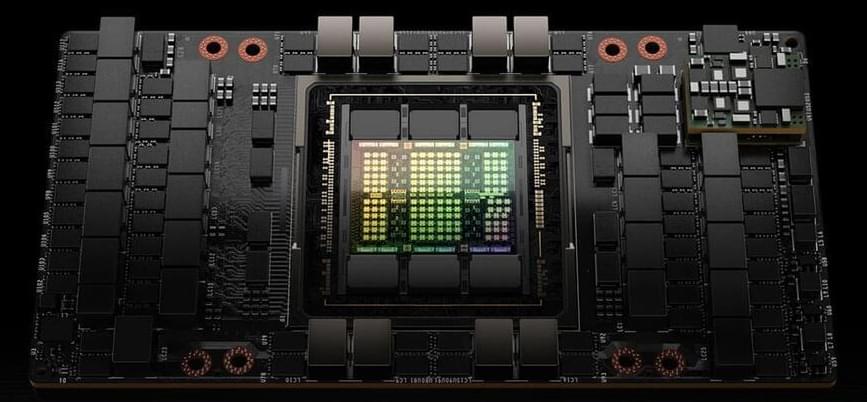

Additionally, with the boom in artificial intelligence (AI) and large language models, Astro’s ability to handle increasingly challenging queries and requests will only continue to improve. Amazon is investing billions of dollars into its SageMaker platform as a means to “Build, train, and deploy machine learning (ML) models for any use case with fully managed infrastructure, tools, and workflows.” Furthermore, the company’s Bedrock platform enables the “development of generative AI applications using [foundational models] through an API, without managing infrastructure.” Amazon clearly has the resources and technical prowess to make significant strides in generative AI and machine learning, and will likely continue to do so in the coming years.

However, it is important to note that Astro is not the only gladiator in the arena. AI enthusiast and Tesla CEO Elon Musk announced last year that Tesla is actively developing a humanoid robot named “Optimus.” The goal of the project is to “Create a general purpose, bi-pedal, autonomous humanoid robot capable of performing unsafe, repetitive or boring tasks. Achieving that end goal requires building the software stacks that enable balance, navigation, perception and interaction with the physical world.” Musk has also assured the public that the bot will be powered by Tesla’s advanced AI technology, meaning that it will be an intelligent, self-teaching bot that can respond to second-order queries and commands. Again, with enough time and testing, this technology could be leveraged for healthcare-at-home needs and many other potential uses.

This is certainly an exciting and unprecedented time across multiple industries, including artificial intelligence, advanced robotics, and healthcare. The coming years will assuredly push the bounds of this technology and its applications. This advancement will undoubtedly bring certain challenges; however, if developed and deployed correctly, it may also benefit millions of people globally.