From the surreal world of AI-generated films to a nanoscale robotic hand, check out this week’s awesome tech stories from around the web.

I believe that every function in trade book publishing today can be automated with the help of generative AI. And, if this is true, then the trade book publishing industry as we know it will soon be obsolete. We will need to move on.

There are two quick provisos, however. The first is straightforward: this is not just about ChatGPT—or other GPTs (generative pretrained transformers) and LLMs (large language models). A range of associated technologies and processes can and will be brought into play that augment the functionality of generative AI. But generative AI is the key ingredient. Without it, what I’m describing is impossible.

The second proviso is of a different flavor. When you make absolutist claims about a technology, people will invariably try to defeat you with another absolute. If you claim that one day all cars will be self-driving, someone will point out that this won’t apply to Formula One race cars. Point taken.

The new approach, which takes cues from NASA’s methods for sending data through deep space, could revolutionize EV infrastructures by enabling electric vehicles and autonomous factory machines to charge while driving.

Stability AI became a $1 billion company with the help of a viral AI text-to-image generator and — per interviews with more than 30 people — some misleading claims from founder Emad Mostaque.

Emad Mostaque is the modern-day Renaissance man who kicked off the AI gold rush. The Oxford master’s degree holder is an award-winning hedge fund manager, a trusted confidant to the United Nations and the tech founder behind Stable Diffusion — the text-to-image generator that broke the internet last summer and, in his words, pressured OpenAI to launch ChatGPT, the bot that mainstreamed AI.

A raft of industry experts have given their views on the likely impact of artificial intelligence on humanity in the future. The responses are unsurprisingly mixed.

The Guardian has published an interesting article on the potential socioeconomic and political impact of the ever-increasing rollout of artificial intelligence (AI) on society. The paper asked various experts in the field about the subject, and the responses were, not surprisingly, a mixed bag of doom, gloom, and hope.


“I don’t think the worry is of AI turning evil or AI having some kind of malevolent desire,” Jessica Newman, director of University of California Berkeley’s Artificial Intelligence Security Initiative, told the Guardian. “The danger is from something much more simple, which is that people may program AI to do harmful things, or we end up causing harm by integrating inherently inaccurate AI systems into more and more domains of society,” she added.

Continued thoughts 💭 about humanity and our beloved AI

Maybe interesting.

Philosophy & Literature (@philosophyofexistence) on Instagram: ‘Aristotle’s Paradox of Time’

Researchers at Washington State University have been monitoring the challenges honeybees face for nearly 20 years, and they said this year could be one of the worst in decades for the important pollinators.

However, they have also been working to create robot bees to help with pollination. KCBS Radio’s Holly Quan spoke about the project with Ryan Bena, a PhD student at the University of Southern California and co-author of the study.

“Essentially we built this robot – it’s about 95 milligrams,” he explained. “So it’s roughly the size of… an actual insect bee. And we use flapping wings. So for flapping wings to fly and control the bee, you know, fly through the air… what’s unique and sort of interesting about our particular robot is that we finally developed a way to coordinate the flapping of these four wings so that we can control the bee in every direction.”

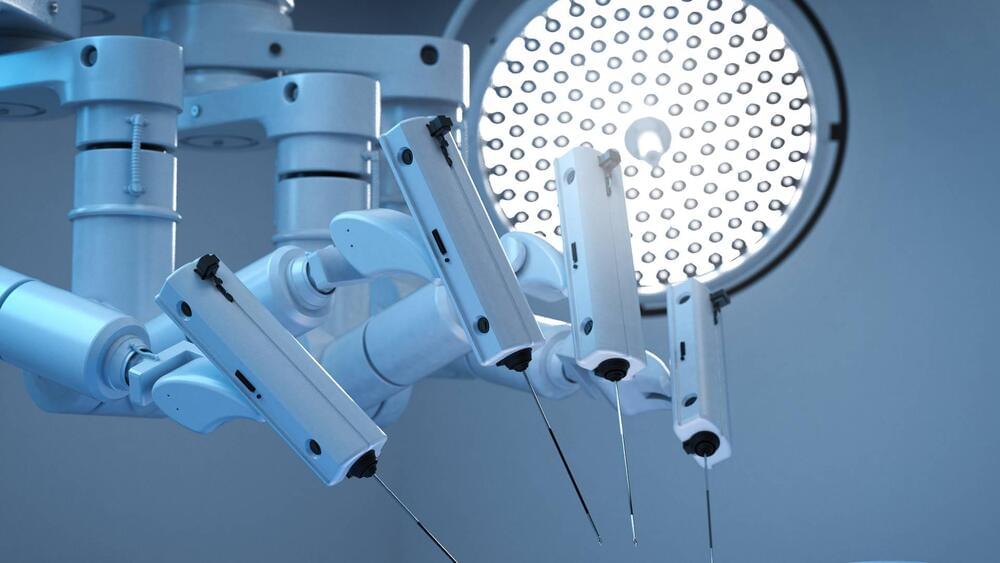

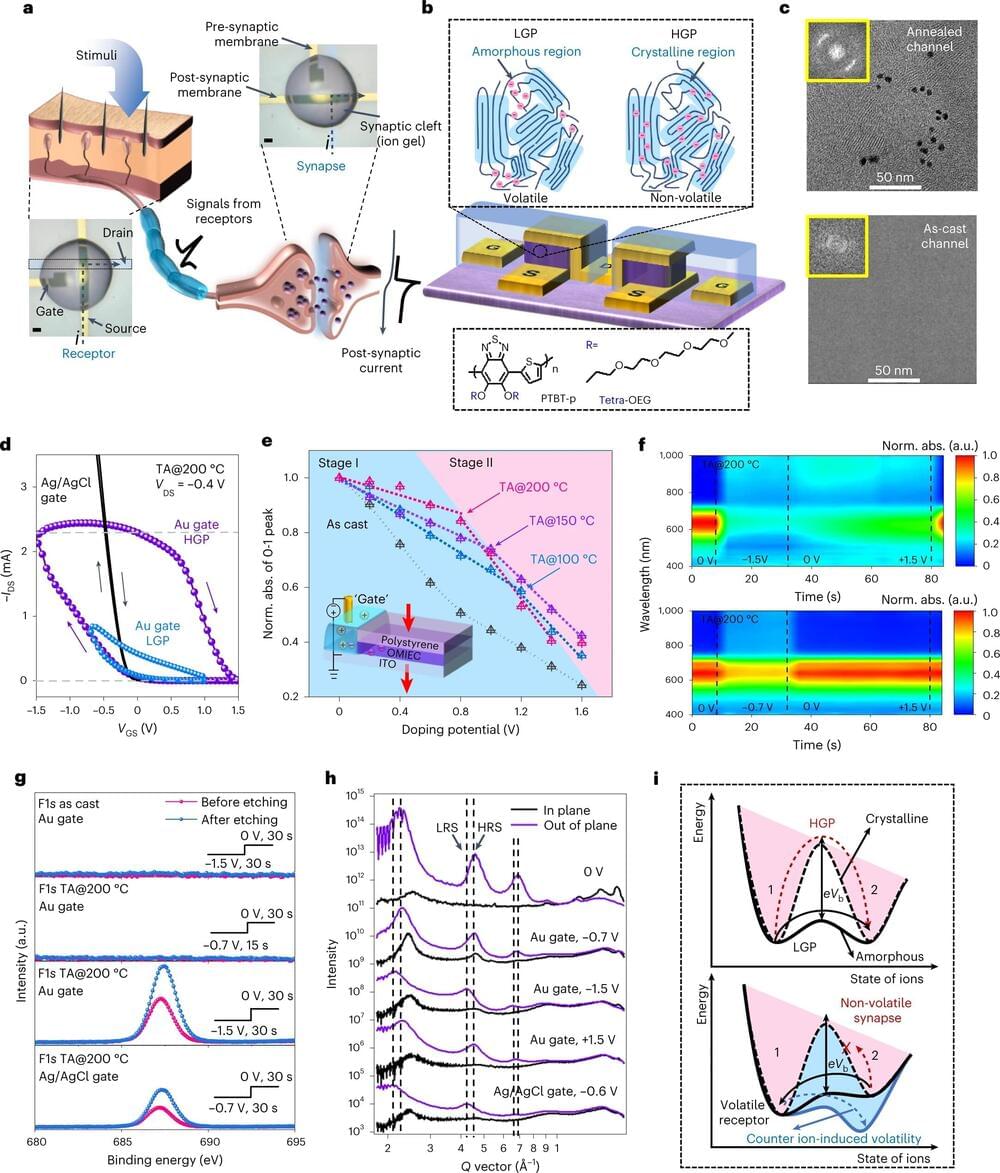

In recent years, electronics engineers have been trying to develop new brain-inspired hardware that can run artificial intelligence (AI) models more efficiently. While most existing hardware is specialized in either sensing, processing or storing data, some teams have been exploring the possibility of combining these three functionalities in a single device.

Researchers at Xi’an Jiaotong University, the University of Hong Kong and Xi’an University of Science and Technology introduced a new organic transistor that can act as a sensor and processor. This transistor, introduced in a paper published in Nature Electronics, is based on a vertical traverse architecture and a crystalline-amorphous channel that can be selectively doped by ions, allowing it to switch between two reconfigurable modes.

“Conventional artificial intelligence (AI) hardware uses separate systems for data sensing, processing, and memory storage,” Prof. Wei Ma and Prof. Zhongrui Wang, two of the researchers who carried out the study, told Tech Xplore.