The ERP literature has also reported findings on the P600 in language processing. This component is commonly observed in the 500–900 ms time window, with a parietal topography57. The P600 was initially thought to reflect the manipulation of syntactic information57, but it has since been associated with conflict monitoring58,59,60. A P600 response is elicited by a wide range of violations, including phrase structure violations61, semantic violations in extended discourse contexts62, and violations of subject-verb number agreement63, pronoun case47, verb inflection64 and subjacency65. The P600 has consistently been found to differentiate syntactically congruent from incongruent structures (e.g.,27,66,67). It has been debated, however, whether the P600 responses observed in these cases are specific to syntactic processing or instead reflect more domain-general processes such as attention, context updating or learning27,47,68,69. Fitz and Chang70 proposed a neural network model in which the P600 corresponds to the prediction error at the sequencing layer. Their work suggests that the recorded ERP components could result from learning processes that help the system adapt to new input.

Studies have also suggested that the P600 reflects an integration process in the comprehension of the visual world. Sitnikova et al.71 presented movie clips of real-world activities with two types of endings: congruent and incongruent with the context. Violations of the expected event elicited a P600, which led the authors to conclude that comprehension of real-world visual events requires the mediation of two mechanisms, indexed by the N400 and the P600. Differences in language processing in individuals with ASD are also reflected in P600 amplitude and latency. In studies of linguistic violations, ASD groups showed longer reaction times72 and more broadly distributed P600 effects73. These P600 variations were associated with a higher attentional cost and with compensatory strategies. However, studies that examine the P600 response specifically to incongruency in individuals with ASD remain scarce.

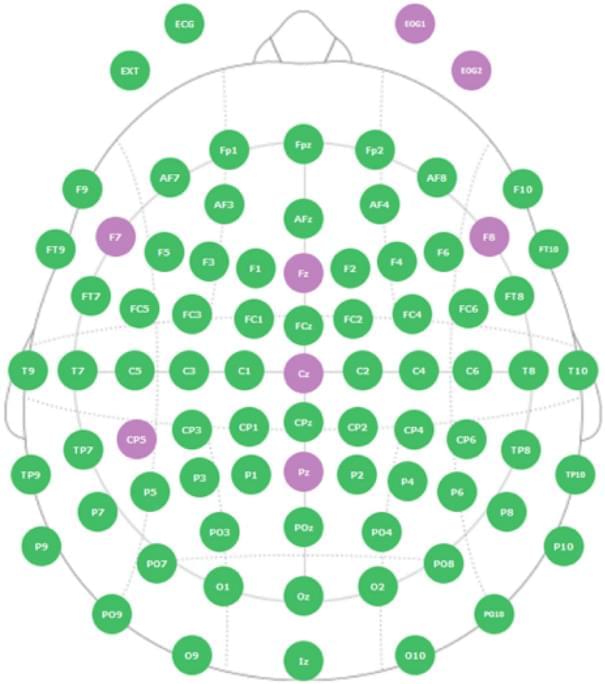

Given the mixed results commonly reported in studies of ASD, it is important to identify paradigms capable of detecting differences in neural responses to contextual language processing. In this study, we investigate brain processing in children with ASD in relation to their difficulties in interpreting language in context. To this end, we studied the detection of contextual incongruencies. We applied a task that required integrating visual and auditory information to assess whether a sentence contradicts the context (incongruent condition) or matches it (congruent condition). The incongruent condition included two categories: i) trials with grammatically correct sentences, and ii) trials with grammatically incorrect sentences containing semantic errors. We used a 2 × 2 design in which images (context) were accompanied by an oral description (language) that was either congruent or incongruent with the image. We examined the amplitudes of the N400 and P600 ERP components in children with ASD and typically developing controls, assessing both group differences and differences between conditions within each group.

We hypothesized that children in the typically developing group would detect the incongruencies and, in response, show significantly larger N400 and P600 amplitudes in the incongruent conditions than in the congruent conditions. We also expected the ASD group to have difficulty detecting incongruencies between the context and the description. For group comparisons, we expected significant differences in N400 and P600 amplitudes in the incongruent conditions, with larger amplitudes in the typically developing group.
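Condition differences in ERP components are often quantified as the mean amplitude within a component's time window, contrasted between conditions. The sketch below illustrates that idea only; it is not the analysis pipeline used in this study. It assumes each trial epoch is a list of voltages already averaged over parietal electrodes, recorded at a hypothetical 500 Hz with a hypothetical epoch start 200 ms before stimulus onset. The default 500–900 ms window is the P600 window described in the text, and the function names are illustrative.

```python
from statistics import fmean

# Hypothetical recording parameters (assumptions, not taken from the study)
SAMPLING_RATE_HZ = 500   # samples per second
EPOCH_START_MS = -200    # epoch begins 200 ms before stimulus onset


def mean_amplitude(epoch, start_ms, end_ms, fs=SAMPLING_RATE_HZ, t0_ms=EPOCH_START_MS):
    """Mean voltage of one epoch in [start_ms, end_ms), times relative to stimulus onset."""
    # Convert times (ms) to sample indices within the epoch
    first = round((start_ms - t0_ms) * fs / 1000)
    last = round((end_ms - t0_ms) * fs / 1000)
    window = epoch[first:last]
    if not window:
        raise ValueError("time window falls outside the epoch")
    return fmean(window)


def condition_effect(congruent_epochs, incongruent_epochs, start_ms=500, end_ms=900):
    """Incongruent minus congruent mean amplitude in the window, averaged over trials."""
    con = fmean(mean_amplitude(e, start_ms, end_ms) for e in congruent_epochs)
    inc = fmean(mean_amplitude(e, start_ms, end_ms) for e in incongruent_epochs)
    return inc - con


if __name__ == "__main__":
    # Toy epochs spanning -200 to 1000 ms (600 samples at 500 Hz)
    congruent = [[0.0] * 600]
    # Incongruent trial with a +2 µV deflection between 500 and 900 ms
    incongruent = [[2.0 if 350 <= i < 550 else 0.0 for i in range(600)]]
    print(condition_effect(congruent, incongruent))
```

Averaging within a fixed window, rather than taking a single peak value, is a common choice for broad, late components such as the P600, whose peaks are often poorly defined in individual trials.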