Google CEO Sundar Pichai told 60 Minutes the company’s founding motto includes more nuance, but still underpins its values.

Sam Harris is an American author, philosopher, neuroscientist, and podcast host.

His work touches on a wide range of topics, including rationality, religion, ethics, free will, neuroscience, meditation, philosophy of mind, politics, terrorism, and artificial intelligence.

His academic background is in philosophy and cognitive neuroscience.

Finally, stories born from paranoia teach you to see A.I. as the ultimate surveillance tool, watching your every eye movement and jiggle of your mouse. But what if it’s used instead to catch you doing things well, and to foster trust between managers and employees?

With the ability to compile reports of your accomplishments—or even assess their quality—A.I. can help managers better appreciate the output of their employees, rather than relying on quantified inputs, like time spent at your desk. It can watch out for deadlines and critical paths, automatically steering you toward the work that’s most urgent. And if you do fall behind on deadlines, A.I. can let your manager know: They don’t have to poke their nose in all the time just to catch the one time you fell behind. With A.I. helping everyone focus their attention to match intentions as they do their work, managers can instead spend their time investing in ways to support their team and grow individuals.

The way we work right now will soon look vestigial, a kind of social scaffolding in our journey to build something better. We know that A.I. will transform the future of work. Will the future edifices of our labor be austere, brutalist towers that callously process resources? Or will they be beautiful, intricate monuments to growth and thriving?

Hexagon, a Nordic company focused on digital reality, is collaborating with Nvidia to enable industrial digital twins to capture data in real time.

Digital twins are digital replicas of real-world designs such as factories or buildings. The idea of the collaboration is to unite reality capture, manufacturing twins, AI, simulation and visualization to deliver real-time comparisons to real-world models.

Connecting artificial intelligence systems to the real world through robots and designing them using principles from evolution is the most likely way AI will gain human-like cognition, according to research from the University of Sheffield.

In a paper published in Science Robotics, Professor Tony Prescott and Dr. Stuart Wilson from the University’s Department of Computer Science say that AI systems are unlikely to resemble real brain processing if they remain disembodied, no matter how large their neural networks or the datasets used to train them might become.

Current AI systems, such as ChatGPT, use large neural networks to solve difficult problems, such as generating intelligible written text. These networks teach AI to process data in a way that is inspired by the human brain and also learn from their mistakes in order to improve and become more accurate.

In the film “Top Gun: Maverick,” Maverick, played by Tom Cruise, is charged with training young pilots to complete a seemingly impossible mission—to fly their jets deep into a rocky canyon, staying so low to the ground they cannot be detected by radar, then rapidly climb out of the canyon at an extreme angle, avoiding the rock walls. Spoiler alert: With Maverick’s help, these human pilots accomplish their mission.

A machine, on the other hand, would struggle to complete the same pulse-pounding task. To an autonomous aircraft, for instance, the most straightforward path toward the target is in conflict with what the machine needs to do to avoid colliding with the canyon walls or to stay undetected. Many existing AI methods aren’t able to overcome this conflict, known as the stabilize-avoid problem, and would be unable to reach their goal safely.

MIT researchers have developed a new technique that can solve complex stabilize-avoid problems better than other methods. Their machine-learning approach matches or exceeds the safety of existing methods while providing a tenfold increase in stability, meaning the agent reaches and remains stable within its goal region.

The technology was recently demonstrated by the Australian Army, where soldiers operated a Ghost Robotics quadruped robot using the brain-machine interface. Photo supplied by Australian Army.

New technology is making mind reading possible with positive implications for the fields of healthcare, aerospace and advanced manufacturing.

Researchers from the University of Technology Sydney (UTS) have developed biosensor technology that will allow you to operate devices, such as robots and machines, solely through thought control.

The advanced brain-computer interface was developed by Distinguished Professor Chin-Teng Lin and Professor Francesca Iacopi, from the UTS Faculty of Engineering and IT, in collaboration with the Australian Army and Defence Innovation Hub.

Artificial intelligence is breaking new barriers by the day. Hundreds of worshippers at St. Paul’s Church in Fürth, Bavaria, Germany recently attended a service conducted entirely by AI, with the sermon presented by the AI chatbot ChatGPT.

An hour before the service even began, people formed a long queue outside the 19th-century, neo-Gothic building to witness what the AI could deliver.

The chatbot’s sermon focused mainly on leaving one’s past behind, living in the present, not being afraid of death and maintaining faith in Jesus Christ. Notably, four different AI avatars took turns delivering the sermon and leading the service.

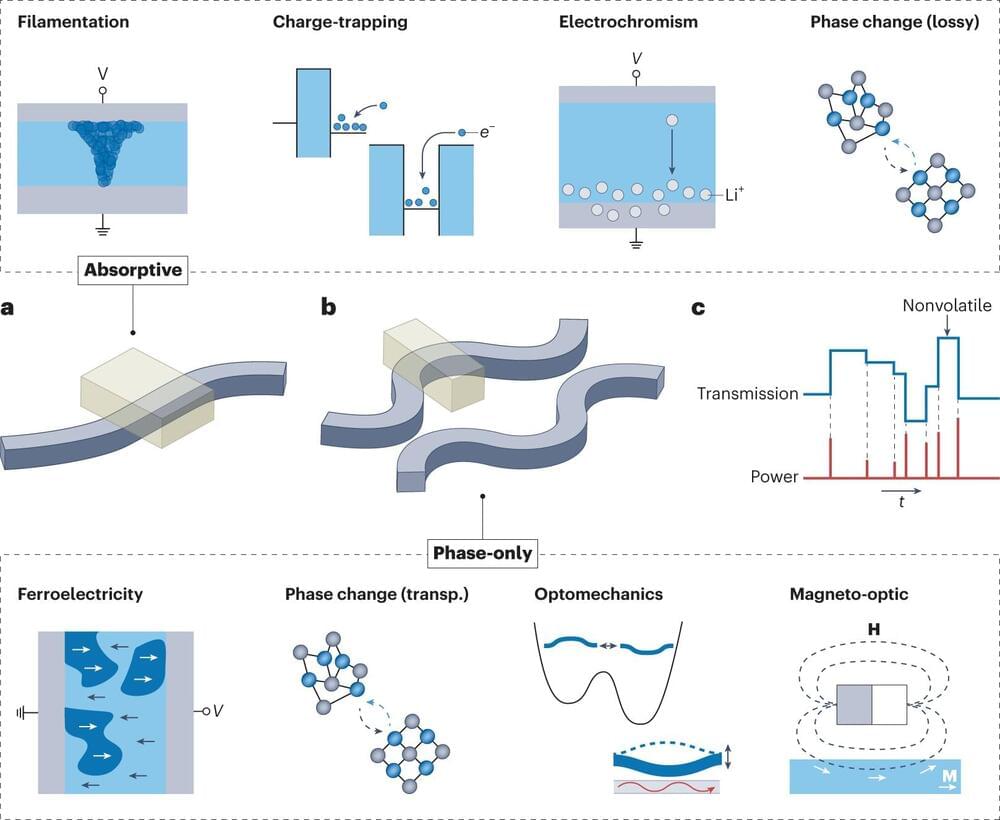

AI, machine learning, and ChatGPT may be relatively new buzzwords in the public domain, but developing a computer that functions like the human brain and nervous system—both hardware and software combined—has been a decades-long challenge. Engineers at the University of Pittsburgh are today exploring how optical “memristors” may be a key to developing neuromorphic computing.

Resistors with memory, or memristors, have already demonstrated their versatility in electronics, with applications as computational circuit elements in neuromorphic computing and compact memory elements in high-density data storage. Their unique design has paved the way for in-memory computing and captured significant interest from scientists and engineers alike.

A new review article published in Nature Photonics, titled “Integrated Optical Memristors,” sheds light on the evolution of this technology—and the work that still needs to be done for it to reach its full potential.