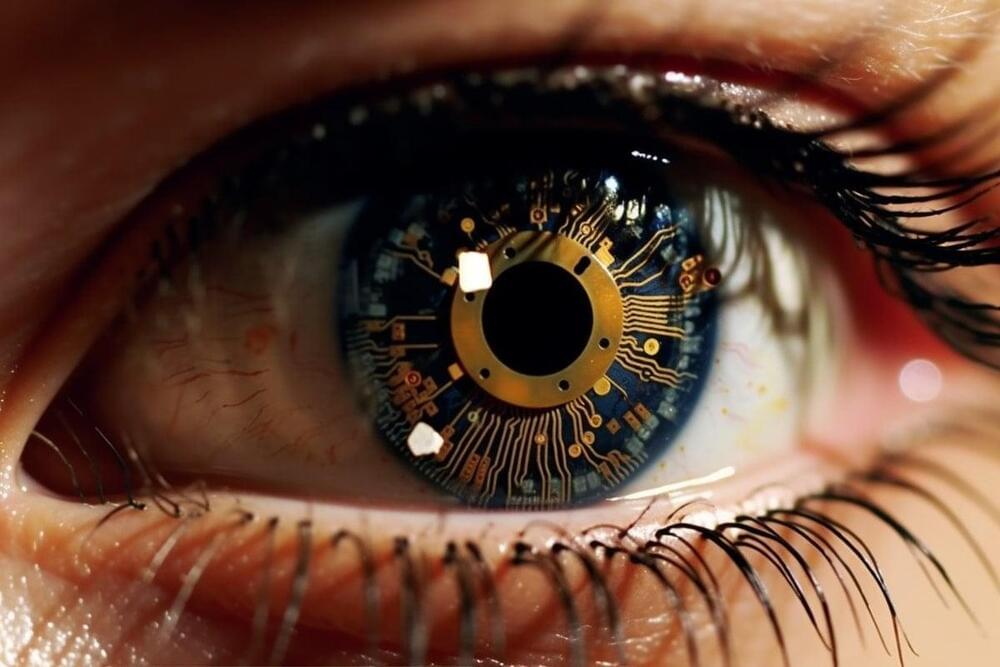

The team’s research demonstrates a working device that captures, processes and stores visual information. Through precise engineering of the doped indium oxide, the device mimics the human eye’s ability to capture light, pre-packages and transmits information like an optic nerve, and stores and classifies it in a memory system, much as the brain does.

Summary: Researchers developed a single-chip device that mimics the human eye’s capacity to capture, process, and store visual data.

This innovation, built around a thin layer of doped indium oxide, could be a significant step toward applications such as self-driving cars, which require quick, complex decision-making. Unlike traditional systems that rely on external, energy-intensive computation, this device combines sensing, information processing, and memory retention in one compact unit.

As a result, it enables real-time decision-making without being hampered by processing extraneous data or being delayed by transferring information to separate processors.

Generative artificial intelligence (AI) has put AI in the hands of people, and those who don’t use it could struggle to keep their jobs in future, Jaspreet Bindra, Founder and MD, Tech Whisperer Ltd. UK, surmised at the Mint Digital Innovation Summit on June 9.
