Gen Zers have long careers ahead and might face more uncertainty about their professional futures than older workers.

Tesla says its long-awaited Dojo supercomputer, which is supposed to bring its self-driving effort to a new level, is finally going into production next month.

Dojo is Tesla’s own custom supercomputer platform, built from the ground up for machine learning and, more specifically, for training on the video data coming from its fleet of vehicles.

The automaker already has a large NVIDIA GPU-based supercomputer that is one of the most powerful in the world, but the new Dojo custom-built computer uses chips and an entire infrastructure designed by Tesla.

Join top executives in San Francisco on July 11–12, to hear how leaders are integrating and optimizing AI investments for success. Learn More

For Star Trek fans and tech nerds, the holodeck concept is a form of geek grail. The idea is an entirely realistic simulated environment where just speaking the request (prompt) seemingly brings to life an immersive environment populated by role-playing AI-powered digital humans. As several Star Trek series envisioned, a multitude of scenes and narratives could be created, from New Orleans jazz clubs to private eye capers. Not only did this imagine an exciting future for technology, but it also delved into philosophical questions such as the humanity of digital beings.

Since it first emerged on screen in 1988, building a holodeck has been a long-running quest for the digerati. Over the years, several companies, including Microsoft and IBM, have set up labs to pursue the underlying technologies. Yet the technical challenges, in both software and hardware, have been daunting. Perhaps AI, and in particular generative AI, can advance these efforts. That is just one vision of how generative AI might contribute to the next generation of technology.


Modern IT networks are complex combinations of firewalls, routers, switches, servers, workstations and other devices. What’s more, nearly all environments are now on-premises/cloud hybrids, and they are constantly under attack by threat actors. The intrepid souls who design, implement and manage these technical monstrosities are called network engineers, and I am one.

Although other passions have taken me from that world into another as a start-up founder, a constant stream of breathless predictions about a world that no longer needs humans in the age of AI prompted me to investigate, at least cursorily, whether ChatGPT could be used as an effective tool to either assist or eventually replace people like me.

The robots will be self-driving.

Estonian ride-hailing firm Bolt has revealed that it will soon begin delivering food to people’s doors using a fleet of autonomous robots. The move stems from a partnership with robotics firm Starship Technologies.

The company will first trial the robot food deliveries in its home city of Tallinn, Estonia, later this year.


This is according to a press release by the firms published on Wednesday.


Engineers at EPFL’s Computational Robot Design & Fabrication (CREATE) lab are training robots to pick fruit by having them practice on a silicone replica that mimics the real thing.

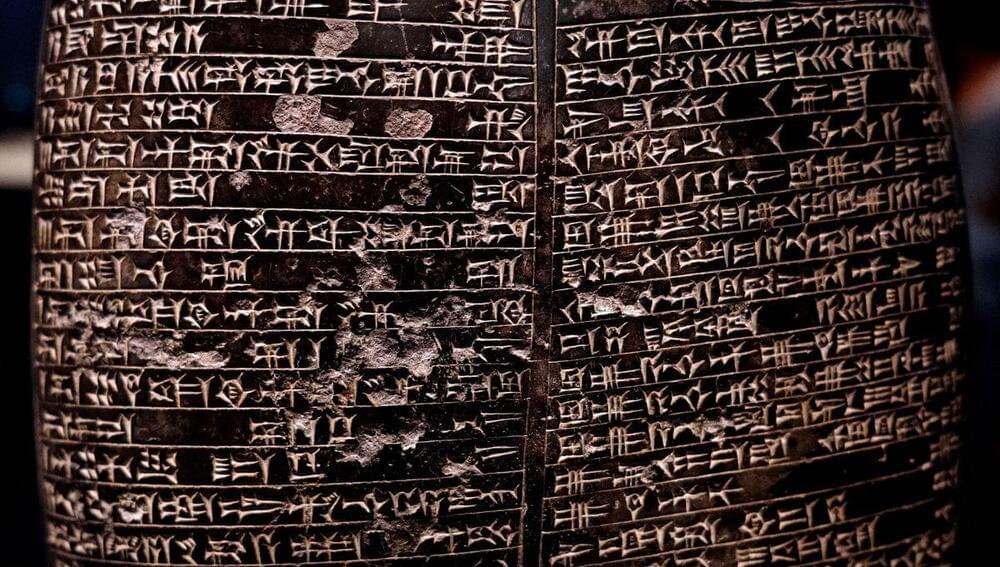

Archaeologists and computer scientists have worked together to create an artificial intelligence (AI) program capable of translating ancient cuneiform texts. The researchers say their goal is for the program to form part of a “human-machine collaboration”, which will assist future scholars in their study of archaic languages.

Cuneiform is thought to be the oldest writing system in the world. Written by pressing wedge-shaped marks into clay tablets, it was originally developed by the Mesopotamians in what is now Iraq, where it started out as a way of keeping track of bread and beer rations. The system quickly spread throughout the ancient Middle East, where it remained in continuous use for over 3,000 years.

Thousands of documents, most written in either Sumerian or Akkadian using the cuneiform script, survive to this day, but translating them can be a major headache. For one thing, there simply aren’t many people with the necessary expertise. For another, the texts are often broken into fragments.

When I’m asked to check a box to confirm I’m not a robot, I don’t give it a second thought—of course I’m not a robot. On the other hand, when my email client suggests a word or phrase to complete my sentence, or when my phone guesses the next word I’m about to text, I start to doubt myself. Is that what I meant to say? Would it have occurred to me if the application hadn’t suggested it? Am I part robot? These large language models have been trained on massive amounts of “natural” human language. Does this make the robots part human?

AI chatbots are new, but public debates over language change are not. As a linguistic anthropologist, I find human reactions to ChatGPT the most interesting thing about it. Looking carefully at such reactions reveals the beliefs about language underlying people’s ambivalent, uneasy, still-evolving relationship with AI interlocutors.

ChatGPT and the like hold up a mirror to human language. Humans are both highly original and unoriginal when it comes to language. Chatbots reflect this, revealing tendencies and patterns that are already present in interactions with other humans.