The technological research firm’s recent survey found that corporate strategists view AI and analytics as paramount to future business success.

Titles like AI Dungeon are already using generative AI to generate in-game content. Nobody knows who owns it.

OpenAI today announced the general availability of GPT-4, its latest text-generating model, through its API.

Starting this afternoon, all existing OpenAI API developers “with a history of successful payments” can access GPT-4. The company plans to open up access to new developers by the end of this month, and then start raising availability limits after that “depending on compute availability.”

“Millions of developers have requested access to the GPT-4 API since March, and the range of innovative products leveraging GPT-4 is growing every day,” OpenAI wrote in a blog post. “We envision a future where chat-based models can support any use case.”

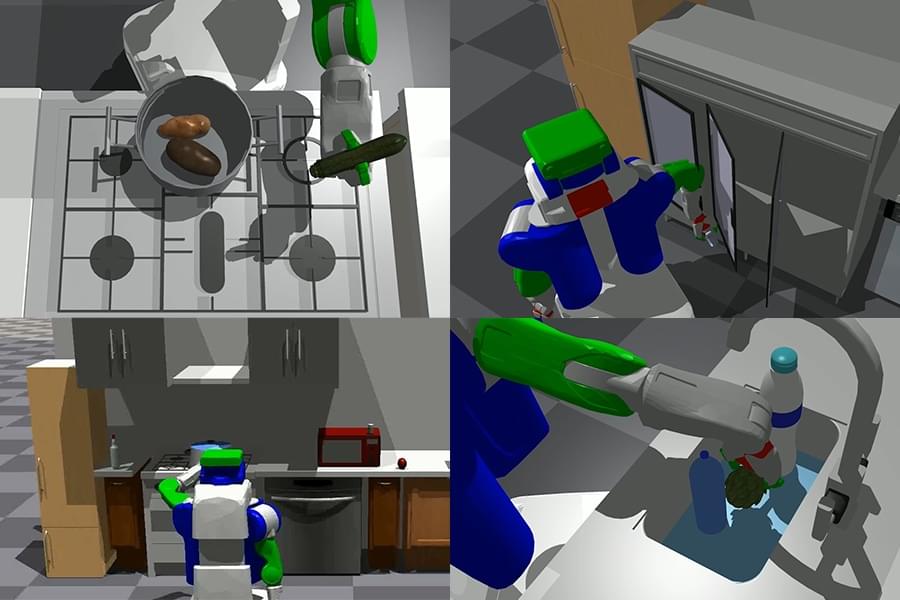

Why aren’t there more robots in homes? This is a surprisingly complex question, and our homes are surprisingly complex places. A big part of the reason autonomous systems are thriving on warehouse and factory floors first is the relative ease of navigating a structured environment. Sure, most systems still require a space be mapped prior to getting to work, but once that’s in place there tends to be little in the way of variation.

Homes, on the other hand, are kind of a nightmare. Not only do they vary dramatically from unit to unit, they’re full of unfriendly obstacles and tend to be fairly dynamic, as furniture is moved around or things are left on the floor. Vacuums are the most prevalent robots in the home, and they’re still being refined after decades on the market.

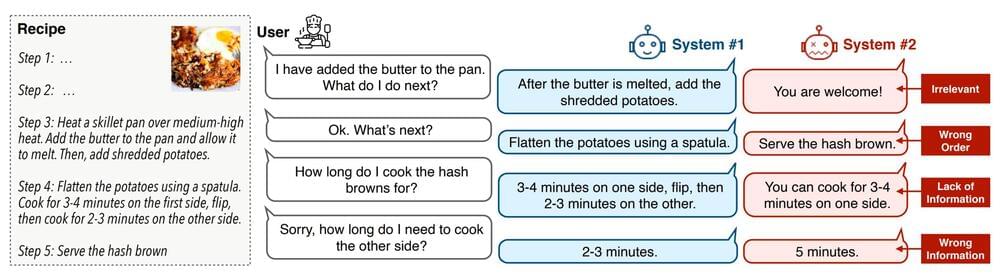

This week, researchers at MIT CSAIL are showcasing PIGINet (Plans, Images, Goal, and Initial facts), which is designed to bring task and motion planning to home robotic systems. The neural network is designed to help streamline their ability to create plans of action in different environments.

YouTube is experimenting with AI-generated quizzes on its mobile app for iOS and Android devices, which are designed to help viewers learn more about a subject featured in an educational video. The feature will also help the video-sharing platform get a better understanding of how well each video covers a certain topic.

The AI-generated quizzes, which YouTube noted on its experiments page yesterday, are rolling out globally to a small percentage of users who watch “a few” educational videos, the company wrote. The quizzes are only available for a select portion of English-language content and will appear on the home feed as links under recently watched videos.

Not all of YouTube’s experiments make it to the platform, so it will be interesting to see if this one sticks around. We’re not sure how many people — especially if they’re no longer in school — want to take a quiz while they scroll through videos.

The Mayo Clinic has reportedly been testing the system since April.

Google’s Med-PaLM 2, an AI tool designed to answer questions about medical information, has been in testing at the Mayo Clinic research hospital, among others, since April, The Wall Street Journal reports.

WSJ reports that an internal email it saw said Google believes its updated model can be particularly helpful in countries with “more limited access to doctors.” Med-PaLM 2 was trained on a curated set of medical expert demonstrations, which Google believes will make it…

Google says doctors prefer its answers, even if they’re less accurate.

Artificial intelligence algorithms, such as the sophisticated natural language processor ChatGPT, are raising hopes, eyebrows and alarm bells in multiple industries. A deluge of news articles and opinion pieces, reflecting both concerns about and promises of the rapidly advancing field, often note AI’s potential to spread misinformation and replace human workers on a massive scale. According to Jonathan Chen, MD, PhD, assistant professor of medicine, the speculation about large-scale disruptions has a kernel of truth to it, but it misses another element when it comes to health care: AI will bring benefits to both patients and providers.

Chen discussed the challenges with and potential for AI in health care in a commentary published in JAMA on April 28. In this Q&A, he expands on how he sees AI integrating into health care.

The algorithms we’re seeing emerge have really popped open Pandora’s box and, ready or not, AI will substantially change the way physicians work and the way patients interact with clinical medicine. For example, we can tell our patients that they should not be using these tools for medical advice or self-diagnosis, but we know that thousands, if not millions, of people are already doing it — typing in symptoms and asking the models what might be ailing them.

The arrangement of electrons in matter, known as the electronic structure, plays a crucial role in both fundamental and applied research, such as drug design and energy storage. However, the lack of a simulation technique that offers both high fidelity and scalability across different time and length scales has long been a roadblock for the progress of these technologies.

Researchers from the Center for Advanced Systems Understanding (CASUS) at the Helmholtz-Zentrum Dresden-Rossendorf (HZDR) in Görlitz, Germany, and Sandia National Laboratories in Albuquerque, New Mexico, U.S., have now pioneered a machine learning–based simulation method that supersedes traditional electronic structure simulation techniques.

Their Materials Learning Algorithms (MALA) software stack enables access to previously unattainable length scales. The work is published in the journal npj Computational Materials.